Shallow Thoughts : : Dec

Akkana's Musings on Open Source Computing and Technology, Science, and Nature.

Sun, 25 Dec 2016

Excellent Xmas to all!

We're having a white Xmas here.

Dave and I have been discussing how "Merry Christmas" isn't

alliterative like "Happy Holidays". We had trouble coming up with a

good C or K adjective to go with Christmas, but then we hit on the

answer: Have an Excellent Xmas! It also has the advantage of

inclusivity: not everyone celebrates the birth of Christ, but Xmas is

a secular holiday of lights, family and gifts, open to people of all

belief systems.

Meanwhile:

I spent a couple of nights recently learning how to photograph Xmas

lights and farolitos.


Farolitos, a New Mexico Christmas tradition, are paper bags, weighted

down with sand, with a candle inside. Sounds modest, but put a row of

them alongside a roadway or along the top of a typical New Mexican

adobe or faux-dobe and you have a beautiful display of lights.

They're also known as luminarias in southern New Mexico, but northern New Mexicans insist that a luminaria is a bonfire, and the little paper bag lanterns should be called farolitos.

They're pretty, whatever you call them.

Locally, residents of several streets in Los Alamos and White Rock set

out farolitos along their roadsides for a few nights around Christmas,

and the county cooperates by turning off streetlights on those

streets. The display on Los Pueblos in Los Alamos is a zoo, a slow

exhaust-choked parade of cars that reminds me of the Griffith Park

light show in LA. But here in White Rock the farolito displays are

a lot less crowded, and this year I wanted to try photographing them.

Canon bugs affecting night photography

I have a little past experience with night photography. I went through

a brief astrophotography phase in my teens (in the pre-digital phase,

so I was using film and occasionally glass plates). But I haven't done

much night photography for years.

That's partly because I've had problems taking night shots with my current digital SLR camera, a Rebel XSi (known outside the US as a Canon 450D). It's old and modest as DSLRs go, but I've resisted upgrading since I don't really need more features.

Except maybe when it comes to night photography. I've tried shooting

star trails, lightning shots and other nocturnal time exposures, and

keep hitting a snag: the camera refuses to take a photo. I'll be in

Manual mode, with my aperture and shutter speed set, with the lens in

Manual Focus mode with Image Stabilization turned off. Plug in the

remote shutter release, push the button ... and nothing happens except

a lot of motorized lens whirring noises, which shouldn't be happening: in MF and non-IS mode the lens should just be sitting there inert, not whirring its motors. I couldn't seem to find a way to convince it that the MF switch meant that, yes, I wanted to focus manually.

It seemed to be primarily a problem with the EF-S 18-55mm kit lens;

the camera will usually condescend to take a night photo with my other

two lenses. I wondered if the MF switch might be broken, but then I

noticed that in some modes the camera explicitly told me I was in

manual focus mode.

I was almost to the point of ordering another lens just for night

shots when I finally hit upon the right search terms and found,

if not the reason it's happening, at least an excellent workaround.

Back Button Focus

I'm so sad that I went so many years without knowing about Back Button Focus.

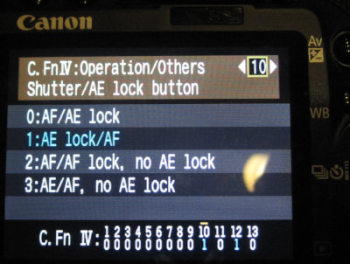

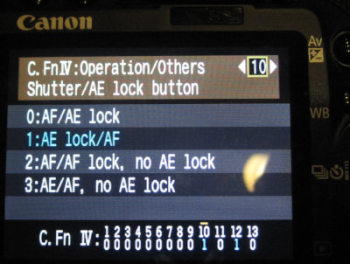

It's well hidden in the menus, under Custom Functions #10.

Normally, the shutter button does a bunch of things. When you press it

halfway, the camera both autofocuses (sadly, even in manual focus mode)

and calculates exposure settings.

But there's a custom function that lets you separate the focus and

exposure calculations. In the Custom Functions menu option #10

(the number and exact text will be different on different Canon models,

but apparently most or all Canon DSLRs have this somewhere),

the heading says: Shutter/AE Lock Button.

Following that is a list of four obscure-looking options:


- AF/AE lock

- AE lock/AF

- AF/AF lock, no AE lock

- AE/AF, no AE lock

The text before the slash indicates what the shutter button, pressed

halfway, will do in that mode; the text after the slash is what

happens when you press the * or AE lock button on the

upper right of the camera back (the same button you use to zoom out

when reviewing pictures on the LCD screen).

The first option is the default: press the shutter button halfway to

activate autofocus; the AE lock button calculates and locks exposure settings.

The second option is the revelation: pressing the shutter button halfway will calculate exposure settings, but does nothing for focus. To focus, press the * (AE lock) button, after which focus will be locked. Pressing the shutter button won't refocus. This mode is called "back button focus" all over the web, but not in the manual.

Back button focus is useful in all sorts of cases.

For instance, if you want to autofocus once then keep the same focus

for subsequent shots, it gives you a way of doing that.

It also solves my night focus problem: even with the bug (whether it's in the lens or the camera) that makes the lens try to autofocus in manual focus mode, pressing the shutter in this mode won't trigger it. The camera assumes it's in focus and goes ahead and takes the picture.

Incidentally, the other two modes in that menu apply to AI SERVO mode

when you're letting the focus change constantly as it follows a moving

subject. The third mode makes the * button lock focus and stop

adjusting it; the fourth lets you toggle focus-adjusting on and off.

Live View Focusing

There's one other thing that's crucial for night shots: live view focusing. Since you can't use autofocus in low light, you have to do the focusing yourself. But most DSLRs' focusing screens aren't good enough that you can look through the viewfinder and get a reliable focus on a star or even a string of holiday lights or farolitos.

Instead, press the SET button (the one in the middle of the

right/left/up/down buttons) to activate Live View (you may have to

enable it in the menus first). The mirror locks up and a preview of

what the camera is seeing appears on the LCD. Use the zoom button (the

one to the right of that */AE lock button) to zoom in; there are two

levels of zoom in addition to the un-zoomed view. You can use the

right/left/up/down buttons to control which part of the field the

zoomed view will show. Zoom all the way in (two clicks of the +

button) to fine-tune your manual focus. Press SET again to exit

live view.

It's not as good as a fine-grained focusing screen, but at least it gets you close. Consider using relatively small apertures, like f/8, since they give you more latitude for focus errors. You'll be doing time exposures on a tripod anyway, so a narrow aperture just means your exposures have to be a little longer than they otherwise would have been.
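To put a number on "a little longer": the light reaching the sensor falls off with the square of the f-number, so at a fixed ISO the exposure time has to grow by the square of the ratio between the new and old f-numbers. A tiny Python sketch of that arithmetic (equivalent_exposure() is just an illustrative name):

```python
def equivalent_exposure(seconds, from_fnumber, to_fnumber):
    """Return the shutter time giving the same exposure at to_fnumber.

    Light reaching the sensor scales as 1/N**2 for f-number N, so at a
    fixed ISO the time must scale as (N_new / N_old)**2.
    """
    return seconds * (to_fnumber / from_fnumber) ** 2


if __name__ == "__main__":
    # Stopping down from f/4 to f/8 is two stops: four times the exposure.
    print(equivalent_exposure(10, 4, 8))  # 40.0
```

So a 10-second exposure at f/4 becomes 40 seconds at f/8, which is still comfortable on a tripod.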

After all that, my Xmas Eve farolitos photos turned out mediocre.

We had a storm blowing in, so a lot of the candles had blown out.

(In the photo below you can see how the light string on the left

is blurred, because the tree was blowing around so much during the

30-second exposure.)

But I had fun, and maybe I'll go out and try again tonight.

An excellent Xmas to you all!

Tags: photography

12:30 Dec 25, 2016

Thu, 22 Dec 2016

I wrote two recent articles on Python packaging:

Distributing Python Packages Part I: Creating a Python Package

and

Distributing Python Packages Part II: Submitting to PyPI.

I was able to get a couple of my programs packaged and submitted.

Ongoing Development and Testing

But then I realized all was not quite right. I could install new releases of

my package -- but I couldn't run it from the source directory any more.

How could I test changes without needing to rebuild the package for

every little change I made?

Fortunately, it turned out to be fairly easy. Set PYTHONPATH to a directory that includes all the modules you normally want to test. For example, inside my bin directory I have a python directory where I can symlink any development modules I might need:

```sh
mkdir ~/bin/python
ln -s ~/src/metapho/metapho ~/bin/python/
```

Then add that directory to PYTHONPATH (if you already use PYTHONPATH for other things, put this directory first):

```sh
export PYTHONPATH=$HOME/bin/python
```

With that, I could test from the development directory again,

without needing to rebuild and install a package every time.
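To confirm that Python really is picking up the development copy rather than an installed one, you can ask a fresh interpreter where it imported the module from (from the shell, `python -c 'import metapho; print(metapho.__file__)'` does the same). A minimal sketch; the imported_from() helper and the throwaway devdemo package are hypothetical stand-ins for your own module and ~/bin/python:

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path


def imported_from(module_name, extra_path):
    """Start a fresh interpreter with extra_path at the front of PYTHONPATH
    and return the file module_name was actually imported from."""
    env = dict(os.environ)
    old = env.get("PYTHONPATH")
    # Prepend extra_path, keeping any existing PYTHONPATH entries after it.
    env["PYTHONPATH"] = extra_path + (os.pathsep + old if old else "")
    result = subprocess.run(
        [sys.executable, "-c",
         f"import {module_name}; print({module_name}.__file__)"],
        env=env, capture_output=True, text=True, check=True)
    return result.stdout.strip()


if __name__ == "__main__":
    # A throwaway stand-in for ~/bin/python holding a hypothetical
    # development package called devdemo.
    with tempfile.TemporaryDirectory() as devdir:
        pkg = Path(devdir, "devdemo")
        pkg.mkdir()
        (pkg / "__init__.py").write_text("__version__ = '0.0.dev'\n")
        print(imported_from("devdemo", devdir))
```

If the printed path points into your installed site-packages instead of your development tree, PYTHONPATH isn't set the way you think it is.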

Cleaning up files used in building

Building a package leaves some extra files and directories around,

and git status will whine at you since they're not

version controlled. Of course, you could gitignore them, but it's

better to clean them up after you no longer need them.

To do that, you can add a clean command to setup.py.

```python
import os
from setuptools import Command

class CleanCommand(Command):
    """Custom clean command to tidy up the project root."""
    user_options = []

    def initialize_options(self):
        pass

    def finalize_options(self):
        pass

    def run(self):
        os.system('rm -vrf ./build ./dist ./*.pyc ./*.tgz ./*.egg-info ./docs/sphinxdoc/_build')
```

(Obviously, that includes file types beyond what you need for just

cleaning up after package building. Adjust the list as needed.)

Then in the call to setup(), add these lines:

```python
    cmdclass={
        'clean': CleanCommand,
    },
```

Now you can type

```sh
python setup.py clean
```

and it will remove all the extra files.
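The rm command in CleanCommand.run() works, but it shells out and depends on rm being available. A sketch of the same cleanup in pure Python, using glob and shutil from the standard library; the clean() function and CLEAN_PATTERNS list are illustrative names, and the patterns simply mirror the ones in that rm command:

```python
import glob
import os
import shutil

# Same targets as the rm -vrf command in CleanCommand.run().
CLEAN_PATTERNS = ["build", "dist", "*.pyc", "*.tgz", "*.egg-info",
                  "docs/sphinxdoc/_build"]


def clean(root="."):
    """Delete build leftovers under root without shelling out to rm.
    Returns the list of paths that were removed."""
    removed = []
    for pattern in CLEAN_PATTERNS:
        for path in glob.glob(os.path.join(root, pattern)):
            if os.path.isdir(path) and not os.path.islink(path):
                shutil.rmtree(path)   # directories like build/ and *.egg-info
            else:
                os.remove(path)       # plain files and symlinks
            print("removed", path)
            removed.append(path)
    return removed

# In setup.py, CleanCommand.run() could then just be:
#     def run(self):
#         clean()
```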

Keeping version strings in sync

It's so easy to update the __version__ string in your module and

forget that you also have to do it in setup.py, or vice versa.

Much better to make sure they're always in sync.

I found several versions of that using system("grep..."), but I decided to write my own that doesn't depend on system(). (Yes, I should do the same thing with that CleanCommand, I know.)

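```python
def get_version():
    '''Read the pytopo module version from pytopo/__init__.py'''
    with open("pytopo/__init__.py") as fp:
        for line in fp:
            line = line.strip()
            if line.startswith("__version__"):
                parts = line.split("=")
                if len(parts) > 1:
                    # Strip whitespace and the quotes around the value,
                    # so __version__ = "1.0" yields 1.0, not "1.0".
                    return parts[1].strip().strip("'\"")
    raise RuntimeError("Couldn't find __version__ in pytopo/__init__.py")
```

Then in setup():

```python
    version=get_version(),
```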

Much better! Now you only have to update __version__ inside your module

and setup.py will automatically use it.
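get_version() splits the line on "=", which is fine as long as the __version__ line stays simple. If it ever picks up a trailing comment, a sketch that uses the standard ast module to parse the assignment is a bit sturdier, and like get_version() it reads the file without importing the module, so missing dependencies won't break setup.py. The name read_version() is just illustrative:

```python
import ast


def read_version(path):
    """Return the value assigned to __version__ in the module at path,
    by parsing its source rather than importing it."""
    with open(path) as fp:
        tree = ast.parse(fp.read(), filename=path)
    for node in tree.body:
        if isinstance(node, ast.Assign):
            for target in node.targets:
                if isinstance(target, ast.Name) and target.id == "__version__":
                    # literal_eval only accepts literals, so this can't run code.
                    return ast.literal_eval(node.value)
    raise RuntimeError("no __version__ found in " + path)

# Usage in setup.py:  version=read_version("pytopo/__init__.py"),
```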

Using your README for a package long description

setup() has a long_description parameter for the package, but you probably already have some sort of README in your package. You can use it for your long description this way:

```python
import os

# Utility function to read the README file.
# Used for the long_description.
def read(fname):
    with open(os.path.join(os.path.dirname(__file__), fname)) as fp:
        return fp.read()
```

and then, in setup():

```python
    long_description=read('README'),
```

Tags: programming, python, open source

10:15 Dec 22, 2016

Sat, 17 Dec 2016

In Part I, I discussed writing a setup.py to make a package you can submit to PyPI.

Today I'll talk about better ways of testing the package,

and how to submit it so other people can install it.

Testing in a VirtualEnv

You've verified that your package installs. But you still need to test

it and make sure it works in a clean environment, without all your

developer settings.

The best way to test is to set up a "virtual environment", where you can

install your test packages without messing up your regular runtime

environment. I shied away from virtualenvs for a long time, but

they're actually very easy to set up:

```sh
virtualenv venv
source venv/bin/activate
```

That creates a directory named venv under the current directory,

which it will use to install packages.

Then you can `pip install packagename` or `pip install /path/to/packagename-version.tar.gz`.

Except -- hold on! Nothing in Python packaging is that easy.

It turns out there are a lot of packages that won't install inside

a virtualenv, and one of them is PyGTK, the library I use for my

user interfaces. Attempting to install pygtk inside a venv gets:

```
********************************************************************
* Building PyGTK using distutils is only supported on windows. *
* To build PyGTK in a supported way, read the INSTALL file. *
********************************************************************
```

Windows only? Seriously? PyGTK works fine on both Linux and Mac;

it's packaged on every Linux distribution, and on Mac it's packaged

with GIMP. But for some reason, whoever maintains the PyPI PyGTK

packages hasn't bothered to make it work on anything but Windows,

and PyGTK seems to be mostly an orphaned project so that's not likely

to change.

(There's a package called ruamel.venvgtk that's supposed to work around

this, but it didn't make any difference for me.)

The solution is to let the virtualenv use your system-installed packages,

so it can find GTK and other non-PyPI packages there:

```sh
virtualenv --system-site-packages venv
source venv/bin/activate
```
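For Python 3 projects, the standard library's venv module has an equivalent option. A sketch that creates the same kind of environment from Python; make_test_env() is a hypothetical helper, and the with_pip flag is only there so you can skip installing pip when you just want to inspect the result:

```python
import venv
from pathlib import Path


def make_test_env(path, with_pip=True):
    """Create a virtual environment at path that can also import
    system-installed packages, like `virtualenv --system-site-packages`.
    Returns the text of the generated pyvenv.cfg for inspection."""
    venv.create(path, system_site_packages=True, with_pip=with_pip)
    return Path(path, "pyvenv.cfg").read_text()

# Usage: make_test_env("venv"), then `source venv/bin/activate` as usual.
```

The generated pyvenv.cfg should contain `include-system-site-packages = true`, which is what lets the environment see distribution-packaged libraries.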

I also found that if I had a ~/.local directory (where packages normally go if I use `pip install --user packagename`), pip would sometimes install to .local instead of the venv. I never did track down why this happened sometimes and not others, but when it happened, a temporary `mv ~/.local ~/old.local` fixed it.
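When pip seems to be installing to the wrong place, it can help to ask Python itself what it thinks is going on. A small diagnostic sketch, assuming Python 3 and a venv-style environment (install_context() is a made-up name); run it with the venv's python:

```python
import site
import sys


def install_context():
    """Report whether we're inside a virtual environment and whether
    user-level (pip install --user) packages are visible."""
    return {
        # In a venv-style environment, sys.prefix points at the venv
        # while sys.base_prefix still points at the base Python.
        "in_venv": sys.prefix != sys.base_prefix,
        "prefix": sys.prefix,
        # ENABLE_USER_SITE may be True, False or None (disabled).
        "user_site_enabled": bool(site.ENABLE_USER_SITE),
        "user_site": site.getusersitepackages(),
    }


if __name__ == "__main__":
    for key, value in install_context().items():
        print(f"{key}: {value}")
```

If in_venv is False, the venv isn't actually active; if user_site_enabled is True, packages under ~/.local are on the path too and may be what pip is seeing.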

Test your Python package in the venv until everything works.

When you're finished with your venv, you can run deactivate

and then remove it with rm -rf venv.

Tag it on GitHub

Is your project ready to publish?

If your project is hosted on GitHub, you can have PyPI download it automatically. In your setup.py, set

```python
    download_url='https://github.com/user/package/tarball/tagname',
```

Check that in. Then make a tag and push it:

```sh
git tag 0.1 -m "Name for this tag"
git push --tags origin master
```

Try to make your tag match the version you've set in setup.py and

in your module.
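Since download_url names the tag, building it from the same version string you pass to setup() is one way to keep the tag, setup.py and your module in step. A sketch; download_url() here is a hypothetical helper, and user/package are placeholders for your GitHub account and repository:

```python
def download_url(user, package, version):
    """Return the GitHub tarball URL for the tag named after version,
    assuming releases are tagged with the bare version string (e.g. 0.1)."""
    return f"https://github.com/{user}/{package}/tarball/{version}"

# In setup.py you might then write (VERSION is a placeholder):
#     VERSION = "0.1"
#     setup(...,
#           version=VERSION,
#           download_url=download_url("user", "package", VERSION),
#           ...)
```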

Push it to pypitest

Register a new account and password on both pypitest and PyPI.

Then create a ~/.pypirc that looks like this:

```ini
[distutils]
index-servers =
    pypi
    pypitest

[pypi]
repository=https://pypi.python.org/pypi
username=YOUR_USERNAME
password=YOUR_PASSWORD

[pypitest]
repository=https://testpypi.python.org/pypi
username=YOUR_USERNAME
password=YOUR_PASSWORD
```

Yes, those passwords are in cleartext. Incredibly, there doesn't seem

to be a way to store an encrypted password or even have it prompt you.

There are tons of complaints about that all over the web but nobody

seems to have a solution.

You can specify a password on the command line, but that's not much better.

So use a password you don't use anywhere else and don't mind too much

if someone guesses.
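Since the passwords sit there in cleartext, it's at least worth making sure ~/.pypirc isn't readable by other users on the machine. `chmod 600 ~/.pypirc` from the shell does the job; here's a sketch of the same check in Python, assuming a Unix-like system (the helper names are hypothetical):

```python
import os
import stat

PYPIRC = os.path.expanduser("~/.pypirc")


def pypirc_is_private(path=PYPIRC):
    """Return True if no group or other permission bits are set."""
    mode = os.stat(path).st_mode
    return not (mode & (stat.S_IRWXG | stat.S_IRWXO))


def make_private(path=PYPIRC):
    """Equivalent of `chmod 600 path`: owner read/write only."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
```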

Update: Apparently there's a newer method called twine that solves the

password encryption problem. Read about it here:

Uploading your project to PyPI.

You should probably use twine instead of the setup.py commands discussed

in the next paragraph.

Now register your project and upload it:

```sh
python setup.py register -r pypitest
python setup.py sdist upload -r pypitest
```

Wait a few minutes: it takes pypitest a little while before new packages

become available.

Then go to your venv (to be safe, maybe delete the old venv and create a

new one, or at least pip uninstall) and try installing:

```sh
pip install -i https://testpypi.python.org/pypi YourPackageName
```

If you get "No matching distribution found for packagename",

wait a few minutes then try again.

If it all works, then you're ready to submit to the real PyPI:

```sh
python setup.py register -r pypi
python setup.py sdist upload -r pypi
```

Congratulations! If you've gone through all these steps, you've uploaded a package to PyPI. Pat yourself on the back and go tell everybody they can pip install your package.

Some useful reading

Some pages I found useful:

- A great tutorial, except that it forgets to mention signing up for an account: Python Packaging with GitHub

- Another good tutorial: First time with PyPI

- Allowed PyPI classifiers: the categories your project fits into. Unfortunately there aren't very many of those, so you'll probably be stuck with 'Topic :: Utilities' and not much else.

- Python Packages and You: not a tutorial, but a lot of good advice on style and designing good packages.

Tags: programming, python, open source

16:19 Dec 17, 2016

Sun, 11 Dec 2016

I write lots of Python scripts that I think would be useful to other

people, but I've put off learning how to submit to the Python Package Index,

PyPI, so that my packages can be installed using pip install.

Now that I've finally done it, I see why I put it off for so long.

Unlike programming in Python, packaging is a huge, poorly documented hassle, and it took me days to get a working package. Maybe some of the hints here will help other struggling Pythonistas.

Create a setup.py

The setup.py file is the file that describes the files in your

project and other installation information.

If you've never created a setup.py before,

Submitting a Python package with GitHub and PyPI

has a decent example, and you can find lots more good examples with a

web search for "setup.py", so I'll skip the basics and just mention

some of the parts that weren't straightforward.

Distutils vs. Setuptools

However, there's one confusing point that no one seems to mention.

setup.py examples all rely on a predefined function called setup, but some examples start with

```python
from distutils.core import setup
```

while others start with

```python
from setuptools import setup
```

In other words, there are two different versions of setup!

What's the difference? I still have no idea. The setuptools version seems to be a bit more advanced, and I found that when using distutils.core, I'd sometimes get weird errors when trying to follow suggestions I found on the web. So I ended up using the setuptools version.

But I didn't initially have setuptools installed (it's not part of the standard Python distribution), so I installed it from the Debian package:

```sh
apt-get install python-setuptools python-wheel
```

The python-wheel package isn't strictly needed, but I found I got assorted warnings from pip install later in the process ("Cannot build wheel") unless I installed it, so I recommend you install it from the start.

Including scripts

setup.py has a scripts option to include scripts that are part of your package:

```python
    scripts=['script1', 'script2'],
```

But when I tried to use it, I had all sorts of problems, starting with

scripts not actually being included in the source distribution. There

isn't much support for using scripts -- it turns out

you're actually supposed to use something called

console_scripts, which is more elaborate.

First, you can't use a separate script file, or even a `__main__` block inside an existing module file. You must have a function, typically called main(), so you'll typically have this:

```python
def main():
    # do your script stuff
    pass

if __name__ == "__main__":
    main()
```

Then add something like this to your setup.py:

```python
    entry_points={
        'console_scripts': [
            'script1=yourpackage.filename:main',
            'script2=yourpackage.filename2:main',
        ],
    },
```
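Each string in that list has the form name=module:function. When the package is installed, the installer generates a small executable called name that imports the module, calls the function, and uses its return value as the exit status. A rough sketch of just the lookup part, using json.tool from the standard library so it runs anywhere; resolve_entry_point() is an illustrative helper, not part of setuptools:

```python
import importlib


def resolve_entry_point(spec):
    """Split a console_scripts entry like 'name=package.module:func'
    and return (name, the callable it refers to)."""
    name, target = (part.strip() for part in spec.split("=", 1))
    module_name, _, attr = target.partition(":")
    obj = importlib.import_module(module_name)
    # The attribute part may be dotted, e.g. 'module:Class.method'.
    for part in attr.split("."):
        obj = getattr(obj, part)
    return name, obj

# Example: resolve_entry_point("jsontool = json.tool:main")
# returns ("jsontool", json.tool.main).
```

A quick way to catch a typo in your entry_points before uploading is to run each string through something like this and make sure it resolves.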

There's a secret undocumented alternative that a few people use

for scripts with graphical user interfaces: use 'gui_scripts' rather

than 'console_scripts'. It seems to work when I try it, but the fact

that it's not documented and none of the Python experts even seem to

know about it scared me off, and I stuck with 'console_scripts'.

Including data files

One of my packages, pytopo, has a couple of files it needs to install,

like an icon image. setup.py has a provision for that:

```python
    data_files=[('/usr/share/pixmaps', ["resources/appname.png"]),
                ('/usr/share/applications', ["resources/appname.desktop"]),
                ('/usr/share/appname', ["resources/pin.png"]),
               ],
```

Great -- except it doesn't work. None of the files actually gets added

to the source distribution.

One solution people mention to a "files not getting added" problem is

to create an explicit MANIFEST file listing all files that need to be

in the distribution. Normally, setup generates the MANIFEST automatically,

but apparently it isn't smart enough to notice data_files

and include those in its generated MANIFEST.

I tried creating a MANIFEST listing all the .py files plus

the various resources -- but it didn't make any difference. My

MANIFEST was ignored.

The solution turned out to be creating a MANIFEST.in file, which is

used to generate a MANIFEST. It's easier than creating the MANIFEST

itself: you don't have to list every file, just patterns that describe

them:

```
include setup.py
include packagename/*.py
include resources/*
```

If you have any scripts that don't use the extension .py,

don't forget to include them as well. This may have been why

scripts= didn't work for me earlier, but by the time

I found out about MANIFEST.in I had already switched to using

console_scripts.

Testing setup.py

Once you have a setup.py, use it to generate a source distribution with:

```sh
python setup.py sdist
```

(You can also use bdist to generate a binary distribution, but you'll

probably only need that if you're compiling C as part of your package.

Source dists are apparently enough for pure Python packages.)

Your package will end up in dist/packagename-version.tar.gz, so you can use

```sh
tar tf dist/packagename-version.tar.gz
```

to verify what files are in it. Work on your setup.py until you don't get any errors or warnings and the list of files looks right.
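If you rebuild often, the "does the list look right" step can be automated with the standard tarfile module. A sketch; sdist_contents() and missing_files() are hypothetical helpers, and the expected paths you pass in (such as resources/pin.png) are whatever your MANIFEST.in is supposed to include:

```python
import tarfile


def sdist_contents(path):
    """Return the sorted member names of a source tarball, like `tar tf`."""
    with tarfile.open(path) as tar:
        return sorted(tar.getnames())


def missing_files(path, expected):
    """Return the expected relative paths (e.g. 'resources/pin.png') that
    are not in the tarball. An sdist puts everything under a top-level
    packagename-version/ directory, which is stripped before comparing."""
    names = {name.split("/", 1)[1]
             for name in sdist_contents(path) if "/" in name}
    return sorted(set(expected) - names)

# Usage:
#   missing_files("dist/packagename-version.tar.gz",
#                 ["setup.py", "resources/appname.png", "resources/pin.png"])
# An empty list means everything you listed made it into the package.
```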

Congratulations -- you've made a Python package!

I'll post a followup article in a day or two about more ways of testing,

and how to submit your working package to PyPI.

Update: Part II is up:

Distributing Python Packages Part II: Submitting to PyPI.

Tags: programming, python, open source

12:54 Dec 11, 2016
