Shallow Thoughts : tags : photography

Akkana's Musings on Open Source Computing and Technology, Science, and Nature.

Sat, 13 Apr 2024

![[Viewing the eclipse from 19 Mile Crossing, TX]](https://shallowsky.com/blog/images/eclipse2024/PXL_20240408_174051739.jpg) I'm sorry, but I have no eclipse photos to share. I messed that up.

But I did get to see totality.

For the April 8, 2024 eclipse, Dave and I committed early to Texas. Seemed like that was where the best long-range forecasts were.

In the last week before the eclipse, the forecasts were no longer looking so good. But I've heard so many stories of people driving around trying to chase holes in the clouds, only to be skunked, while people who stayed put got a better view. We decided to stick with our plan, which was to stay in San Angelo (some 190 miles off the centerline) the night before, get up fairly early, and drive to somewhere near the centerline.

Tags: eclipse, astronomy, photography

[ 12:36 Apr 13, 2024 | More science/astro | permalink to this entry ]

Mon, 20 Nov 2023

![[Map of GPS from Pixel 6a photos compared with actual positions]](https://shallowsky.com/blog/images/screenshots/pixelphotos/kimberlyT.jpg)

I've been relying more on my phone for photos I take while hiking, rather than carrying a separate camera. The Pixel 6a takes reasonably good photos, if you can put up with the wildly excessive processing Google's camera app does whether you want it or not.

That opens the possibility of GPS tagging photos, so I'd have a good record of where on the trail each photo was taken. But as it turns out: no. It seems the GPS coordinates the Pixel's camera app records in photos are always wrong, by a significant amount.

And, weirdly, this doesn't seem to be something anyone's talking about on the web ... or am I just using the wrong search terms?
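To put a number on "wrong by a significant amount," one approach is to compare a photo's recorded coordinates with where it was actually taken (say, a point read off a separately recorded GPS track) and compute the great-circle distance between the two. Here's a minimal Python sketch using the haversine formula. It assumes coordinates in decimal degrees and treats the Earth as a sphere of radius 6,371 km; reading the coordinates out of the photos is not shown, and the function name and sample coordinates are hypothetical, not taken from the original analysis.

```python
import math

# Mean Earth radius in meters. Treating the Earth as a sphere is an
# approximation, but it's good to well under one percent for short distances.
EARTH_RADIUS_M = 6_371_000


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# Hypothetical coordinates: where a photo's metadata says it was taken vs.
# where it was actually taken (0.001 degree of latitude apart).
photo_pos = (35.8300, -106.2100)
actual_pos = (35.8310, -106.2100)
print(f"offset: {haversine_m(*photo_pos, *actual_pos):.0f} m")  # offset: 111 m
```

Running this over every photo on a hike, rather than one pair, would show whether the offset is a consistent shift or random scatter.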

Tags: mapping, GIS, cellphone, google, programming, python, photography, 30DayMapChallenge

[ 19:09 Nov 20, 2023 | More mapping | permalink to this entry ]

Mon, 17 Jul 2023

![[Clouds and mesa shadows from Anderson Overlook]](https://shallowsky.com/blog/images/clouds/PXL_20230514_235319715T.jpg) While driving back down the hill after an appointment, I had to stop at Anderson Overlook to snap a few photos of the clouds and their shadows on the mesa.

In a Robert B. Parker novel I read many years ago, a character, musing on the view from a rich guy's house, comments, "I hear that after a while, it's just what you see out the window." Dave and I make fun of that line all the time.

Maybe it's true in Boston, but in New Mexico, I never get tired of the view and the endlessly changing light and shadow. I know people who have lived here fifty years or more and still aren't tired of it.

Tags: nature, photography

[ 20:12 Jul 17, 2023 | More photo | permalink to this entry ]

Sat, 20 May 2023

![[Big snake kite]](https://shallowsky.com/images/kitefest2023/PXL_20230520_192022104T.jpg)

The weather is great for this year's Kite Festival, going on right now at Overlook Park. It's a little hazy, but there's a good wind, plenty to keep the kids' small kites aloft, though the big, fancy kites were struggling a little.

Continuing through Sunday night; if you're in the area, go take a look!

A few photos: White Rock Kite Festival 2023.

Tags: photography, kites

[ 15:43 May 20, 2023 | More misc | permalink to this entry ]

Sun, 04 Dec 2022

![[Door Bunny]](https://shallowsky.com/blog/images/humor/door-rabbit.jpg) I've been down for a week with the Flu from Hell that's going around.

We think it's flu because the symptoms match, and because I got knocked out by it while Dave caught a much milder case -- and Dave got the double-dose-for-seniors flu shot, while I only got the regular-for-younger-folks shot. (Our COVID tests are negative, and there's no anosmia or breathing impairment.)

So I haven't been getting much done lately, nor writing blog articles. But I'm feeling a bit better now. While I recover, here's something from a few months ago: our annual autumn visit from the Door Bunny.

Tags: humor, photography

[ 08:27 Dec 04, 2022 | More humor | permalink to this entry ]

Wed, 19 Aug 2020

![[Maybe a New Mexico Whiptail]](https://shallowsky.com/nature/reptiles/whiptails/img_2714sm.jpg) Late summer is whiptail season. Whiptails are long, slender, extremely fast lizards with (as you might expect) especially long tails. They emerge from hibernation at least a month later than the fence lizards, but once they're awake, they're everywhere.

In addition to being pretty to look at, fun to watch as they hit the afterburner and streak across the yard, and challenging to photograph since they seldom sit still for long, they're interesting for several reasons.

Tags: nature, lizard, photography

[ 19:56 Aug 19, 2020 | More nature | permalink to this entry ]

Sat, 25 Jul 2020

![[Comet Neowise and Starlink Satellites]](https://shallowsky.com/images/starlink/img_4003sl.jpg) Monday was the last night it's been clear enough to see Comet Neowise. I shot some photos with the Rebel, but I haven't quite figured out the alignment and stacking needed for decent astrophotos, so I don't have much to show. I can't even see the ion tail.

The interesting thing about Monday, besides just getting to see the comet, was the never-ending train of satellites.

Tags: astronomy, science, photography

[ 20:27 Jul 25, 2020 | More science/astro | permalink to this entry ]

Sun, 27 Aug 2017

![[2017 Solar eclipse with corona]](http://shallowsky.com/images/eclipse2017/img_1505.jpg) My first total eclipse! The suspense had been building for years.

Dave and I were in Wyoming. We'd made a hotel reservation nine months ago, by which time we were already too late to book a room in the zone of totality, so we settled for Laramie, a few hours' drive from the centerline.

For visual observing, I had my little portable 80mm refractor. But photography was more complicated. I'd promised myself that for my first (and possibly only) total eclipse, I wasn't going to miss the experience because I was spending too much time fiddling with cameras. But I couldn't talk myself into not trying any photography at all.

Initially, my plan was to use my 90mm Mak as a 500mm camera lens. It had worked okay for the 2012 Venus transit.

![[Homemade solar finder for telescope]](http://shallowsky.com/blog/images/solar-finder/img_1448.jpg) I spent several weeks before the eclipse in a flurry of creation, making a couple of solar finders, a barn-door mount, and then wrestling with motorizing the barn-door (which was a failure because I couldn't find a place to buy decent gears for the motor. I'm still working on that and will eventually write it up.)

I wrote up a plan: what equipment I would use when, a series of progressive exposures for totality, and so forth.

And then, a couple of days before we were due to leave, I figured I should test my rig -- and discovered that it was basically impossible to focus on the sun. For the Venus transit, the sun wasn't that high in the sky, so I focused through the viewfinder. But for the total eclipse, the sun would be almost overhead, and the viewfinder nearly impossible to see. So I had planned to point the Mak at a distant hillside, focus it, then slip the filter on and point it up to the sun. It turned out the focal point was completely different through the filter.

![[Solar finder for DSLR, made from popsicle sticks]](http://shallowsky.com/images/eclipse2017/img_7792T.jpg) With only a couple of days left to go, I revised my plan. The Mak is difficult to focus under any circumstances. I decided not to use it, and to stick to my Canon 55-250mm zoom telephoto, with the camera on a normal tripod. I'd skip the partial eclipse (I've photographed those before anyway) and concentrate on getting a few shots of the diamond ring and the corona, running through a range of exposures without needing to look at the camera screen or do any refocusing. And since I wasn't going to be using a telescope, my nifty solar finders wouldn't work; I designed a new one out of popsicle sticks to fit in the camera's hot shoe.

Getting there

We stayed with relatives in Colorado Saturday night, then drove to Laramie Sunday. I'd heard horror stories of hotels canceling people's longstanding eclipse reservations, but fortunately our hotel honored our reservation. WHEW! Monday morning, we left the hotel at 6am in case we hit terrible traffic. There was already plenty of traffic on the highway north to Casper, but we turned east hoping for fewer crowds. A road sign said "NO PARKING ON HIGHWAY." They'd better not try to enforce that in the totality zone!

![[Our eclipse viewing pullout on Wyoming 270]](http://shallowsky.com/images/eclipse2017/img_7726T.jpg) When we got to I-25 it was moving and, oddly enough, not particularly crowded. Glendo Reservoir had looked on the map like a nice spot on the centerline ... but it was also a state park, so there was a risk that everyone else would want to go there. Sure enough: although traffic was moving on I-25 at Wheatland, a few miles north the freeway came to a screeching halt. We backtracked and headed east toward Guernsey, where several highways went north toward the centerline.

East of Glendo, there were crowds at every highway pullout and rest stop. As we turned onto 270 and started north, I kept an eye on OsmAnd on my phone, where I'd loaded a GPX file of the eclipse path. When we were within a mile of the centerline, we stopped at a likely-looking pullout. It was maybe 9 am. A cool wind was blowing -- very pleasant since we were expecting a hot day -- and we got acquainted with our fellow eclipse watchers as we waited for first contact.

Our pullout was also the beginning of a driveway to a farmhouse we could see in the distance. Periodically people pulled up, looking lost, checked maps or GPS, then headed down the road to the farm. Apparently the owners had advertised it as an eclipse spot -- pay $35, and you can see the eclipse and have access to a restroom too! But the old farmhouse's plumbing seems to have failed early on, and some of the people who'd paid came out to the road to watch with us, since we had better equipment set up.

![[Terrible afocal view of partial eclipse]](http://shallowsky.com/images/eclipse2017/img_1491T.jpg) There's not much to say about the partial eclipse. We all traded views -- there were five or six scopes at our pullout, including a nice little H-alpha scope. I snapped an occasional photo through the 80mm with my pocket camera held to the eyepiece, or with the DSLR through an eyepiece projection adapter. Oddly, the DSLR photos came out worse than the pocket cam ones. I guess I should try and debug that at some point.

Shortly before totality, I set up the DSLR on the tripod, focused on a distant hillside and taped the focus with duct tape, plugged in the shutter remote, checked the settings in Manual mode, then set the camera to Program mode and AEB (auto exposure bracketing). I put the lens cap back on and pointed the camera toward the sun using the popsicle-stick solar finder. I also set a countdown timer, so I could press START when totality began and it would beep to warn me when it was time for the sun to come back out. It was getting chilly by then, with the sun down to a sliver, and we put on sweaters.

The pair of eclipse veterans at our pullout had told everybody to watch for the moon's shadow racing toward us across the hills from the west. But I didn't see the racing shadow, nor any shadow bands. And then Venus and Mercury appeared and the sun went away.

Totality

![[Solar eclipse diamond ring]](http://shallowsky.com/images/eclipse2017/img_1499c.jpg) One thing the photos don't prepare you for is the color of the sky. I expected it would look like twilight, maybe a little darker; but it was an eerie, beautiful medium slate blue. With that unworldly solar corona in the middle of it, and Venus gleaming as bright as you've ever seen it, and Mercury shining bright on the other side. There weren't many stars.

We didn't see birds doing anything unusual; as far as I can tell, there are no birds in this part of Wyoming. But the cows did all get in a line and start walking somewhere. Or so Dave tells me. I wasn't looking at the cows.

Amazingly, I remembered to start my timer and to pull off the DSLR's lens cap as I pushed the shutter button for the diamond-ring shots without taking my eyes off the spectacle high above. I turned the camera off and back on (to cancel AEB), switched to M mode, and snapped a photo while I scuttled over to the telescope, pulled the filter off and took a look at the corona in the wide-field eyepiece. So beautiful! Binoculars, telescope, naked eye -- I don't know which view was best.

I went through my exposure sequence on the camera, turning the dial a couple of clicks each time without looking at the settings, keeping my eyes on the sky or the telescope eyepiece. But at some point I happened to glance at the viewfinder -- and discovered that the sun was drifting out of the frame. Adjusting the tripod to get it back in the frame took longer than I wanted, but I got it there and got my eyes back on the sun as I snapped another photo ...

and my timer beeped.

I must have set it wrong! It couldn't possibly have been two and a half minutes. It had been 30, 45 seconds tops.

But I nudged the telescope away from the sun, and looked back up -- to another diamond ring. Totality really was ending and it was time to stop looking.

Getting Out

The trip back to Golden, where we were staying with a relative, was hellish. We packed up immediately after totality -- we figured we'd seen partials before, and maybe everybody else would stay. No such luck. By the time we got all the equipment packed there was already a steady stream of cars heading south on 270.

A few miles north of Guernsey the traffic came to a stop. This was to be the theme of the afternoon. Every small town in Wyoming has a stop sign or signal, and that caused backups for miles in both directions. We headed east, away from Denver, to take rural roads down through eastern Wyoming and Colorado rather than I-25, but even so, we hit small-town stop sign backups every five or ten miles.

We'd brought the Rav4 partly for this reason. I kept my eyes glued on OsmAnd and we took dirt roads when we could, skirting the paved highways -- but mostly there weren't any dirt roads going where we needed to go. It took about 7 hours to get back to Golden, about twice as long as it should have taken. And we should probably count ourselves lucky -- I've heard from other people who took 11 hours to get to Denver via other routes.

Lessons Learned

Dave is fond of the quote, "No battle plan survives contact with the enemy" (which turns out to be from Prussian military strategist Helmuth von Moltke the Elder).

The enemy, in this case, isn't the eclipse; it's time. Two and a half minutes sounds like a lot, but it goes by like nothing.

Even in my drastically scaled-down plan, I had intended exposures from 1/2000 to 2 seconds (at f/5.6 and ISO 400). In practice, I only made it to 1/320 because of fiddling with the tripod.
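The stop arithmetic makes that shortfall concrete: each stop doubles the exposure time, so the number of stops between two shutter speeds is the base-2 logarithm of their ratio. A small Python sketch using only the shutter speeds quoted above (the helper name is mine, for illustration):

```python
import math


def stops_between(t_fast, t_slow):
    """Photographic stops between two shutter speeds given in seconds.

    Each stop doubles the exposure time, so stops = log2(t_slow / t_fast).
    """
    return math.log2(t_slow / t_fast)


planned = stops_between(1 / 2000, 2)        # planned: 1/2000 s up to 2 s
reached = stops_between(1 / 2000, 1 / 320)  # actually reached: 1/320 s

print(f"planned range: {planned:.1f} stops")
print(f"reached: {reached:.1f} stops ({reached / planned:.0%} of the plan)")
# Output:
# planned range: 12.0 stops
# reached: 2.6 stops (22% of the plan)
```

That leaves about nine of the planned twelve stops unshot, all at the slow end of the range.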

And that's okay. I'm thrilled with the photos I got, and definitely wouldn't have traded any eyeball time for more photos. I'm more annoyed that the tripod fiddling time made me miss a little bit of extra looking.

My script actually worked out better than I expected, and I was very glad I'd done the preparation I had. The script was reasonable, the solar finders worked really well, and the lens was even in focus for the totality shots.

Then there's the eclipse itself.

I've read so many articles about solar eclipses as a mystical, religious experience. It wasn't, for me. It was just an eerily beautiful, other-worldly spectacle: that ring of cold fire staring down from the slate blue sky, bright planets but no stars, everything strange, like nothing I'd ever seen. Photos don't get across what it's like to be standing there under that weird thing in the sky.

I'm not going to drop everything to become a globe-trotting eclipse chaser ... but I sure hope I get to see another one some day.

Photos: 2017 August 21 Total Solar Eclipse in Wyoming.

Tags: eclipse, astronomy, photography

[ 20:41 Aug 27, 2017 | More science/astro | permalink to this entry ]

Thu, 20 Apr 2017

![[House on Fire ruin, Mule Canyon UT]](http://shallowsky.com/images/bluff2017/mulecyn/img_7409.jpg) Last week, my hiking group had its annual trip, which this year was to Bluff, Utah, near Comb Ridge and Cedar Mesa, an area particularly known for its Anasazi ruins and petroglyphs.

(I'm aware that "Anasazi" is considered a politically incorrect term these days, though it still seems to be in common use in Utah; it isn't in New Mexico. My view is that I can understand why Pueblo people dislike hearing their ancestors referred to by a term that means something like "ancient enemies" in Navajo; but if they want everyone to switch from using a mellifluous and easy to pronounce word like "Anasazi", they ought to come up with a better, and shorter, replacement than "Ancestral Puebloans." I mean, really.)

The photo at right is probably the most photogenic of the ruins I saw. It's in Mule Canyon, on Cedar Mesa, and it's called "House on Fire" because of the colors in the rock when the light is right.

The light was not right when we encountered it, in late morning around 10 am; but fortunately, we were doing an out-and-back hike. Someone in our group had said that the best light came when sunlight reflected off the red rock below the ruin up onto the rock above it, an effect I've seen in other places, most notably Bryce Canyon, where the hoodoos look positively radiant when seen backlit, because that's when the most reflected light adds to the reds and oranges in the rock.

Sure enough, when we got back to House on Fire at 1:30 pm, the light was much better. It wasn't completely obvious to the eye, but comparing the photos afterward, the difference is impressive: Changing light on House on Fire Ruin.

![[Brain man? petroglyph at Sand Island]](http://shallowsky.com/images/bluff2017/butlerwash/img_7301.jpg) The weather was almost perfect for our trip, except for one overly hot afternoon on Wednesday.

And the hikes were fairly perfect, too -- fantastic ruins you can see up close, huge petroglyph panels with hundreds of different creatures and patterns (and some that could only have been science fiction, like brain-man at left), sweeping views of canyons and slickrock, and the geology of Comb Ridge and the Monument Upwarp.

And in case you read my last article, on translucent windows, and are wondering how those generated waypoints worked: they were terrific, and in some cases made the difference between finding a ruin and wandering lost on the slickrock. I wish I'd had that years ago.

Most of what I have to say about the trip is already in the comments to the photos, so I'll just link to the photo page: Photos: Bluff trip, 2017.

Tags: travel, photography

[ 19:28 Apr 20, 2017 | More travel | permalink to this entry ]

Sun, 08 Jan 2017

![[Snowy view of the Rio Grande from Overlook]](http://shallowsky.com/images/snow-lameelk/img_0856.jpg)

The snowy days here have been so pretty, the snow contrasting with the darkness of the piñons and junipers and the black basalt. The light fluffy crystals sparkle in a rainbow of colors when they catch the sunlight at the right angle, but I've been unable to catch that effect in a photo.

We've had some unusual holiday visitors, too, culminating in this morning's visit from a huge bull elk.

![[bull elk in the yard]](http://shallowsky.com/images/snow-lameelk/bullelk.jpg) Dave came down to make coffee and saw the elk in the garden right next to the window. But by the time I saw him, he was farther out in the yard. And my DSLR batteries were dead, so I grabbed the point-and-shoot and got what I could through the window.

Fortunately for my photography, the elk wasn't going anywhere in any hurry. He has an injured leg, and was limping badly. He slowly made his way down the hill and into the neighbors' yard.

I hope he returns. Even with a limp that bad, an elk that size has no predators in White Rock, so as long as he stays off the nearby San Ildefonso reservation (where hunting is allowed) and manages to find enough food, he should be all right. I'm tempted to buy some hay to leave out for him.

![[Sunset light on the Sangre de Cristos]](http://shallowsky.com/images/snow-lameelk/img_0851T.jpg) Some of the sunsets have been pretty nice, too.

A few more photos.

Tags: nature, photography

[ 19:48 Jan 08, 2017 | More photo | permalink to this entry ]

Sun, 25 Dec 2016

Excellent Xmas to all!

We're having a white Xmas here.

Dave and I have been discussing how "Merry Christmas" isn't alliterative like "Happy Holidays". We had trouble coming up with a good C or K adjective to go with Christmas, but then we hit on the answer: Have an Excellent Xmas! It also has the advantage of inclusivity: not everyone celebrates the birth of Christ, but Xmas is a secular holiday of lights, family and gifts, open to people of all belief systems.

Meanwhile: I spent a couple of nights recently learning how to photograph Xmas lights and farolitos.

Farolitos, a New Mexico Christmas tradition, are paper bags, weighted down with sand, with a candle inside. Sounds modest, but put a row of them alongside a roadway or along the top of a typical New Mexican adobe or faux-dobe and you have a beautiful display of lights.

They're also known as luminarias in southern New Mexico, but Northern New Mexicans insist that a luminaria is a bonfire, and the little paper bag lanterns should be called farolitos.

They're pretty, whatever you call them.

Locally, residents of several streets in Los Alamos and White Rock set out farolitos along their roadsides for a few nights around Christmas, and the county cooperates by turning off streetlights on those streets. The display on Los Pueblos in Los Alamos is a zoo, a slow exhaust-choked parade of cars that reminds me of the Griffith Park light show in LA. But here in White Rock the farolito displays are a lot less crowded, and this year I wanted to try photographing them.

Canon bugs affecting night photography

I have a little past experience with night photography. I went through a brief astrophotography phase in my teens (in the pre-digital era, so I was using film and occasionally glass plates). But I haven't done much night photography for years.

That's partly because I've had problems taking night shots with my current digital SLR camera, a Rebel XSi (known outside the US as a Canon 450D). It's old and modest as DSLRs go, but I've resisted upgrading since I don't really need more features.

Except maybe when it comes to night photography. I've tried shooting star trails, lightning shots and other nocturnal time exposures, and keep hitting a snag: the camera refuses to take a photo. I'll be in Manual mode, with my aperture and shutter speed set, with the lens in Manual Focus mode with Image Stabilization turned off. Plug in the remote shutter release, push the button ... and nothing happens except a lot of motorized lens whirring noises. Which shouldn't be happening -- in MF and non-IS mode the lens should be just sitting there inert, not whirring its motors. I couldn't seem to find a way to convince it that the MF switch meant that, yes, I wanted to focus manually.

It seemed to be primarily a problem with the EF-S 18-55mm kit lens; the camera will usually condescend to take a night photo with my other two lenses. I wondered if the MF switch might be broken, but then I noticed that in some modes the camera explicitly told me I was in manual focus mode.

I was almost to the point of ordering another lens just for night shots when I finally hit upon the right search terms and found, if not the reason it's happening, at least an excellent workaround.

Back Button Focus

I'm so sad that I went so many years without knowing about Back Button Focus.

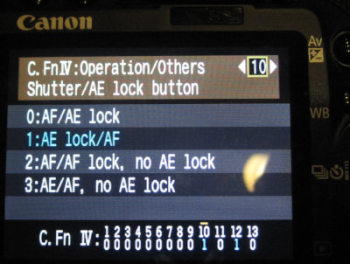

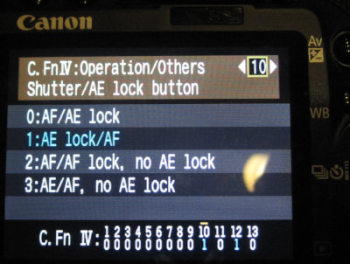

It's well hidden in the menus, under Custom Functions #10.

Normally, the shutter button does a bunch of things. When you press it halfway, the camera both autofocuses (sadly, even in manual focus mode) and calculates exposure settings.

But there's a custom function that lets you separate the focus and exposure calculations. In the Custom Functions menu option #10 (the number and exact text will be different on different Canon models, but apparently most or all Canon DSLRs have this somewhere), the heading says: Shutter/AE Lock Button.

Following that is a list of four obscure-looking options:

- AF/AE lock

- AE lock/AF

- AF/AF lock, no AE lock

- AE/AF, no AE lock

The text before the slash indicates what the shutter button, pressed halfway, will do in that mode; the text after the slash is what happens when you press the * or AE lock button on the upper right of the camera back (the same button you use to zoom out when reviewing pictures on the LCD screen).

The first option is the default: press the shutter button halfway to activate autofocus; the AE lock button calculates and locks exposure settings.

The second option is the revelation: pressing the shutter button halfway will calculate exposure settings, but does nothing for focus. To focus, press the * or AE button, after which focus will be locked. Pressing the shutter button won't refocus. This mode is called "Back button focus" all over the web, but not in the manual.

Back button focus is useful in all sorts of cases. For instance, if you want to autofocus once then keep the same focus for subsequent shots, it gives you a way of doing that.

It also solves my night focus problem: whatever the bug is (whether it's in the lens or the camera) that makes the lens try to autofocus even in manual focus mode, pressing the shutter in this mode won't trigger it. The camera assumes it's in focus and goes ahead and takes the picture.

Incidentally, the other two modes in that menu apply to AI SERVO mode, when you're letting the focus change constantly as it follows a moving subject. The third mode makes the * button lock focus and stop adjusting it; the fourth lets you toggle focus-adjusting on and off.

Live View Focusing

There's one other thing that's crucial for night shots: live view

focusing. Since you can't use autofocus in low light, you have to do

the focusing yourself. But most DSLR's focusing screens aren't good

enough that you can look through the viewfinder and get a reliable

focus on a star or even a string of holiday lights or farolitos.

Instead, press the SET button (the one in the middle of the

right/left/up/down buttons) to activate Live View (you may have to

enable it in the menus first). The mirror locks up and a preview of

what the camera is seeing appears on the LCD. Use the zoom button (the

one to the right of that */AE lock button) to zoom in; there are two

levels of zoom in addition to the un-zoomed view. You can use the

right/left/up/down buttons to control which part of the field the

zoomed view will show. Zoom all the way in (two clicks of the +

button) to fine-tune your manual focus. Press SET again to exit

live view.

It's not as good as a fine-grained focusing screen, but at least

it gets you close. Consider using relatively small apertures, like f/8, since the extra depth of field gives you more latitude for focus errors. You'll be doing time exposures on a tripod anyway, so a narrow aperture just means your exposures have to be a little longer than they otherwise would have been.
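A rough way to see why stopping down buys focus latitude is the hyperfocal distance, H = f²/(N·c) + f: focus there and everything from about H/2 to infinity is acceptably sharp, and H shrinks as the f-number N grows. This is a minimal sketch that assumes a circle of confusion c of 0.019 mm, a value commonly quoted for Canon APS-C sensors; the function names are illustrative, not from any library. It also shows the cost: light through the aperture falls off as 1/N², so exposure time grows with the square of the f-number ratio.

```python
def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float = 0.019) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f, everything in millimetres.

    coc_mm (circle of confusion) defaults to 0.019 mm, an assumed value
    often used for Canon APS-C cameras.
    """
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def exposure_scale(old_f_number: float, new_f_number: float) -> float:
    """How many times longer the exposure must be after stopping down.

    Light through the aperture is proportional to 1/N^2.
    """
    return (new_f_number / old_f_number) ** 2


if __name__ == "__main__":
    for n in (3.5, 5.6, 8):
        print(f"18mm at f/{n}: hyperfocal ~ {hyperfocal_mm(18, n) / 1000:.1f} m")
    print(f"f/4 -> f/8 needs {exposure_scale(4, 8):.0f}x the exposure time")
```

At 18mm, going from f/3.5 to f/8 more than halves the hyperfocal distance, while going from f/4 to f/8 costs four times the exposure time.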

After all that, my Xmas Eve farolitos photos turned out mediocre.

We had a storm blowing in, so a lot of the candles had blown out.

(In the photo below you can see how the light string on the left

is blurred, because the tree was blowing around so much during the

30-second exposure.)

But I had fun, and maybe I'll go out and try again tonight.

An excellent X-mas to you all!

Tags: photography

12:30 Dec 25, 2016

Mon, 05 Sep 2016

We drove up to Taos today to see the

Earthships.

![[Taos Earthships]](http://shallowsky.com/images/earthships/img_6049.jpg) Earthships are sustainable, completely off-the-grid houses built of adobe and

recycled materials. That was pretty much all I knew about them, except

that they were weird looking; I'd driven by on the highway a few times

(they're on highway 64 just west of the

beautiful Rio

Grande Gorge Bridge) but never stopped and paid the $7 admission

for the self-guided tour.

![[Earthship construction]](http://shallowsky.com/images/earthships/img_6045T.jpg) Seeing them up close was fun. The walls are made of old tires packed

with dirt, then covered with adobe. The result is quite strong, though

like all adobe structures it requires regular maintenance if you don't

want it to melt away. For non-load-bearing walls, they pack adobe

around old recycled bottles or cans.

The houses have a passive solar design, with big windows along one

side that make a greenhouse for growing food and freshening the air,

as well as collecting warmth in cold weather. Solar panels provide

power -- supposedly along with windmills, but I didn't see any

windmills in operation, and the ones they showed in photos looked

too tiny to offer much help. To help make the most of the solar power,

the house is wired for DC, and all the lighting, water pumps and so

forth run off low voltage DC. There's even a special DC refrigerator.

They do include an AC inverter for appliances like televisions and computer

equipment that can't run directly off DC.

Water is supposedly self-sustaining too, though I don't see how that

could work in drought years. As long as there's enough rainfall, water

runs off the roof into a cistern and is used for drinking, bathing etc.,

after which it's run through filters and then pumped into the greenhouse.

Waste water from the greenhouse is used for flushing toilets, after

which it finally goes to the septic tank.

All very cool. We're in a house now that makes us very happy (and has

excellent passive solar, though we do plan to add solar panels and

a greywater system some day) but if I was building a house, I'd be

all over this.

We also discovered an excellent way to get there without getting stuck

in traffic-clogged Taos (it's a lovely town, but you really don't want

to go near there on a holiday, or a weekend ... or any other time when

people might be visiting). There's a road from Pilar that crosses the

Rio Grande then ascends up to the mesa high above the river,

continuing up to highway 64 right near the earthships. We'd been a

little way up that road once, on a petroglyph-viewing hike, but never

all the way through. The map said it was dirt from the Rio all the way

up to 64, and we were in the Corolla, since the Rav4's battery started

misbehaving a few days ago and we haven't replaced it yet.

So we were hesitant. But the nice folks at the Rio Grande Gorge

visitor center at Pilar assured us that the dirt section ended at the

top of the mesa and any car could make it ("it gets bumpy -- a New

Mexico massage! You'll get to the top very relaxed"). They were

right: the Corolla made it with no difficulty and it was a much

faster route than going through Taos.

![[Nice sunset clouds in White Rock]](http://shallowsky.com/images/earthships/20160905_193050c.jpg) We got home just in time for the rouladen I'd left cooking in the

crockpot, and then finished dinner just in time for a great sunset sky.

A few more photos:

Earthships (and a

great sunset).

Tags: misc, photography

21:05 Sep 05, 2016

Tue, 09 Aug 2016

A couple of days ago we had a spectacular afternoon double rainbow.

I was out planting grama grass seeds, hoping to take advantage of

a rainy week, but I cut the planting short to run up and get my camera.

![[Double rainbow]](http://shallowsky.com/images/hummer-rainbow/img_0470-1024.jpg)

![[Hummingbirds and rainbow]](http://shallowsky.com/images/hummer-rainbow/img_0482T.jpg) And then after shooting rainbow shots with the fisheye lens,

it occurred to me that I could switch to the zoom and take some

hummingbird shots with the rainbow in the background. How often

do you get a chance to do that? (Not to mention a great excuse not to

go back to planting grass seeds.)

(Actually, here, it isn't all that uncommon since we get a lot of

afternoon rainbows. But it's the first time I thought of trying it.)

Focus is always chancy when you're standing next to the feeder,

waiting for birds to fly by and shooting whatever you can.

Next time maybe I'll have time to set up a tripod and remote

shutter release. But I was pretty happy with what I got.

Photos:

Double rainbow, with hummingbirds.

Tags: nature, birds, rainbow, photography

19:40 Aug 09, 2016

Tue, 05 Jul 2016

I'll be at Texas LinuxFest in Austin, Texas this weekend.

Friday, July 8 is the big day for open source imaging:

first a morning Photo Walk led by Pat David, from 9-11,

after which Pat, an active GIMP contributor and the driving force

behind the PIXLS.US website and discussion

forums, gives a talk on "Open Source Photography Tools".

Then after lunch I'll give a GIMP tutorial.

We may also have a Graphics Hackathon/Q&A session to discuss

all the open-source graphics tools in the last slot of the day, but

that part is still tentative. I'm hoping we can get some good

discussion especially among the people who go on the photo walk.

Lots of interesting looking talks on Saturday, too. I've never been

to Texas LinuxFest before: it's a short conference, just two days,

but they're packing a lot into those two days, and it looks like

it'll be a lot of fun.

Tags: gimp, conferences, photography

18:37 Jul 05, 2016

Sun, 04 Oct 2015

For the animations

I made from the lunar eclipse last week, the hard part was aligning

all the images so the moon (or, in the case of the moonrise image, the

hillside) was in the same position in every frame.

This is a problem that comes up a lot with astrophotography, where

multiple images are stacked for a variety of reasons: to increase

contrast, to increase detail, or to take an average of a series of images,

as well as animations like I was making this time.

And of course animations can be fun in any context, not just astrophotography.

In the tutorial that follows, clicking on the images will show a full

sized screenshot with more detail.

Load all the images as layers in a single GIMP image

The first thing I did was load up all the images as layers in a single image:

File->Open as Layers..., then navigate to where the images are

and use shift-click to select all the filenames I wanted.

![[Upper layer 50% opaque to align two layers]](http://shallowsky.com/blog/images/screenshots/aligning-eclipse/dim-redT.jpg)

Work on two layers at once

By clicking on the "eyeball" icon in the Layers dialog, I could

adjust which layers were visible. For each pair of layers, I made

the top layer about 50% opaque by dragging the opacity slider (it's

not important that it be exactly at 50%, as long as you can see both

images).

Then use the Move tool to drag the top image on top of the bottom image.

![[]](http://shallowsky.com/blog/images/screenshots/aligning-eclipse/almost-alignedT.jpg)

But it's hard to tell when they're exactly aligned

"Drag the top image on top of the bottom image":

easy to say, hard to do. When the images are dim and red like that,

and half of the image is nearly invisible, it's very hard to tell when

they're exactly aligned.

![[]](http://shallowsky.com/blog/images/screenshots/aligning-eclipse/contrast-filterT.jpg)

Use a Contrast display filter

What helped was a Contrast filter.

View->Display Filters... and in the dialog that pops up,

click on

Contrast, and click on the right arrow to move it to

Active Filters.

The Contrast filter changes the colors so that the dim red moon is fully

visible, and it's much easier to tell when the layers are

approximately on top of each other.

![[]](http://shallowsky.com/blog/images/screenshots/aligning-eclipse/almost-aligned-differenceT.jpg)

Use Difference mode for the final fine-tuning

Even with the Contrast filter, though, it's hard to see when the

images are exactly on top of each other. When you have them within a few

pixels, get rid of the contrast filter (you can keep the dialog up but

disable the filter by un-checking its checkbox in

Active Filters).

Then, in the Layers dialog, slide the top layer's Opacity back to 100%,

go to the

Mode selector and set the layer's mode to

Difference.

In Difference mode, you only see differences between the two layers.

So if your alignment is off by a few pixels, it'll be much easier to see.

Even in a case like an eclipse where the moon's appearance is changing

from frame to frame as the earth's shadow moves across it, you can still

get the best alignment by making the Difference between the two layers

as small as you can.

Use the Move tool and the keyboard: left, right, up and down arrows move

your layer by one pixel at a time. Pick a direction, hit the arrow key

a couple of times and see how the difference changes. If it got bigger,

use the opposite arrow key to go back the other way.

When you get to where there's almost no difference between the two layers,

you're done. Change Mode back to Normal, make sure Opacity is at 100%,

then move on to the next layer in the stack.
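The Difference-mode procedure is effectively a hand-driven search: nudge the top layer one pixel at a time and keep any move that makes the difference smaller. Here is a minimal pure-Python sketch of that same greedy search, assuming both frames are same-sized grayscale images stored as lists of rows; the helper names are made up for illustration, and this is not a GIMP feature. Like the manual method, it can get stuck in a local minimum if the starting offset is far off, which is why the rough alignment with the Contrast filter still matters.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # grayscale pixel values, indexed image[y][x]


def mean_abs_difference(bottom: Image, top: Image, dx: int, dy: int) -> float:
    """Mean |bottom - top| over the overlap when top is shifted by (dx, dy).

    A numeric stand-in for eyeballing GIMP's Difference mode.
    Both images are assumed to be the same size.
    """
    h, w = len(bottom), len(bottom[0])
    total, count = 0.0, 0
    for y in range(h):
        ty = y - dy  # row of top that lands on row y of bottom
        if not 0 <= ty < h:
            continue
        for x in range(w):
            tx = x - dx
            if 0 <= tx < w:
                total += abs(bottom[y][x] - top[ty][tx])
                count += 1
    return total / count if count else math.inf


def align_by_nudging(bottom: Image, top: Image,
                     max_steps: int = 200) -> Tuple[int, int, float]:
    """Greedy one-pixel nudges (like the arrow keys) until no move helps."""
    dx = dy = 0
    best = mean_abs_difference(bottom, top, dx, dy)
    for _ in range(max_steps):
        improved = False
        for step_x, step_y in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            score = mean_abs_difference(bottom, top, dx + step_x, dy + step_y)
            if score < best:  # keep the nudge only if the difference shrank
                best, dx, dy = score, dx + step_x, dy + step_y
                improved = True
        if not improved:
            break
    return dx, dy, best


def cone(cx: float, cy: float, size: int = 20, radius: float = 5.0) -> Image:
    """Synthetic test frame: a bright cone-shaped 'moon' centred at (cx, cy)."""
    return [[max(0.0, radius - math.hypot(x - cx, y - cy)) for x in range(size)]
            for y in range(size)]


if __name__ == "__main__":
    bottom = cone(10, 10)
    top = cone(8, 9)  # same disc, displaced by (-2, -1)
    print(align_by_nudging(bottom, top))
```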

It's still a lot of work. I'd love to find a program that looks for

circular or partially-circular shapes in successive images and does

the alignment automatically. Someone on GIMP suggested I might be

able to write something using OpenCV, which has circle-finding

primitives (I've written briefly before about

SimpleCV,

a wrapper that makes OpenCV easy to use from Python).

But doing the alignment by hand in GIMP, while somewhat tedious,

didn't take as long as I expected once I got the hang of using the

Contrast display filter along with Opacity and Difference mode.

Creating the animation

Once you have your layers, how do you turn them into an animation?

The obvious solution, which I originally intended to use, is to save

as GIF and check the "animated" box. I tried that -- and discovered

that the color errors you get when converting an image to indexed make

a beautiful red lunar eclipse look absolutely awful.

So I threw together a Javascript script to animate images by loading

a series of JPEGs. That meant that I needed to export all the layers

from my GIMP image to separate JPG files.

GIMP doesn't have a built-in way to export all of an image's layers to

separate new images. But that's an easy plug-in to write, and a web

search found lots of plug-ins already written to do that job.

The one I ended up using was Lie Ryan's Python script in

How

to save different layers of a design in separate files;

though a couple of others looked promising (I didn't try them), such as

gimp-plugin-export-layers

and

save_all_layers.scm.

You can see the final animation here:

Lunar eclipse of

September 27, 2015: Animations.

Tags: gimp, photography, astronomy

09:44 Oct 04, 2015

Tue, 03 Feb 2015

![[Roof glacier as it slides off the roof]](http://shallowsky.com/blog/images/snow/img_1899.jpg) A few days ago, I wrote about

the snowpack we

get on the roof during snowstorms:

It doesn't just sit there until it gets warm enough to melt and run

off as water. Instead, the whole mass of snow moves together,

gradually, down the metal roof, like a glacier.

When it gets to the edge, it still doesn't fall; it somehow stays

intact, curling over and inward, until the mass is too great and it

loses cohesion and a clump falls with a Clunk!

The day after I posted that, I had a chance to see what happens as the

snow sheet slides off a roof if it doesn't have a long distance

to fall. It folds gracefully and gradually, like a sheet.

![[Underside of a roof glacier]](http://shallowsky.com/blog/images/snow/img_1901.jpg)

![[Underside of a roof glacier]](http://shallowsky.com/blog/images/snow/img_1887.jpg) The underside as they slide off the roof is pretty interesting, too,

with varied shapes and patterns in addition to the imprinted pattern

of the roof.

But does it really move like a glacier? I decided to set up a camera

and film it on the move. I set the Rebel on a tripod with an AC power

adaptor, pointed it out the window at a section of roof with a good

snow load, plugged in the intervalometer I bought last summer, located

the manual to re-learn how to program it, and set it for a 30-second

interval. I ran that way for a bit over an hour -- long enough that

one section of ice had detached and fallen and a new section was

starting to slide down. Then I moved to another window and shot a series

of the same section of snow from underneath, with a 40-second interval.

I uploaded the photos to my workstation and verified that they'd

captured what I wanted. But when I stitched them into a movie, the

way I'd used for my

time-lapse

clouds last summer, it went way too fast -- the movie was over in

just a few seconds and you couldn't see what it was doing. Evidently

a 30-second interval is far too slow for the motion of a roof glacier

on a day in the mid-thirties.

But surely that's solvable in software? There must be a way to get avconv

to make duplicates of each frame, if I don't mind that the movie comes out slightly jumpy. I read through the avconv manual, but it wasn't

very clear about this. After a lot of fiddling and googling and help

from a more expert friend, I ended up with this:

avconv -r 3 -start_number 8252 -i 'img_%04d.jpg' -vcodec libx264 -r 30 timelapse.mp4

In avconv, -r specifies a frame rate for the next file, input or output, that will be specified. So -r 3 specifies the frame rate for the set of input images, -i 'img_%04d.jpg'; the later -r 30 then sets a separate frame rate for the output file, timelapse.mp4, and avconv fills the gap by repeating each input image (30 / 3 = 10 output frames per image). The start number is because the first file in my sequence is named img_8252.jpg.

30, I'm told, is a reasonable frame rate for movies intended to be watched

on typical 60FPS monitors; 3 is a number I adjusted until the glacier in

the movie moved at what seemed like a good speed.
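The arithmetic behind choosing that 3 is easy to sketch. Roughly an hour of shooting at a 30-second interval yields about 120 stills; assuming avconv reads an image sequence at a default of 25 frames per second when no input -r is given, the movie is over in under five seconds. The helper names below are hypothetical.

```python
def frame_count(shooting_seconds: float, interval_seconds: float) -> int:
    """Approximate number of stills an intervalometer produces in a session."""
    return int(shooting_seconds // interval_seconds)


def movie_seconds(frames: int, input_fps: float) -> float:
    """Playback length when each still is shown for 1/input_fps seconds."""
    return frames / input_fps


def copies_per_still(input_fps: float, output_fps: float) -> float:
    """How many output frames each still fills when output_fps > input_fps."""
    return output_fps / input_fps


if __name__ == "__main__":
    frames = frame_count(60 * 60, 30)  # about an hour at a 30-second interval
    print(frames, "frames")
    print(movie_seconds(frames, 25), "s at an assumed default of 25 fps")
    print(movie_seconds(frames, 3), "s at -r 3")
    print(copies_per_still(3, 30), "output frames per still with -r 3 ... -r 30")
```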

The movies came out quite interesting! The main movie, from the top,

is the most interesting; the one from the underside is shorter.

I wish I had a time-lapse of that folded sheet I showed above ...

but that happened overnight on the night after I made the movies.

By the next morning there wasn't enough left to be worth setting up

another time-lapse. But maybe one of these years I'll have a chance to

catch a sheet-folding roof glacier.

Tags: photography, time-lapse, glacier, snow

19:46 Feb 03, 2015

Thu, 16 Oct 2014

Last week both of the local mountain ranges turned gold simultaneously

as the aspens turned. Here are the Sangre de Cristos on a stormy day:

![[Sangre de Cristos gold with aspens]](http://shallowsky.com/blog/images/aspens/img_6833c.jpg)

And then over the weekend, a windstorm blew a lot of those leaves away,

and a lot of the gold is gone now. But the aspen groves are still

beautiful up close ... here's one from Pajarito Mountain yesterday.

![[Aspen grove on Pajarito Mountain]](http://shallowsky.com/blog/images/aspens/img_1243c.jpg)

Tags: photography, nature

13:37 Oct 16, 2014

Thu, 02 Oct 2014

![[double rainbow]](http://shallowsky.com/doublerainbow/img_9703.jpg)

The wonderful summer thunderstorm season here seems to have died down.

But while it lasted, we had some spectacular double rainbows.

And I kept feeling frustrated when I took the SLR outside only to find

that my 18-55mm kit lens was nowhere near wide enough to capture it.

I could try

stitching

it together as a panorama, but panoramas of rainbows turn out to

be quite difficult -- there are no clean edges in the photo to tell

you where to join one image to the next, and automated programs like

Hugin won't even try.

There are plenty of other beautiful vistas here too -- cloudscapes,

mesas, stars. Clearly, it was time to invest in a wide-angle lens. But

how wide would it need to be to capture a double rainbow?

All over the web you can find out that a rainbow has a radius of 42

degrees, so you need a lens that covers 84 degrees to get the whole thing.

But what about a double rainbow? My web searches came to naught.

Lots of pages talk about double rainbows, but Google wasn't finding

anything that would tell me the angle.

I eventually gave up on the web and went to my physical bookshelf,

where Color and Light in Nature gave me a nice table

of primary and secondary rainbow angles of various wavelengths of light.

It turns out that the 42 degrees everybody quotes is for light of 600 nm wavelength, an orange color. At that wavelength, the primary angle is 42.0° and the secondary angle is 51.0°.

Armed with that information, I went back to Google and searched for

double rainbow 51 OR 102 angle and found a nice Slate

article on a

Double

rainbow and lightning photo. The photo in the article, while

lovely (lightning and a double rainbow in the South Dakota badlands),

only shows a tiny piece of the rainbow, not the whole one I'm hoping

to capture; but the article does mention the 51-degree angle.

Okay, so 51°×2 captures both bows in 600 nm light.

But what about other wavelengths?

A typical eye can see from about 400 nm (deep purple)

to about 760 nm (deep red). From the table in the book:

| Wavelength (nm) | Primary | Secondary |
|---|---|---|
| 400 | 40.5° | 53.7° |
| 600 | 42.0° | 51.0° |
| 700 | 42.4° | 50.3° |

Notice that while the primary angles get smaller with shorter

wavelengths, the secondary angles go the other way. That makes sense

if you remember that the outer rainbow has its colors reversed from

the inner one: red is on the outside of the primary bow, but the

inside of the secondary one.

So if I want to photograph a complete double rainbow in one shot, I need a lens that can cover at least 108 degrees: twice the widest secondary angle, 2 × 53.7° = 107.4°, rounded up.
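Putting the table into code makes that reasoning explicit. Each angle in the table is the angular radius of a bow, measured from the antisolar point (the spot directly opposite the sun), so spanning a full bow takes twice its radius, and the widest radius in the table sets the requirement. A small sketch using only the three rows quoted above:

```python
from typing import Dict, Tuple

# Angular radius of each bow, in degrees, keyed by wavelength in nm
# (the three rows from the table above).
RAINBOW_RADII: Dict[int, Tuple[float, float]] = {
    400: (40.5, 53.7),  # (primary, secondary)
    600: (42.0, 51.0),
    700: (42.4, 50.3),
}


def required_field_of_view(radii: Dict[int, Tuple[float, float]]) -> float:
    """Field of view (degrees) needed to span every bow in the table edge to edge."""
    widest = max(max(primary, secondary) for primary, secondary in radii.values())
    return 2 * widest


if __name__ == "__main__":
    print(f"{required_field_of_view(RAINBOW_RADII):.1f} degrees")
```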

What focal length lens does that translate to?

Howard's

Astronomical Adventures has a nice focal length calculator.

If I look up my Rebel XSi on Wikipedia, find out that other countries call it a 450D, plug that into the calculator, and then try various focal lengths (the calculator offers a chart but it didn't work for me), it turns out that I need an 8mm lens, which will give me a 108° 26′ 46″ field of view -- just about right.
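For an ordinary rectilinear lens, that kind of calculation uses the standard relation FOV = 2·atan(d / 2f), where d is the sensor dimension and f the focal length. This sketch assumes the 450D's APS-C sensor is 22.2 mm wide (an assumption; check your own camera's specs). With that number, 8mm gives a horizontal field of roughly 108.4°, close to the calculator's figure, while 10mm falls short at about 96° and the kit lens's 18mm gives only about 63°. The formula does not apply to fisheye lenses, which map angles onto the sensor with a different projection.

```python
import math


def rectilinear_fov_deg(sensor_mm: float, focal_mm: float) -> float:
    """Angle of view (degrees) across one sensor dimension for a rectilinear lens.

    Not valid for fisheye lenses, which use a different projection.
    """
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))


if __name__ == "__main__":
    SENSOR_WIDTH_MM = 22.2  # assumed width of the Rebel XSi / 450D sensor
    for focal in (8, 10, 18):
        print(f"{focal}mm: {rectilinear_fov_deg(SENSOR_WIDTH_MM, focal):.1f} degrees wide")
```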

![[Double rainbow with the Rokinon 8mm fisheye]](http://shallowsky.com/doublerainbow/img_6492-640.jpg) So that's what I ordered -- a Rokinon 8mm fisheye. And it turns out to

be far wider than I need. Fisheyes don't follow the rectilinear geometry that focal length calculators assume, so their actual field of view varies widely from lens to lens, and this one claims to have a 180° field. So the focal length calculator isn't all that useful for fisheyes.

At any rate, this lens is plenty wide enough to capture those double

rainbows, as you can see.

About those books

By the way, that book I linked to earlier is apparently out of print

and has become ridiculously expensive. Another excellent book on

atmospheric phenomena is

Light

and Color in the Outdoors by Marcel Minnaert

(I actually have his earlier version, titled

The

Nature of Light and Color in the Open Air). Minnaert doesn't

give the useful table of wavelengths and angles, but he has lots

of other fun and useful information on rainbows and related phenomena,

including detailed instructions for making rainbows indoors if you

want to measure angles or other quantities yourself.

Tags: nature, photography, rainbow

13:37 Oct 02, 2014

Mon, 22 Sep 2014

I had the opportunity to borrow a commercial crittercam

for a week from the local wildlife center.

![[Bushnell Trophycam vs. Raspberry Pi Crittercam]](http://shallowsky.com/blog/images/trophy-vs-critter/img_0865.jpg) Having grown frustrated with the high number of false positives on my

Raspberry Pi based

crittercam, I was looking forward to seeing how a commercial camera compared.

The Bushnell Trophycam I borrowed is a nicely compact,

waterproof unit, meant to strap to a tree or similar object.

It has an 8-megapixel camera that records photos to the SD card -- no

wi-fi. (I believe there are more expensive models that offer wi-fi.)

The camera captures IR as well as visible light, like the PiCam NoIR,

and there's an IR LED illuminator (quite a bit stronger than the cheap

one I bought for my crittercam) as well as what looks like a passive IR sensor.

I know the TrophyCam isn't immune to false positives; I've heard

complaints along those lines from a student who's using them to do

wildlife monitoring for LANL.

But how would it compare with my homebuilt crittercam?

I put out the TrophyCam the first night, with bait (sunflower seeds) in

front of the camera. In the morning I had ... nothing. No false

positives, but no critters either. I did have some shots of myself,

walking away from it after setting it up, walking up to it to adjust

it after it got dark, and some sideways shots while I fiddled with the

latches trying to turn it off in the morning, so I know it was

working. But no woodrats -- and I always catch a woodrat or two

in PiCritterCam runs. Besides, the seeds I'd put out were gone,

so somebody had definitely been by during the night. Obviously

I needed a more sensitive setting.

I fiddled with the options, changed the sensitivity from automatic

to the most sensitive setting, and set it out for a second night, side

by side with my Pi Crittercam. This time it did a little better,

though not by much: one nighttime shot with something in it,

plus one shot of someone's furry back and two shots of a mourning dove

after sunrise.

![[blown-out image from Bushnell Trophycam]](http://shallowsky.com/blog/images/trophy-vs-critter/ek000017.jpg) What few nighttime shots there were were mostly so blown out you

couldn't see any detail to be sure. Doesn't this camera know how to

adjust its exposure? The shot here has a creature in it. See it?

I didn't either, at first. It's just to the right of the bush.

You can just see the curve of its back and the beginning of a tail.

Meanwhile, the Pi cam sitting next to it caught eight reasonably exposed

nocturnal woodrat shots and two dove shots after dawn.

And 369 false positives where a leaf had moved in the wind or a dawn

shadow was marching across the ground. The TrophyCam only shot 47

photos total: 24 were of me, fiddling with the camera setup to get

them both pointing in the right direction, leaving 20 false positives.

So the Bushnell, clearly, gives you fewer false positives to hunt

through -- but you're also a lot less likely to catch an actual critter.

It also doesn't deal well with exposures in small areas and close distances:

its IR light source seems to be too bright for the camera to cope with.

I'm guessing, based on the name, that it's designed for shooting

deer walking by fifty feet away, not woodrats at a two-foot distance.

Okay, so let's see what the camera can do in a larger space. The next

two nights I set it up in large open areas to see what walked by. The

first night it caught four rabbit shots, with only five

false positives. The quality wasn't great, though: all long exposures

of blurred bunnies. The second night it caught nothing at all

overnight, but three rabbit shots the next morning. No false positives.

![[coyote caught on the TrophyCam]](http://shallowsky.com/blog/images/trophy-vs-critter/ek000003.jpg) The final night, I strapped it to a piñon tree facing a little

clearing in the woods. Only two morning rabbits, but during the night

it caught a coyote. And only 5 false positives. I've never caught a

coyote (or anything else larger than a rabbit) with the PiCam.

So I'm not sure what to think. It's certainly a lot more relaxing to

go through the minimal output of the TrophyCam to see what I caught.

And it's certainly a lot easier to set up, and more waterproof, than

my jury-rigged milk carton setup with its two AC cords, one for the Pi

and one for the IR sensor. Being self-contained and battery operated

makes it easy to set up anywhere, not just near a power plug.

But it's made me rethink my pessimistic notion that I should give up

on this homemade PiCam setup and buy a commercial camera.

Even on its most sensitive setting, I can't make the TrophyCam

sensitive enough to catch small animals.

And the PiCam gets better picture quality than the Bushnell, not to

mention the option of hooking up a separate camera with flash.

So I guess I can't give up on the Pi setup yet. I just have to come up

with a sensible way of taming the false positives. I've been doing a lot

of experimenting with SimpleCV image processing, but alas, it's no better

at detecting actual critters than my simple pixel-counting script was.
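For anyone curious what pixel-counting motion detection looks like in practice, here is a hypothetical sketch of the general idea, not the crittercam's actual script. It counts the pixels whose brightness changed by more than a threshold between two frames, and calls it a detection if enough of them did. It also shows why false positives pile up: a leaf moving in the wind or a dawn shadow changes pixels just as well as a woodrat does. Frames are assumed to be same-sized grayscale images stored as lists of rows, and both thresholds are made-up defaults.

```python
from typing import List

Frame = List[List[int]]  # grayscale 0-255, frame[y][x]


def changed_fraction(prev: Frame, curr: Frame, threshold: int = 30) -> float:
    """Fraction of pixels whose brightness changed by more than `threshold`."""
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev: Frame, curr: Frame,
                    threshold: int = 30, min_fraction: float = 0.01) -> bool:
    """Flag a frame if at least `min_fraction` of its pixels changed noticeably."""
    return changed_fraction(prev, curr, threshold) >= min_fraction


if __name__ == "__main__":
    background = [[50] * 10 for _ in range(10)]
    # A 3x3 bright blob appears in the middle of the frame.
    critter = [[200 if 4 <= x < 7 and 4 <= y < 7 else 50 for x in range(10)]
               for y in range(10)]
    drift = [[v + 10 for v in row] for row in background]  # slight lighting change
    print(motion_detected(background, critter), motion_detected(background, drift))
```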

But maybe I'll find the answer, one of these days. Meanwhile, I may

look into battery power.

Tags: crittercam, nature, raspberry pi, photography, maker

14:29 Sep 22, 2014

Thu, 18 Sep 2014

A female hummingbird -- probably a black-chinned -- hanging out at

our window feeder on a cool cloudy morning.

![[female hummingbird at the window feeder]](http://shallowsky.com/blog/images/nature/mirror-mirror.jpg)

Tags: birds, nature, photography

19:04 Sep 18, 2014

Fri, 15 Aug 2014

![[Time-lapse clouds movie on youtube]](https://i.ytimg.com/vi/JgQ4RQTvMuI/mqdefault.jpg) A few weeks ago I wrote about building a simple

Arduino-driven

camera intervalometer to take repeat photos with my DSLR.

I'd been entertained by watching the clouds build and gather and dissipate

again while I stepped through all the false positives in my

crittercam,

and I wanted to try capturing them intentionally so I could make cloud

movies.

Of course, you don't have to build an Arduino device.

A search for timer remote control or intervalometer

will find lots of good options around $20-30. I bought one

so I'll have a nice LCD interface rather than having to program an

Arduino every time I want to make movies.
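Whether it's an Arduino or a $20 remote, an intervalometer's job boils down to a small timing loop. Here is a hypothetical Python sketch of that loop. `trigger` stands in for whatever actually fires the shutter (a GPIO pin or a remote-release relay, say). Each shot is scheduled relative to the start time rather than by sleeping a fixed amount after each shot, so the interval doesn't drift.

```python
import time
from typing import Callable


def run_intervalometer(trigger: Callable[[int], None], interval_s: float, shots: int,
                       sleep: Callable[[float], None] = time.sleep,
                       clock: Callable[[], float] = time.monotonic) -> None:
    """Call trigger(n) for n = 0..shots-1, one call every interval_s seconds.

    Each shot is scheduled relative to the start time, so time spent
    inside trigger() doesn't accumulate into drift.
    """
    start = clock()
    for n in range(shots):
        delay = start + n * interval_s - clock()
        if delay > 0:
            sleep(delay)
        trigger(n)


if __name__ == "__main__":
    # Hypothetical stand-in for a shutter release: just log each shot.
    run_intervalometer(lambda n: print(f"shot {n} at {time.strftime('%H:%M:%S')}"),
                       interval_s=0.5, shots=3)
```

Passing `sleep` and `clock` in as parameters is only there so the loop can be exercised with a fake clock instead of waiting in real time.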

Setting the image size

Okay, so you've set up your camera on a tripod with the intervalometer

hooked to it. (Depending on how long your movie is, you may also want

an external power supply for your camera.)

Now think about what size images you want.

If you're targeting YouTube, you probably want to use one of

YouTube's

preferred settings, bitrates and resolutions, perhaps 1280x720 or

1920x1080. But you may have some other reason to shoot at higher resolution:

perhaps you want to use some of the still images as well as making video.

For my first test, I shot at the full resolution of the camera.

So I had a directory full of big ten-megapixel photos with

filenames ranging from img_6624.jpg to img_6715.jpg.

I copied these into a new directory, so I didn't overwrite the originals.

You can use ImageMagick's mogrify to scale them all:

mogrify -scale 1280x720 *.jpg

I had an additional issue, though: rain was threatening and I didn't

want to leave my camera at risk of getting wet while I went dinner shopping,

so I moved the camera back under the patio roof. But with my fisheye lens,

that meant I had a lot of extra house showing and I wanted to crop

that off. I used GIMP on one image to determine the x, y, width and height

for the crop rectangle I wanted.

You can even crop to a different aspect ratio from your target, and then fill the extra space with black:

mogrify -crop 2720x1450+135+315 -scale 1280 -gravity center -background black -extent 1280x720 *.jpg

If you decide to rescale your images to an unusual size, make sure both dimensions are even; otherwise avconv will complain that they're not divisible by two.

Finally: Making your movie

I found lots of pages explaining how to stitch together time-lapse movies using mencoder, and a few using ffmpeg. Unfortunately, in Debian, both are deprecated. Mplayer has been removed entirely.

The ffmpeg-vs-avconv issue is apparently a big political war, and I have no position on the matter, except that Debian has come down strongly on the side of avconv and I get tired of getting nagged at every time I run a program. So I needed to figure out how to use avconv.

I found some pages on avconv, but most of them didn't actually work. Here's what worked for me:

avconv -f image2 -r 15 -start_number 6624 -i 'img_%04d.jpg' -vcodec libx264 time-lapse.mp4

Update: I don't know where that -f image2 came from -- ignore it.

And avconv can take an input and an output frame rate. They're both specified with -r, and the only way input and output are distinguished is their position in the command line: a -r before -i applies to the input, and a -r after it (before the output filename) applies to the output. So a more appropriate command might be something like this:

avconv -r 15 -start_number 6624 -i 'img_%04d.jpg' -vcodec libx264 -r 30 time-lapse.mp4

Here 30 is the output frame rate, a good choice for people viewing on 60 Hz monitors, since each frame then lasts exactly two screen refreshes. Adjust the input frame rate (the -r 15) as needed to control the speed of your time-lapse video.

Adjust the start_number and filename appropriately for the files you have.

Avconv produces an mp4 file suitable for uploading to YouTube.

So here is my little test movie: Time Lapse Clouds.

Tags: photography, time-lapse

[

12:05 Aug 15, 2014

More photo |

permalink to this entry |

]

Sat, 09 Aug 2014

![[White-lined sphinx moth on pale trumpets]](http://shallowsky.com/nature/invertebrates/sphinxmoths/img_6577T.jpg) We're having a huge bloom of a lovely flower called pale trumpets (Ipomopsis longiflora), and it turns out that sphinx moths just love them.


The white-lined sphinx moth (Hyles lineata) is a moth the size of a hummingbird, and it behaves like a hummingbird, too. It flies during the day, hovering from flower to flower to suck nectar, being far too heavy to land on flowers like butterflies do.

![[Sphinx moth eye]](http://shallowsky.com/nature/invertebrates/sphinxmoths/img_6584eye.jpg) I've seen them before, on hikes, but only gotten blurry shots with my pocket camera. But with the pale trumpets blooming, the sphinx moths come right at sunset and feed until near dark. That gives a good excuse to play with the DSLR, telephoto lens and flash ... and I still haven't gotten a really sharp photo, but I'm making progress.


Check out that huge eye! I guess you need good vision in order to make your living poking a long wiggly proboscis into long skinny flowers while laboriously hovering in midair.

Photos here: White-lined sphinx moths on pale trumpets.

Tags: nature, moth, photography

[

21:23 Aug 09, 2014

More nature |

permalink to this entry |

]

Wed, 16 Jul 2014

![[Arduino intervalometer]](http://shallowsky.com/blog/images/hardware/img_6213-450.jpg) While testing my automated critter camera, I was getting lots of false positives caused by clouds gathering and growing and then evaporating away. False positives are annoying, but I discovered that it's fun watching the clouds grow and change in all those photos ... which got me thinking about time-lapse photography.


First, a disclaimer: it's easy and cheap to just buy an intervalometer. Search for "timer remote control" or "intervalometer" and you'll find plenty of options for around $20-30. In fact, I ordered one. But, hey, it's not here yet, and I'm impatient. And I've always wanted to try controlling a camera from an Arduino. This seemed like the perfect excuse.

Why an Arduino rather than a Raspberry Pi or BeagleBone? Just because it's simpler and cheaper, and this project doesn't need much compute power. But everything here should be applicable to any microcontroller.

My Canon Rebel XSi has a fairly simple wired remote control plug: a standard 2.5mm stereo phone plug. I say "standard" as though you can just walk into Radio Shack and buy one, but in fact they turned out to be surprisingly difficult to find, even when I was in Silicon Valley. Fortunately, I had found some several years ago, and had cables already wired up waiting for an experiment.

The outside connector ("sleeve") of the plug is ground. Connecting ground to the middle ("ring") conductor makes the camera focus, like pressing the shutter button halfway; connecting ground to the end ("tip") conductor makes it take a picture.

I have a wired cable release that I use for astronomy, and spent a few minutes with an ohmmeter verifying what did what, but if you don't happen to have a cable release and a multimeter, there are plenty of Canon remote control pinout diagrams on the web.

Now we need a way for the controller to connect one pin of the remote to another on command.

There are ways to simulate that with transistors -- my Arduino-controlled robotic shark project did that. However, the shark was about a $40 toy, while my DSLR cost quite a bit more than that. While I did find several people on the web saying they'd used transistors with a DSLR with no ill effects, I found a lot more who were nervous about trying it. I decided I was one of the nervous ones.

The alternative to transistors is to use something like a relay. In a relay, voltage applied across one pair of terminals -- the relay's coil, driven by the signal from the controller -- creates a magnetic field that closes a switch and joins another pair of contacts -- the wires going to the camera's remote.

But there's a problem with relays: that magnetic field, when it collapses, can send a pulse of current back up the wire to the controller, possibly damaging it.

There's another alternative, though. An opto-isolator works like a relay but without the magnetic pulse problem. Instead of a magnetic field, it uses an LED (internally, inside the chip where you can't see it) and a photo sensor. I bought some opto-isolators a while back and had been looking for an excuse to try one. Actually two: I needed one for the focus pin and one for the shutter pin.

How do you choose which opto-isolator to use out of the gazillion options available in a components catalog? I don't know, but when I bought a selection of them a few years ago, it included a 4N25, 4N26 and 4N27, which seem to be popular and well documented, as well as a few other models that are so unpopular I couldn't even find a datasheet for them. So I went with the 4N25.

Wiring an opto-isolator is easy. You do need a current-limiting resistor in series with the input, because the input side is an LED. 380Ω is apparently a good value for the 4N25, but it's not critical. I didn't have any 380Ω but I had a bunch of 330Ω, so that's what I used. The inputs (the signals from the Arduino) go between pins 1 and 2, with the resistor in series; the outputs (the wires to the camera remote plug) go between pins 4 and 5, as shown in the diagram on this Arduino and Opto-isolators discussion, except that I didn't use any pull-up resistor on the output. (On the 4N25, pin 1 is the LED's anode and pin 2 its cathode; pin 4 is the phototransistor's emitter and pin 5 its collector, so pin 4 is the one that goes to the plug's ground sleeve.)

Then you just need a simple Arduino program to drive the inputs. Apparently the camera wants to see a focus half-press before it gets the input to trigger the shutter, so I put in a slight delay there, and another delay while I "hold the shutter button down" before releasing both of them.

Here's some Arduino code to shoot a photo every ten seconds:

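// Pins driving the two opto-isolator inputs (through the resistors).
const int focusPin = 6;
const int shutterPin = 7;

// Timings in milliseconds. unsigned long matches what delay() takes;
// on AVR boards like the Uno an int is 16 bits and tops out at 32767,
// so a longer interval stored in an int would overflow.
const unsigned long focusDelay = 50;         // half-press before shutter
const unsigned long shutterOpen = 100;       // "hold the button down"
const unsigned long betweenPictures = 10000; // ten seconds between shots

void setup()
{
  pinMode(focusPin, OUTPUT);
  pinMode(shutterPin, OUTPUT);
}

// Focus first, then fire the shutter, then release both.
void snapPhoto()
{
  digitalWrite(focusPin, HIGH);
  delay(focusDelay);
  digitalWrite(shutterPin, HIGH);
  delay(shutterOpen);
  digitalWrite(shutterPin, LOW);
  digitalWrite(focusPin, LOW);
}

void loop()
{
  delay(betweenPictures);
  snapPhoto();
}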

Naturally, since then we haven't had any dramatic clouds, and the lightning storms have all been late at night after I went to bed. (I don't want to leave my nice camera out unattended in a rainstorm.)