Shallow Thoughts : tags : web

Akkana's Musings on Open Source Computing and Technology, Science, and Nature.

Wed, 26 Mar 2025

Michael Kennedy asked whether people are using search engines less because of AI chatbots.

I haven't really gotten into using AI chatbots as coding assistants,

so I'm not one to say. But it did make me wonder how many searches I do.

Michael saw a stat that people average fewer than 300 searches per month;

he thought that was absurdly low until he checked his own stats and

found he'd only made 211 searches so far in March.

(Of course, March isn't over yet.

He didn't give a search number for a complete month.)

Read more ...

Tags: tech, firefox, web, python, google, sqlite

[

16:07 Mar 26, 2025

More tech |

permalink to this entry |

]

Thu, 22 Feb 2024

I maintain quite a few small websites. I have several of my own under

different domains (shallowsky.com, nmbilltracker.com and so forth),

plus a few smaller projects like Flask apps running on a different port.

In addition, I maintain websites for several organizations on a volunteer

basis (because if you join any volunteer organization and they find out

you're at all technical, that's the first job they want you to do).

I typically maintain a local copy of each website, so I can try out

any change locally first.

Read more ...

Tags: web, shell, programming

[

16:18 Feb 22, 2024

More linux |

permalink to this entry |

]

Thu, 22 Jun 2023

Someone contacted me because my Galilean Moons of Jupiter page stopped working.

We've been upgrading the web server to the latest Debian, Bookworm

(we were actually two revs back, on Buster, rather than on Bullseye,

due to excessive laziness) and there have been several glitches that I

had to fix, particularly with the apache2 configuration.

But Galilean? That's just a bunch of JavaScript, no server-side

involvement like Flask or PHP or CGI.

Read more ...

Tags: web, javascript, apache

[

13:53 Jun 22, 2023

More linux |

permalink to this entry |

]

Thu, 13 Apr 2023

Last week I spent some time monitoring my apache error logs to try to

get rid of warnings from my website and see if there are any errors I

need to fix. (Answer: yes, there were a few things I needed to fix,

mostly due to changes in libraries since I wrote the pages in question.)

The vast majority of lines in my error log, however, are requests for

/wp-login.php or /xmlrpc.php. There are so many of them

that they drown out any actual errors on the website.
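If all you want is to skim the log without that noise, dropping every line that mentions one of those two paths goes a long way. Here's a minimal sketch in JavaScript (Node). It assumes the log has already been read into a string, for instance with `fs.readFileSync(path, "utf8")`. The name `interestingLines` is made up for illustration, and this isn't necessarily how the full post handles it:

```javascript
// Paths that bots probe constantly, even on sites that don't run WordPress.
const NOISE_PATHS = ["/wp-login.php", "/xmlrpc.php"];

// Return the non-empty log lines that don't mention any of the noise paths.
function interestingLines(logText) {
  return logText
    .split("\n")
    .filter((line) => line.trim() !== "")
    .filter((line) => !NOISE_PATHS.some((p) => line.includes(p)));
}
```

Matching on the path substring is crude. A legitimate error that happens to mention one of those paths would be hidden too, which is probably fine on a site with no WordPress install.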

Read more ...

Tags: web, apache

[

10:28 Apr 13, 2023

More tech/web |

permalink to this entry |

]

Tue, 10 Jan 2023

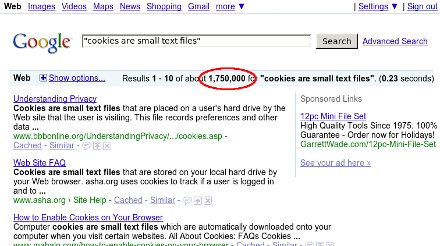

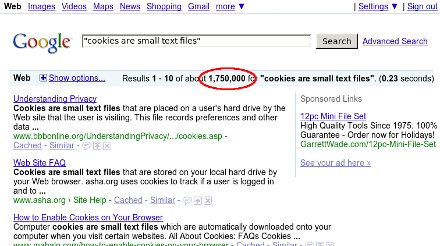

I wanted to find something I'd googled for recently.

That should be easy, right? Just go to the browser's history window.

Well, actually not so much.

You can see them in Firefox's history window, but they're interspersed

with all the other places you've surfed so it's hard to skim the list quickly.

I decided to take a little time and figure out how to extract the search terms. I was pretty sure that they were in places.sqlite inside the Firefox profile. And they were.
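Once the URLs are out of the database, picking out the search terms is mostly URL parsing. This JavaScript (Node) sketch makes a few assumptions. You've already dumped the `url` column of the `moz_places` table, for example with the `sqlite3` command-line tool. Working on a copy of places.sqlite is safer while Firefox is running. The searches have Google's usual `https://www.google.com/search?q=...` form. Both function names are made up:

```javascript
// Return the search terms from a Google search URL, or null if the URL
// isn't a Google search. Assumes the terms are in the "q" query parameter.
function googleSearchTerms(url) {
  let u;
  try {
    u = new URL(url);
  } catch {
    return null; // not a parseable absolute URL
  }
  // Match google.com, www.google.com, google.co.uk and so on.
  const isGoogle = /(^|\.)google\.[a-z.]+$/.test(u.hostname);
  if (!isGoogle || u.pathname !== "/search") return null;
  return u.searchParams.get("q"); // already decoded: "+" becomes a space
}

// Distinct search terms from a list of history URLs, in first-seen order.
function searchTermsFrom(urls) {
  const terms = urls.map((url) => googleSearchTerms(url)).filter((t) => t);
  return [...new Set(terms)];
}
```

The de-duplication matters because paging through results (`&start=10`) leaves one history entry per page for the same query.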

Read more ...

Tags: web, firefox, google, sqlite

[

16:54 Jan 10, 2023

More tech/web |

permalink to this entry |

]

Sat, 29 Jan 2022

Firefox's zoom settings are useful. You can zoom in on a page with Ctrl-+ (actually Ctrl-= on a US-English keyboard), or out with Ctrl--.

Useful, that is, until you start noticing that lots of pages you

visit have weirdly large or small font sizes, and it turns out that

Firefox is remembering a Zoom setting you used on that site

half a year ago on a different monitor.

Whenever you zoom, Firefox remembers that site, and uses that

zoom setting any time you go to that site forevermore (unless you

zoom back out).

Now that I'm using the same laptop in different modes —

sometimes plugged into a monitor, sometimes using its own screen —

that has become a problem.

Read more ...

Tags: web, firefox, sql, database

[

18:04 Jan 29, 2022

More tech/web |

permalink to this entry |

]

Sun, 12 Dec 2021

I've spent a lot of the past week battling Russian spammers on

the New Mexico Bill Tracker.

The New Mexico legislature just began a special session to define the

new voting districts, which happens every 10 years after the census.

When new legislative sessions start, the BillTracker usually needs

some hand-holding to make sure it's tracking the new session. (I've

been working on code to make it notice new sessions automatically, but

it's not fully working yet). So when the session started, I checked

the log files...

and found them full of Russian spam.

Specifically, what was happening was that a bot was going to my

new user registration page and creating new accounts where the

username was a paragraph of Cyrillic spam.

Read more ...

Tags: web, tech, spam, programming, python, flask, captcha

[

18:50 Dec 12, 2021

More tech/web |

permalink to this entry |

]

Mon, 15 Nov 2021

A priest, a minister, and a rabbit walk into a bar.

The bartender asks the rabbit what he'll have to drink.

"How should I know?" says the rabbit. "I'm only here because of autocomplete."

Firefox folks like to call the location bar/URL bar the "awesomebar"

because of the suggestions it makes. Sometimes, those suggestions

are pretty great; there are a lot of sites I don't bother to bookmark

because I know they will show up as the first suggestion.

Other times, the "awesomebar" is not so awesome. It gets stuck on some site I never use, and there's seemingly no way to make Firefox forget that site.

Read more ...

Tags: web, firefox, sql

[

16:54 Nov 15, 2021

More tech/web |

permalink to this entry |

]

Fri, 06 Aug 2021

I maintain quite a few domains, both domains I own and domains

belonging to various nonprofits I belong to.

For testing these websites, I make virtual domains in apache,

choosing an alias for each site.

For instance, for the LWVNM website, the apache site file has

```
<VirtualHost *:80>
    ServerName lwvlocal
```

and my host table, /etc/hosts, has

```
127.0.0.1 localhost lwvlocal
```

(The localhost line in my host table has entries for all the various virtual hosts I use, not just this one.)

That all used to work fine. If I wanted to test a new page on the LWVNM

website, I'd go to Firefox's urlbar and type something like

lwvlocal/newpage.html

and it would show me the new page, which I could work on until

it was time to push it to the web server.

A month or so ago, a new update to Firefox broke that.

Read more ...

Tags: firefox, web

[

13:34 Aug 06, 2021

More tech/web |

permalink to this entry |

]

Sun, 06 Jun 2021

![analemma webapp](/blog/images/screenshots/analemma-preview.jpg)

I have another PEEC Planetarium talk coming up in a few weeks, a talk on the summer solstice, co-presenting with Chick Keller on Fri, Jun 18 at 7pm MDT.


I'm letting Chick do most of the talking about archaeoastronomy

since he knows a lot more about it than I do, while I'll be talking

about the celestial dynamics -- what is a solstice, what is the sun

doing in our sky and why would you care, and some weirdnesses relating

to sunrise and sunset times and the length of the day.

And of course I'll be talking about the analemma, because

just try to stop me talking about analemmas whenever the topic

of the sun's motion comes up.

But besides the analemma, I need a lot of graphics of the earth

showing the terminator, the dividing line between day and night.

Read more ...

Tags: science, astronomy, programming, javascript, web

[

18:33 Jun 06, 2021

More science/astro |

permalink to this entry |

]

Tue, 30 Mar 2021

In my eternal quest for a decent RSS feed for top World/National news,

I decided to try subscribing to the New York Times online.

But when I went to try to add them to my RSS reader, I discovered

it wasn't that easy: their login page sometimes gives a captcha, so

you can't just set a username and password in the RSS reader.

A common technique for sites like this is to log in with a browser,

then copy the browser's cookies into your news reading program.

At least, I thought it was a common technique -- but when I tried

a web search, examples were surprisingly hard to find.

None of the techniques to examine or save browser cookies were all that simple, so I ended up writing a browser_cookies.py Python script to extract cookies from Chromium and Firefox browsers.
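The browser_cookies.py script isn't reproduced here. As a rough illustration of the last step only, here's a JavaScript sketch that writes cookie rows out in the tab-separated Netscape cookies.txt format, which curl (`-b cookies.txt`) and wget (`--load-cookies`) can read. It assumes you've already read the rows from Firefox's cookies.sqlite, whose `moz_cookies` table has `host`, `path`, `isSecure`, `expiry`, `name` and `value` columns. It also assumes `expiry` is already in Unix seconds. Both function names are hypothetical:

```javascript
// Convert one cookie row into a Netscape cookies.txt line.
// Tab-separated fields: domain, include-subdomains flag, path,
// secure flag, expiry (Unix seconds), name, value.
function toNetscapeLine({ host, path, isSecure, expiry, name, value }) {
  const flag = (b) => (b ? "TRUE" : "FALSE");
  return [host, flag(host.startsWith(".")), path, flag(isSecure),
          String(expiry), name, value].join("\t");
}

// Build a whole cookies.txt file from an array of cookie rows.
function toCookiesTxt(rows) {
  const header = "# Netscape HTTP Cookie File";
  return [header, ...rows.map(toNetscapeLine)].join("\n") + "\n";
}
```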

Read more ...

Tags: web, programming, python, cookies, privacy

[

11:19 Mar 30, 2021

More programming |

permalink to this entry |

]

Sat, 08 Aug 2020

It's been a frustration with Firefox for years. You click on a link

and get the "What should Firefox do with this file?" dialog, even

though it's a file type you view all the time -- PDF, say, or JPEG.

You click "View in browser" or "Save file" or whatever ... then you

check the "Do this automatically for files like this from now on"

checkbox, thinking, I'm sure I checked this last time.

Then a few minutes later, you go to a file of the exact same type, and you get the dialog again. That damn checkbox is like the button on street crossings or elevators: a no-op to make you think you're doing something.

I never tried to get to the bottom

of why this happens with some PDFs and not others, some JPGs but not others.

But Los Alamos puts their government meetings on a site called

Legistar.

Legistar does everything as PDF -- and those PDFs all trigger this

Firefox bug, prompting for a download rather than displaying in

Firefox's PDF viewer.

Read more ...

Tags: firefox, web, privacy

[

16:38 Aug 08, 2020

More tech/web |

permalink to this entry |

]

Thu, 09 Jan 2020

I wrote about various ways of managing a persistent popup window from JavaScript, eventually settling on a postMessage() solution that turned out not to work in QtWebEngine. So I needed another solution.

Data URI

First I tried using a data: URI.

In that scheme, you encode a page's full content into the URL. For instance:

try this in your browser:

data:text/html,Hello%2C%20World!
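Building that kind of URL from JavaScript just means percent-encoding the markup and putting the media type in front. Here's a small sketch; `makeDataURI` is a name made up here. It uses encodeURIComponent rather than encodeURI, because encodeURI leaves characters like `#` unescaped, and a bare `#` would end the data and start a URL fragment:

```javascript
// Turn a string of HTML into a data: URI that a browser can open.
function makeDataURI(html) {
  // encodeURIComponent escapes "#", "?", "&" and "," as well as spaces,
  // so the whole string stays part of the data.
  return "data:text/html," + encodeURIComponent(html);
}

console.log(makeDataURI("Hello, World!")); // data:text/html,Hello%2C%20World!
```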

So for a longer page, you can do something like:

```javascript
var htmlhead = '<html>\n'
    + '<head>\n'
    + '<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">\n'
    + '<link rel="stylesheet" type="text/css" href="stylesheet.css">\n'
    + '</head>\n'
    + '\n'
    + '<body>\n'
    + '<div id="mydiv">\n';

var htmltail = '</div>\n'
    + '</body>\n'
    + '</html>\n';

// noteText holds the notes HTML. encodeURIComponent (rather than encodeURI)
// also escapes "#", which would otherwise end the data and start a fragment.
var encodedDataURI = encodeURIComponent(htmlhead + noteText + htmltail);

var notewin = window.open('data:text/html,' + encodedDataURI, "notewindow",
                          "width=800,height=500");
```

Nice and easy -- and it even works from file: URIs!

Well, sort of works. It turns out it has a problem related to

the same-origin problems I saw with postMessage.

A data: URI is always opened with a unique opaque origin (location.origin reports it as null), and two such pages can't talk to each other.

But I don't need them to talk to each other if I'm not using postMessage,

do I? Yes, I do.

The problem is that stylesheet I included in htmlhead above:

```html
<link rel="stylesheet" type="text/css" href="stylesheet.css">
```

All browsers I tested refuse to load the stylesheet in the data: popup. This seems strange: don't people use stylesheets from other domains fairly often? Maybe it's a behavior special to null-origin pages. But in any case, I couldn't find a way to get my data: URI popup to load a stylesheet. So unless I hard-code all the styles I want for the notes page into the JavaScript that opens the popup window (and I'd really rather not do that), I can't use data: as a solution.
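One possible explanation, offered as a guess and not tested in every browser: the href is relative, and a relative URL has nothing to resolve against when the page's own URL is a data: URL. The WHATWG URL parser, which Node's URL class implements, refuses to resolve a relative path against a data: base, while an absolute href resolves fine. Here's a quick check in JavaScript; `resolveHref` is a made-up helper:

```javascript
// Resolve href against base the way a page would, or return null
// if the URL parser rejects the combination.
function resolveHref(href, base) {
  try {
    return new URL(href, base).href;
  } catch {
    return null;
  }
}

// Relative href from an http: page resolves normally.
console.log(resolveHref("stylesheet.css", "http://localhost/talk/slide1.html"));
// Relative href from a data: page has no usable base.
console.log(resolveHref("stylesheet.css", "data:text/html,Hello"));
// An absolute href doesn't need the base at all.
console.log(resolveHref("http://localhost/talk/stylesheet.css", "data:text/html,Hello"));
```

If that's the cause, an absolute stylesheet URL might get further, though a browser could still block the load for other reasons.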

Clever hack: Use the Same Page, Loaded in a Different Way

That's when I finally came across Remy Sharp's page, Creating popups without HTML files.

Remy first explores the data: URI solution, and rejects it because of

the cross-origin problem, just as I did. But then he comes up with a

clever hack. It's ugly, as he acknowledges ... but it works.

The trick is to create the popup with the URL of the parent page that

created it, but with a named anchor appended:

parentPage.html#popup.

Then, in the Javascript, check whether #popup is in the

URL. If not, we're in the parent page and still need to call

window.open to create the popup. If it is there, then

the JS code is being executed in the popup. In that case, rewrite the

page as needed. In my case, since I want the popup to show only whatever

is in the div named #notes, and the slide content is all inside a div

called #page, I can do this:

```javascript
function updateNoteWindow() {
    if (window.location.hash.indexOf('#notes') === -1) {
        // We're in the parent page: open this same page with #notes appended.
        window.open(window.location + '#notes', 'noteWin',
                    'width=300,height=300');
        return;
    }

    // If here, it's the popup notes window.
    // Remove the #page div
    var pageDiv = document.getElementById("page");
    pageDiv.remove();

    // and rename the #notes div so it will be displayed in a different place
    var notesDiv = document.getElementById("notes");
    notesDiv.id = "fullnotes";
}
```

It works great, even in file: URIs, and even in QtWebEngine.

That's the solution I ended up using.
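One detail to watch with this trick: `window.location + '#notes'` assumes the page URL has no fragment yet. If slide URLs can already carry one, the popup gets something like `slide3.html#top#notes`. A possible refinement, sketched here as an assumption and not what the code above does, is to build the popup URL with the URL class so any existing fragment gets replaced. `popupURL` and `isNotesPopup` are hypothetical names:

```javascript
// URL for the notes popup: the same page, with the fragment set to "#notes".
function popupURL(pageHref) {
  const u = new URL(pageHref);
  u.hash = "notes"; // replaces any existing fragment
  return u.href;
}

// True when this copy of the page is the notes popup.
function isNotesPopup(pageHref) {
  return new URL(pageHref).hash === "#notes";
}

// In the browser:
//   if (!isNotesPopup(window.location.href))
//       window.open(popupURL(window.location.href), "noteWin", "width=300,height=300");
```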

Tags: programming, web, javascript

[

19:44 Jan 09, 2020

More tech/web |

permalink to this entry |

]

Sun, 05 Jan 2020

I'm trying to update my htmlpreso HTML presentation slide system to allow for a separate notes window.

Up to now, I've just used display mirroring. I connect to the

projector at 1024x768, and whatever is on the first (topmost/leftmost)

1024x768 pixels of my laptop screen shows on the projector. Since my

laptop screen is wider than 1024 pixels, I can put notes to myself

to the right of the slide, and I'll see them but the audience won't.

That works fine, but I'd like to be able to make the screens completely

separate, so I can fiddle around with other things while still

displaying a slide on the projector. But since my slides are in HTML,

and I still want my presenter notes, that requires putting the notes

in a separate window, instead of just to the right of each slide.

The notes for each slide are in a <div id="notes">

on each page. So all I have to do is pop up another browser window

and mirror whatever is in that div to the new window, right?

Sure ...

except this is JavaScript, so nothing is simple. Every little thing

is going to be multiple days of hair-tearing frustration, and this

was no exception.

I should warn you up front that I eventually found a much simpler way

of doing this. I'm documenting this method anyway because it seems

useful to be able to communicate between two windows, but if you

just want a simple solution for the "pop up notes in another window"

problem, stay tuned for Part 2.

Step 0: Give Up On file:

Normally I use file: URLs for presentations. There's no need

to run a web server, and in fact, on my lightweight netbook I usually

don't start apache2 by default, only if I'm actually working on

web development.

But most of the methods of communicating between windows don't work in

file URLs, because of the "same-origin policy".

That policy is a good security measure: it ensures that a page

from innocent-url.com can't start popping up windows with content

from evilp0wnU.com without you knowing about it. I'm good with that.

The problem is that file: URLs have location.origin

of null, and every null-origin window is considered to be a

different origin -- even if they're both from the same directory. That

makes no sense to me, but there seems to be no way around it. So if I

want notes in a separate window, I have to run a web server and use

http://localhost.
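To make the rule concrete, here's a deliberately simplified model of the check in JavaScript. It isn't how a browser implements the same-origin policy. It just compares the origins the URL class reports, and treats file: pages (and anything whose origin serializes as "null") as opaque. Opaque origins never match anything, not even a page in the same directory. `sameOrigin` is a made-up name:

```javascript
// Simplified same-origin test: scheme, host and port must all match,
// and opaque origins (file: pages, data: pages) never match anything.
function sameOrigin(hrefA, hrefB) {
  const a = new URL(hrefA);
  const b = new URL(hrefB);
  const opaque = (u) => u.protocol === "file:" || u.origin === "null";
  if (opaque(a) || opaque(b)) return false;
  return a.origin === b.origin;
}
```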

Step 1: A Separate Window

The first step is to pop up the separate notes window, or get a

handle to it if it's already up.

JavaScript offers window.open(), but there's a trick:

if you just call

```javascript
notewin = window.open("notewin.html", "notewindow");
```

you'll actually get a new tab, not a new window. If you actually

want a window, the secret code for that is to give it a size:

```javascript
notewin = window.open("notewin.html", "notewindow",
                      "width=800,height=500");
```

There's apparently no way to just get a handle to an existing window. The only way is to call window.open(), which pops up a new window if it wasn't there before, or reloads it if it's already there.

I saw some articles implying that passing an empty string ""

as the first argument would return a handle to an existing window without

changing it, but it's not true: in Firefox and Chromium, at least,

that makes the existing window load about:blank instead of

whatever page it already has. So just give it the same page every time.
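Putting those two observations together, it can help to wrap the call so every caller passes the same page and the same size. Here's a sketch with a hypothetical `openNoteWindow` helper. The window object is passed in only so the function can be tried outside a browser:

```javascript
// Size features: passing these is what gets a real window, not a tab.
const NOTE_FEATURES = { width: 800, height: 500 };

function featureString(features) {
  return Object.entries(features)
    .map(([key, value]) => `${key}=${value}`)
    .join(",");
}

// Always open (or reload) the same page in the same named window.
// Never pass "" as the URL: that loads about:blank into an existing window.
function openNoteWindow(win) {
  return win.open("notewin.html", "notewindow", featureString(NOTE_FEATURES));
}

// In a page: var notewin = openNoteWindow(window);
```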

Step 2: Figure Out When the Window Has Loaded

There are several ways to change the content in the popup window from

the parent, but they all have one problem:

if you update the content right away after calling window.open,

whatever content you put there will be overwritten immediately when

the popup reloads its notewin.html page (or even about:blank).

So you need to wait until the popup is finished loading.

That sounds suspiciously easy. Assuming you have a function called

updateNoteWinContent(), just do this:

```javascript
// XXX This doesn't work:
notewin.addEventListener('load', updateNoteWinContent, false);
```

Except it turns out the "load" event listener isn't called on reloads,

at least not in popups.

So this will work the first time, when the

note window first pops up, but never after that.

I tried other listeners, like "DOMContentLoaded" and

"readystatechange", but none of them are called on reload.

Why not? Who knows?

It's possible this is because the listener gets set too early, and

then is wiped out when the page reloads, but that's just idle

speculation.

For a while, I thought I was going to have to resort to an ugly hack:

sleep for several seconds in the parent window to give the popup time

to load:

```javascript
await new Promise(r => setTimeout(r, 3000));
```

(requires declaring the calling function as async).

This works, but ... ick.

Fortunately, there's a better way.

Step 2.5: Simulate onLoad with postMessage

What finally worked was a tricky way to use postMessage()

in reverse. I'd already experimented with using postMessage()

from the parent window to the popup, but it didn't work because the

popup was still loading and wasn't ready for the content.

What works is to go the other way. In the code loaded by the popup

(notewin.html in this example), put some code at the end

of the page that calls

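```javascript
// The second argument limits delivery to a parent on the same origin.
window.opener.postMessage("Loaded", window.location.origin);
```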

Then in the parent, handle that message, and don't try to update the

popup's content until you've gotten the message:

```javascript
function receiveMessageFromPopup(event) {
    console.log("Parent received a message from the notewin:", event.data);

    // Optionally, check whether event.data == "Loaded"
    // if you want to support more than one possible message.

    // Update the "notes" div in the popup notewin:
    var noteDiv = notewin.document.getElementById("notes");
    noteDiv.innerHTML = "Here is some content.";
}

window.addEventListener("message", receiveMessageFromPopup, false);
```
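As written, the handler accepts any message from anywhere. The whole setup already depends on both windows sharing an origin, so one option is to ignore messages unless they come from that origin and carry the expected "Loaded" text. Here's a sketch of that check; `isPopupLoadedMessage` is a made-up name. It's written as a plain function so it can be tried on ordinary objects:

```javascript
// True if a "message" event is the popup's "Loaded" announcement
// from the same origin as this page.
function isPopupLoadedMessage(event, expectedOrigin) {
  return event.origin === expectedOrigin && event.data === "Loaded";
}

// In receiveMessageFromPopup(event), before touching the popup:
//   if (!isPopupLoadedMessage(event, window.location.origin)) return;
```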

Here's a complete working test: Test of Persistent Popup Window.

In the end, though, this didn't solve my presentation problem.

I got it all debugged and working, only to discover that

postMessage doesn't work in QtWebEngine, so

I couldn't use it in my slide presentation app.

Fortunately, I found a couple of other ways: stay tuned for Part 2.

(Update: Part 2: A Clever Hack.)

Debugging Multiple Windows: Separate Consoles

A note on debugging:

One thing that slowed me down was that JS I put in the popup didn't

seem to be running: I never saw its console.log() messages.

It took me a while to realize that each window has its own web console,

both in Firefox and Chromium. So you have to wait until the popup has

opened before you can see any debugging messages for it. Even then,

the popup window doesn't have a menu, and its context menu doesn't

offer a console window option. But it does offer Inspect element,

which brings up a Developer Tools window where you can click on

the Console tab to see errors and debugging messages.

Tags: programming, web, javascript

[

20:29 Jan 05, 2020

More tech/web |

permalink to this entry |

]

Thu, 10 Jan 2019

Years ago, I saw someone demonstrating an obscure slide presentation

system, and one of the tricks it had was to let you draw on slides

with the mouse. So you could underline or arrow specific points,

or, more important (since underlines and arrows are easily included

in slides), draw something in response to an audience question.

Neat feature, but there were other reasons I didn't want to switch to

that particular slide system.

Many years later, and quite happy with my home-grown htmlpreso system for HTML-based slides, I was sitting in an astronomy panel discussion listening to someone explain black holes when it occurred to me: with HTML Canvas being a fairly mature technology, how hard could it be to add drawing to my htmlpreso setup? It would just take a JavaScript snippet that creates a canvas on top of the existing slide, plus some basic event handling and drawing code that surely someone else has already written.
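Much of that basic event handling comes down to translating mouse coordinates into canvas coordinates. A canvas laid over a slide may be displayed at a different size than its pixel buffer. Here's a sketch of that one piece, not htmlpreso's actual code. `rect` stands for what `getBoundingClientRect()` returns for the canvas, and `toCanvasCoords` is a made-up name:

```javascript
// Map a mouse position (clientX, clientY) to canvas pixel coordinates.
// rect: the canvas's on-screen box {left, top, width, height};
// canvasWidth/canvasHeight: the size of the canvas's drawing buffer.
function toCanvasCoords(clientX, clientY, rect, canvasWidth, canvasHeight) {
  return {
    x: ((clientX - rect.left) * canvasWidth) / rect.width,
    y: ((clientY - rect.top) * canvasHeight) / rect.height,
  };
}

// In the browser, a mousemove handler might do:
//   var p = toCanvasCoords(e.clientX, e.clientY, canvas.getBoundingClientRect(),
//                          canvas.width, canvas.height);
//   ctx.lineTo(p.x, p.y);
//   ctx.stroke();
```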

![Drawing on top of an HTML slide](http://shallowsky.com/blog/images/screenshots/htmlpreso-drawing.jpg)

Curled up in front of the fire last night with my laptop, I needed only a couple of hours to whip up a proof of concept that seems remarkably usable.

I've added it to htmlpreso.

I have to confess, I've never actually felt the need to draw on a slide

during a talk. But I still love knowing that it's possible.

It'll be interesting to see how often I actually use it.

To play with drawing on slides, go to my HTMLPreso self-documenting slide set (with JavaScript enabled) and, on any slide, type Shift-D.

Some color swatches should appear in the upper right of the slide,

and now you can scribble over the tops of slides to your heart's content.

Tags: talks, programming, web

[

14:39 Jan 10, 2019

More speaking |

permalink to this entry |

]

Sun, 29 Jul 2018

In my several recent articles about building Firefox from source,

I omitted one minor change I made, which will probably sound a bit silly.

A self-built Firefox thinks its name is "Nightly", so, for example,

the Help menu includes About Nightly.

Somehow I found that unreasonably irritating. It's not

a nightly build; in fact, I hope to build it as seldom as possible,

ideally only after a git pull when new versions are released.

Yet Firefox shows its name in quite a few places, so you're constantly

faced with that "Nightly". After all the work to build Firefox,

why put up with that?

To find where it was coming from,

I used my recursive grep alias which skips the obj- directory plus

things like object files and metadata. This is how I define it in my .zshrc

(obviously, not all of these clauses are necessary for this Firefox

search), and then how I called it to try to find instances of

"Nightly" in the source:

```sh
gr() {
  # Prune build/VCS dirs first; grep only regular files that aren't binaries or archives.
  find . \( -name .metadata -o -name build -o -name 'obj-*' -o -name .git -o -name .svn \) -prune -o -type f \
    -not -name '*.o' -not -name '*.so' -not -name '*.a' -not -name '*.pyc' -not -name '*.jpg' -not -name '*.JPG' -not -name '*.png' -not -name '*.xcf*' -not -name '*.gmo' \
    -not -name '.intltool*' -not -name '*.po' -not -name 'po' -not -name '*.tar*' -not -name '*.zip' -print0 | xargs -0 grep "$@" /dev/null
}
```

```sh
gr Nightly | grep -v '//' | grep -v '#' | grep -v isNightly | grep -v test | grep -v task | grep -Fv .js | grep -Fv .cpp | grep -v mobile >grep.out
```

Even with all those exclusions, that still ends up printing

an enormous list. But it turns out all the important hits

are in the browser directory, so you can get away with

running it from there rather than from the top level.

I found a bunch of likely files that all had very similar

"Nightly" lines in them:

- browser/branding/nightly/branding.nsi

- browser/branding/nightly/configure.sh

- browser/branding/nightly/locales/en-US/brand.dtd

- browser/branding/nightly/locales/en-US/brand.ftl

- browser/branding/nightly/locales/en-US/brand.properties

- browser/branding/unofficial/configure.sh

- browser/branding/unofficial/locales/en-US/brand.dtd

- browser/branding/unofficial/locales/en-US/brand.properties

- browser/branding/unofficial/locales/en-US/brand.ftl

Since I didn't know which one was relevant, I changed each of them to

slightly different names, then rebuilt and checked to see which names

I actually saw while running the browser.

It turned out that browser/branding/unofficial/locales/en-US/brand.dtd is the file that controls the application name in the Help menu and in Help->About -- though the title of the About window is still "Nightly" and I haven't found what controls that.

browser/branding/unofficial/locales/en-US/brand.ftl controls the "Nightly" references in the Edit->Preferences window.

I don't know what all the others do.

There may be other instances of "Nightly" that appear elsewhere in the app, controlled by the other files, but I haven't seen them yet.

Past Firefox building articles:

Building Firefox Quantum;

Building Firefox for ALSA (non PulseAudio) Sound;

Firefox Quantum: Fixing Ctrl W (or other key bindings).

Tags: firefox, web, build, programming

[

18:23 Jul 29, 2018

More tech/web |

permalink to this entry |

]

Sat, 07 Jul 2018

A quick followup to my article on Modifying Firefox Files Inside omni.ja:

The steps for modifying the file are fairly easy, but they have to be

done a lot.

First there's the problem of Firefox updates: if a new omni.ja

is part of the update, then your changes will be overwritten, so

you'll have to make them again on the new omni.ja.

But, worse, even aside from updates they don't stay changed.

I've had Ctrl-W mysteriously revert back to its old wired-in

behavior in the middle of a Firefox session. I'm still not clear

how this happens: I speculate that something in Firefox's update mechanism

may allow parts of omni.ja to be overridden, even though I was

told by Mike Kaply, the onetime master of overlays, that they

weren't recommended any more (at least by users, though that doesn't

necessarily mean they're not used for updates).

But in any case, you can be browsing merrily along and suddenly one

of your changes doesn't work any more, even though the change is still

right there in browser/omni.ja. And the only fix I've found

so far is to download a new Firefox and re-apply the changes.

Re-applying them to the current version doesn't work -- they're already there. And it doesn't help to keep the tarball you originally downloaded around so you can re-install that; Firefox updates every week or two, so that version is guaranteed to be out of date.

All this means that it's crazy not to script the omni changes so

you can apply them easily with a single command. So here's a shell

script that takes the path to the current Firefox, unpacks

browser/omni.ja, makes a couple of simple changes and

re-packs it. I called it kitfox-patch since I used to call my personally modified Firefox build "Kitfox".

Of course, if your changes are different from mine

you'll want to edit the script to change the sed commands.

I hope eventually to figure out how it is that omni.ja changes

stop working, and whether it's an overlay or something else,

and whether there's a way to re-apply fixes without having to

download a whole new Firefox.

If I figure it out I'll report back.

Tags: firefox, web

[

15:01 Jul 07, 2018

More tech/web |

permalink to this entry |

]

Sat, 23 Jun 2018

My article on Fixing key bindings in Firefox Quantum by modifying the source tree got attention from several people who offered helpful suggestions via Twitter and email on how to accomplish the same thing using just files in omni.ja, so it could be done without rebuilding the Firefox source.

That would be vastly better, especially for people who need to

change something like key bindings or browser messages but don't

have a souped-up development machine to build the whole browser.

Brian Carpenter had several suggestions and eventually pointed me to an old post by Mike Kaply, Don’t Unpack and Repack omni.ja[r], that said there were better ways to override specific files.

Unfortunately, Mike Kaply responded that that article was written

for XUL extensions, which are now obsolete, so the article

ought to be removed. That's too bad, because it did sound like a much

nicer solution. I looked into trying it anyway, but the instructions it points to for Overriding specific files are woefully short on detail about how to map a path inside omni.ja, like chrome://package/type/original-uri.whatever, to a URL, and the single example I could find was so old that the file it referenced didn't exist at the same location any more. After a fruitless half hour or so, I took Mike's warning to heart and decided it wasn't worth wasting more time chasing something that wasn't expected to work anyway. (If someone knows otherwise, please let me know!)

But then Paul Wise offered a solution that actually worked, as an

easy to follow sequence of shell commands. (I've changed some of

them very slightly.)

```
$ tar xf ~/Tarballs/firefox-60.0.2.tar.bz2
# (This creates a "firefox" directory inside the current one.)
$ mkdir omni
$ cd omni
$ unzip -q ../firefox/browser/omni.ja
warning [../firefox-60.0.2/browser/omni.ja]: 34187320 extra bytes at beginning or within zipfile
(attempting to process anyway)
error [../firefox-60.0.2/browser/omni.ja]: reported length of central directory is
-34187320 bytes too long (Atari STZip zipfile? J.H.Holm ZIPSPLIT 1.1
zipfile?). Compensating...
zsh: exit 2 unzip -q ../firefox-60.0.2/browser/omni.ja
$ sed -i 's/or enter address/or just twiddle your thumbs/' chrome/en-US/locale/browser/browser.dtd chrome/en-US/locale/browser/browser.properties
```

I was a little put off by all the warnings unzip gave, but kept going.

Of course, you can just edit those two files rather than using sed;

but the sed command was Paul's way of being very specific about

the changes he was suggesting, which I appreciated.

Use these flags to repackage omni.ja:

```
$ zip -qr9XD ../omni.ja *
```

I had tried that before (without the q since I like to see what zip

and tar commands are doing) and hadn't succeeded. And indeed, when I

listed the two files, the new omni.ja I'd just packaged was

about a third the size of the original:

```
$ ls -l ../omni.ja ../firefox/browser/omni.ja
-rw-r--r-- 1 akkana akkana 34469045 Jun 5 12:14 ../firefox/browser/omni.ja
-rw-r--r-- 1 akkana akkana 11828315 Jun 17 10:37 ../omni.ja
```

But still, it's worth a try:

```
$ cp ../omni.ja ../firefox/browser/omni.ja
```

Then run the new Firefox. I have a spare profile I keep around for

testing, but Paul's instructions included a nifty way of running with

a brand new profile and it's definitely worth knowing:

```
$ cd ../firefox
$ MOZILLA_DISABLE_PLUGINS=1 ./firefox -safe-mode -no-remote -profile $(mktemp -d tmp-firefox-profile-XXXXXXXXXX) -offline about:blank
```

Also note the flags like safe-mode and no-remote, plus disabling

plugins -- all good ideas when testing something new.

And it worked! When I started up, I got the new message, "Search or

just twiddle your thumbs", in the URL bar.

Fixing Ctrl-W

Of course, now I had to test it with my real change. Since I like Paul's

way of using sed to specify exactly what changes to make, here's a

sed version of my Ctrl-W fix:

```
$ sed -i '/key_close/s/ reserved="true"//' chrome/browser/content/browser/browser.xul
```

Then run it. To test Ctrl-W, you need a website that includes a text

field you can type in, so -offline isn't an option unless you

happen to have a local web page that includes some text fields.

Google is an easy way to test ... and you might as well re-use that

Firefox profile you just made rather than making another one:

$ MOZILLA_DISABLE_PLUGINS=1 ./firefox -safe-mode -no-remote -profile tmp-firefox-profile-* https://google.com

I typed a few words in the Google search field that came up, deleted

them with Ctrl-W -- all was good! Thanks, Paul! And Brian, and

everybody else who sent suggestions.
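In case the sed syntax in the Ctrl-W fix is unfamiliar, here's what it does. The /key_close/ part restricts the edit to lines that mention key_close. The s/ reserved="true"// part deletes the first ' reserved="true"' on each of those lines. A rough Python equivalent makes that explicit; the function name is made up for illustration.

```python
def drop_reserved_on_key_close(text):
    """Python equivalent of: sed '/key_close/s/ reserved="true"//'"""
    out = []
    for line in text.splitlines(keepends=True):
        # The /key_close/ address: only touch lines mentioning key_close.
        if "key_close" in line:
            # s/ reserved="true"// without the g flag: first match only.
            line = line.replace(' reserved="true"', "", 1)
        out.append(line)
    return "".join(out)
```

Other keys that are marked reserved, like Ctrl-Q, keep their attribute, which is exactly why the sed address is there.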

Why are the sizes so different?

I was still puzzled by that threefold difference in size between the

omni.ja I repacked and the original that comes with Firefox.

Was something missing? Paul had the key to that too: use zipinfo

on both versions of the file to see what differed. Turned out

Mozilla's version, after a long file listing, ends with

2650 files, 33947999 bytes uncompressed, 33947999 bytes compressed: 0.0%

while my re-packaged version ends with

2650 files, 33947969 bytes uncompressed, 11307294 bytes compressed: 66.7%

So apparently Mozilla's omni.ja is using no compression at all.

It may be that that makes it start up a little faster; but Quantum

takes so long to start up that any slight difference in uncompressing

omni.ja isn't noticeable to me.
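To check an archive's compression without scrolling through zipinfo's whole listing, a small sketch using Python's standard zipfile module can total the same numbers. It's an illustration, not a reimplementation of zipinfo. Since unzip printed warnings about omni.ja, I'm assuming, not guaranteeing, that zipfile will read Mozilla's file cleanly.

```python
import zipfile


def compression_summary(path):
    """Summarize a zip archive, roughly like the last line of zipinfo."""
    with zipfile.ZipFile(path) as zf:
        infos = zf.infolist()
    uncompressed = sum(i.file_size for i in infos)
    compressed = sum(i.compress_size for i in infos)
    # zipinfo's percentage is the space saved relative to the uncompressed size.
    saved = 100.0 * (1 - compressed / uncompressed) if uncompressed else 0.0
    # Entries written with no compression at all ("stored").
    stored = sum(1 for i in infos if i.compress_type == zipfile.ZIP_STORED)
    return {"files": len(infos), "uncompressed": uncompressed,
            "compressed": compressed, "saved_pct": round(saved, 1),
            "stored_entries": stored}
```

Running compression_summary("../firefox/browser/omni.ja") should show whether every entry is stored, which is what the 0.0% figure suggests.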

I was able to run through this whole procedure on my poor slow netbook,

the one where building Firefox took something like 15 hours ... and

in a few minutes I had a working modified Firefox. And with the sed

command, this is all scriptable, so it'll be easy to re-do whenever

Firefox has a security update. Win!
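The shell version works fine, but here's one way the same job might look in Python. It copies the archive, passes one member through a text-editing function, and writes every entry back. rewrite_member is a hypothetical name. By default it writes entries uncompressed, to match what zipinfo reported for Mozilla's own omni.ja. It doesn't reproduce any other special layout Mozilla may use. It also assumes zipfile can read omni.ja in the first place.

```python
import zipfile


def rewrite_member(src, dst, member, transform, compress_type=zipfile.ZIP_STORED):
    """Copy zip archive src to dst, passing one member's text through transform.

    src and dst must be different files; transform takes and returns a str.
    """
    with zipfile.ZipFile(src) as zin, zipfile.ZipFile(dst, "w") as zout:
        for info in zin.infolist():
            data = zin.read(info.filename)
            if info.filename == member:
                data = transform(data.decode("utf-8")).encode("utf-8")
            # Every entry, edited or not, gets the chosen compression.
            zout.writestr(info.filename, data, compress_type=compress_type)
```

For the Ctrl-W change, member would be chrome/browser/content/browser/browser.xul and transform a function that removes ' reserved="true"' from the key_close line.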

Update: I have a simple shell script to do this:

Script to modify omni.ja for a custom Firefox.

Tags: firefox, web

[

20:37 Jun 23, 2018

More tech/web |

permalink to this entry |

]

Sat, 09 Jun 2018

I did the work to build my own Firefox primarily to fix a couple of serious regressions

that couldn't be fixed any other way. I'll start with the one that's

probably more common (at least, there are many people complaining

about it in many different web forums): the fact that Firefox won't

play sound on Linux machines that don't use PulseAudio.

There's a bug with a long discussion of the problem,

Bug 1345661 - PulseAudio requirement breaks Firefox on ALSA-only systems;

and the discussion in the bug links to another discussion of the Firefox/PulseAudio problem. Some comments in those

discussions suggest that some near-future version of Firefox may

restore ALSA sound for non-Pulse systems; but most of those comments

are six months old, yet it's still not fixed in the version Mozilla

is distributing now.

In theory, ALSA sound is easy to enable.

Build options in Firefox are controlled through a file called mozconfig.

Create that file at the top level of your build directory, then add to it:

ac_add_options --enable-alsa

ac_add_options --disable-pulseaudio

You can see other options with ./configure --help

Of course, like everything else in the computer world, there were

complications. When I typed mach build, I got:

Assertion failed in _parse_loader_output:
Traceback (most recent call last):
  File "/home/akkana/outsrc/gecko-dev/python/mozbuild/mozbuild/mozconfig.py", line 260, in read_mozconfig
    parsed = self._parse_loader_output(output)
  File "/home/akkana/outsrc/gecko-dev/python/mozbuild/mozbuild/mozconfig.py", line 375, in _parse_loader_output
    assert not in_variable
AssertionError

Error loading mozconfig: /home/akkana/outsrc/gecko-dev/mozconfig

Evaluation of your mozconfig produced unexpected output. This could be

triggered by a command inside your mozconfig failing or producing some warnings

or error messages. Please change your mozconfig to not error and/or to catch

errors in executed commands.

mozconfig output:

------BEGIN_ENV_BEFORE_SOURCE

... followed by a many-page dump of all my environment variables, twice.

It turned out that was coming from line 449 of

python/mozbuild/mozbuild/mozconfig.py:

# Lines with a quote not ending in a quote are multi-line.
if has_quote and not value.endswith("'"):
    in_variable = name
    current.append(value)
    continue
else:
    value = value[:-1] if has_quote else value

I'm guessing this was added because some Mozilla developer sets

a multi-line environment variable that has a quote in it but doesn't

end with a quote. Or something. Anyway, some fairly specific case.

I, on the other hand, have a different specific case: a short

environment variable that includes one or more single quotes,

and the test for their specific case breaks my build.
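To make the failure mode concrete, here's a toy Python model of that heuristic. It's a simplified sketch, not Mozilla's actual parser. The exact form PRIMES took in the environment dump isn't shown here, so the input lines below are made up. The point is that any line whose value starts with a quote but doesn't end with one opens a "multi-line" value. That value can swallow every following line and leave in_variable set at the end, which is exactly what the assert not in_variable in the traceback checks.

```python
def parse_env_dump(lines):
    """Toy model of the multi-line quote heuristic; not Mozilla's real parser.

    Returns (env, in_variable); a non-None in_variable at the end means a
    "multi-line" value was never closed.
    """
    env = {}
    in_variable = None
    current = []
    for line in lines:
        if in_variable:
            # Inside a multi-line value: collect lines until one ends in a quote.
            if line.endswith("'"):
                current.append(line[:-1])
                env[in_variable] = "\n".join(current)
                in_variable, current = None, []
            else:
                current.append(line)
            continue
        name, _, value = line.partition("=")
        has_quote = value.startswith("'")
        if has_quote:
            value = value[1:]
        # Lines with a quote not ending in a quote are multi-line.
        if has_quote and not value.endswith("'"):
            in_variable, current = name, [value]
            continue
        env[name] = value[:-1] if has_quote else value
    return env, in_variable
```

Feeding it ["PRIMES='x", "HOME=/home/me"] leaves in_variable set to "PRIMES", with HOME swallowed into PRIMES's value and never recorded as a variable of its own.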

(In case you're curious why I have quotes in an environment variable:

The prompt-setting code in my .zshrc includes a variable called

PRIMES. In a login shell, this is set to the empty string,

but in subshells, I add ' for each level of shell under the login shell.

So my regular prompt might be (hostname)-, but if I run a

subshell to test something, the prompt will be (hostname')-,

a subshell inside that will be (hostname'')-, and so on.

It's a reminder that I'm still in a subshell and need to exit when

I'm done testing. In theory, I could do that with SHLVL, but SHLVL

doesn't care about login shells, so my normal shells inside X are

all SHLVL=2 while shells on a console or from an ssh are

SHLVL=1, so if I used SHLVL I'd have to have some special case

code to deal with that.

Also, of course I could use a character other than a single-quote.

But in the thirty or so years I've used this, Firefox is the first

program that's ever had a problem with it. And apparently I'm not the

first one to have a problem with this:

bug 1455065

was apparently someone else with the same problem. Maybe that will show

up in the release branch eventually.)

Anyway, disabling that line fixed the problem:

# Lines with a quote not ending in a quote are multi-line.

if False and has_quote and not value.endswith("'"):

and after that,

mach build succeeded, I built a new

Firefox, and lo and behold! I can play sound in YouTube videos and

on Xeno-Canto again, without needing an additional browser.

Tags: web, firefox, linux

[

16:49 Jun 09, 2018

More tech/web |

permalink to this entry |

]

Thu, 31 May 2018

For the last year or so the Firefox development team has been making

life ever harder for users. First they broke all the old extensions

that were based on XUL and XBL, so a lot of customizations no longer

worked. Then they made PulseAudio mandatory on Linux (bug 1345661), so on systems

like mine that don't run Pulse, there's no way to get sound in

a web page. Forget YouTube or XenoCanto unless you keep another

browser around for that purpose.

For those reasons I'd been avoiding the Firefox upgrade, sticking to

Debian's firefox-esr ("Extended Support Release"). But when

Debian updated firefox-esr to Firefox 56 ESR late last year, performance

became unusable. Like half a minute between when you hit Page Down

and when the page actually scrolls. It was time to switch browsers.

Pale Moon

I'd been hearing about the Firefox variant Pale Moon. It's a fork of

an older Firefox, supposedly with an emphasis on openness and configurability.

I installed the Debian palemoon package. Performance was fine,

similar to Firefox before the tragic firefox-56. It was missing a few

things -- no built-in PDF viewer or Reader mode -- but I don't use

Reader mode that often, and the built-in PDF viewer is an annoyance at

least as often as it's a help. (In Firefox it's fairly random about when

it kicks in anyway, so I'm never sure whether I'll get the PDF viewer

or a Save-as prompt on any given PDF link).

For form and password autofill, for some reason Pale Moon doesn't fill

out fields until you type the first letter. For instance, if I had an

account with name "myname" and a stored password, when I loaded the

page, both fields would be empty, as if there's nothing stored for that

page. But typing an 'm' in the username field makes both username and

password fields fill in. This isn't something Firefox ever did and I

don't particularly like it, but it isn't a major problem.

Then there were some minor irritations, like the fact that profiles

were stored in a folder named ~/.moonchild\ productions/ --

super long so it messed up directory listings, and with a space in the

middle. PaleMoon was also very insistent about using new tabs for

everything, including URLs launched from other programs -- there

doesn't seem to be any way to get it to open URLs in the active tab.

I used it as my main browser for several months, and it basically worked.

But the irritations started to get to me, and I started considering

other options. The final kicker came when I saw

Pale Moon

bug 86, in which, as far as I can tell, someone working on

PaleMoon for OpenBSD tries to use system libraries instead of

PaleMoon's patched libraries, and is attacked for it in the bug.

Reading the exchange made me want to avoid PaleMoon for two reasons.

First, the rudeness: a toxic community that doesn't treat contributors

well isn't likely to last long or to have the resources to keep on top

of bug and security fixes. Second, the technical question: if Pale

Moon's code is so quirky that it can't use standard system libraries

and needs a bunch of custom-patched libraries, what does that say

about how maintainable it will be in the long term?

Firefox Quantum

Much has been made in the technical press of the latest Firefox,

called "Quantum", and its supposed speed. I was a bit dubious of that:

it's easy to make your program seem fast after you force everybody

into a few years of working with a program that's degraded its

performance by an order of magnitude, like Firefox had. After

Firefox 56, anything would seem fast.

Still, maybe it would at least be fast enough to be usable. But I had

trepidations too. What about all those extensions that don't work any

more? What about sound not working? Could I live with that?

Debian has no current firefox package, so I downloaded the tarball

from mozilla.org, unpacked it,

made a new firefox profile and ran it.

Initial startup performance is terrible -- it takes forever to bring

up the first window, and I often get a "Firefox seems slow to start up"

message at the bottom of the screen, with a link to a page of a bunch

of completely irrelevant hints.

Still, I typically only start Firefox once a day. Once it's up,

performance is a bit laggy but a lot better than firefox-esr 56 was,

certainly usable.

I was able to find replacements for most of the really important

extensions (the ones that control things like cookies and javascript).

But sound, as predicted, didn't work. And there were several other,

worse regressions from older Firefox versions.

As it turned out, the only way to make Firefox Quantum usable for me

was to build a custom version where I could fix the regressions.

To keep articles from being way too long, I'll write about all those

issues separately:

how to build Firefox,

how to fix broken key bindings,

and how to fix the PulseAudio problem.

Tags: web, firefox

[

16:07 May 31, 2018

More tech/web |

permalink to this entry |

]

Sun, 27 May 2018

After I'd

switched

from the Google Maps API to Leaflet to get my trail map

working on my own website,

the next step was to move it to the Nature Center's website

to replace the broken Google Maps version.

PEEC, unfortunately for me, uses Wordpress (on the theory that this

makes it easier for volunteers and non-technical staff to add

content). I am not a Wordpress person at all; to me, systems

like Wordpress and Drupal mostly add obstacles that mean standard HTML

doesn't work right and has to be modified in nonstandard ways.

This was a case in point.

The Leaflet library for displaying maps relies on calling an

initialization function when the body of the page is loaded:

<body onLoad="javascript:init_trailmap();">

But in a Wordpress website, the <body> tag comes

from Wordpress, so you can't edit it to add an onload.

A web search found lots of people wanting body onloads, and

they had found all sorts of elaborate ruses to get around the problem.

Most of the solutions seemed like they involved editing

site-wide Wordpress files to add special case behavior depending

on the page name. That sounded brittle, especially on a site where

I'm not the Wordpress administrator: would I have to figure this out

all over again every time Wordpress got upgraded?

But I found a trick in a Stack Overflow discussion,

Adding onload to body,

that included a tricky bit of code. There's a JavaScript function to add

an onload to the <body> tag; then that JavaScript is wrapped inside a

PHP function. Then, if I'm reading it correctly, the PHP function registers

itself with Wordpress so it will be called when the Wordpress footer is

added; at that point, the PHP will run, which will add the JavaScript

to the body tag in time for the onload event to call the JavaScript. Yikes!

But it worked.

Here's what I ended up with, in the PHP page that Wordpress was

already calling for the page:

<?php

/* Wordpress doesn't give you access to the <body> tag to add a call

* to init_trailmap(). This is a workaround to dynamically add that tag.

*/

function add_onload() {

?>

<script type="text/javascript">

document.getElementsByTagName('body')[0].onload = init_trailmap;

</script>

<?php

}

add_action( 'wp_footer', 'add_onload' );

?>

Complicated, but it's a nice trick; and it let us switch to Leaflet

and get the

PEEC

interactive Los Alamos area trail map

working again.

Tags: web, programming, javascript, php, wordpress, mapping

[

15:49 May 27, 2018

More tech/web |

permalink to this entry |

]

Thu, 24 May 2018

A while ago I wrote an

interactive

trail map page for the PEEC nature center website.

At the time, I wanted to use an open library, like OpenLayers or Leaflet;

but there were no good sources of satellite/aerial map tiles at the

time. The only one I found didn't work because they had a big blank

area anywhere near LANL -- maybe because of the restricted

airspace around the Lab. Anyway, I figured people would want a

satellite option, so I used Google Maps instead despite its much

more frustrating API.

This week we've been working on converting the website to https.

Most things went surprisingly smoothly (though we had a lot more

absolute URLs in our pages and databases than we'd realized).

But when we got through, I discovered the trail map was broken.

I'm still not clear why, but somehow the change from http to https

made Google's API stop working.

In trying to fix the problem, I discovered that Google's map API

may soon cease to be free:

New pricing and product changes will go into effect starting June 11,

2018. For more information, check out the

Guide for

Existing Users.

That has a button for "Transition Tool" which, when you click it,

won't tell you anything about the new pricing structure until you've

already set up a billing account. Um ... no thanks, Google.

Googling for google maps api billing led to a page headed

"Pricing

that scales to fit your needs", which has an elaborate pricing

structure listing a whole bunch of variants (I have no idea which

of these I was using), of which the first $200/month is free.

But since they insist on setting up a billing account, I'd probably

have to give them a credit card number -- which one? My personal

credit card, for a page that isn't even on my site? Does the nonprofit

nature center even have a credit card? How many of these API calls is

their site likely to get in a month, and what are the chances of going

over the limit?

It all rubbed me the wrong way, especially in the context

of "Your trail maps page that real people actually use has

broken without warning, and will be held hostage until you give us a

credit card number". This is what one gets for using a supposedly free

(as in beer) library that's not Free open source software.

So I replaced Google with the excellent open source

Leaflet library, which, as a

bonus, has much better documentation than Google Maps. (It's not that

Google's documentation is poorly written; it's that they keep changing

their APIs, but there's no way to tell the dozen or so different APIs

apart because they're all just called "Maps", so when you search for

documentation you're almost guaranteed to get something that stopped

working six years ago -- but the documentation is still there making

it look like it's still valid.)

And I was happy to discover that, in the time since I originally set

up the trailmap page, some open providers of aerial/satellite map

tiles have appeared. So we can use open source and have a

satellite view.

Our trail map is back online with Leaflet, and with any luck,

this time it will keep working.

PEEC

Los Alamos Area Trail Map.

Tags: mapping, web, programming, javascript

[

16:13 May 24, 2018

More programming |

permalink to this entry |

]

Tue, 22 May 2018

Humble Bundle has a great

bundle going right now (for another 15 minutes -- sorry, I meant to post

this earlier) on books by Nebula-winning science fiction authors,

including some old favorites of mine, and a few I'd been meaning to read.

I like Humble Bundle a lot, but one thing about them I don't like:

they make it very difficult to download books, insisting that you click

on every single link (and then do whatever "Download this link / yes, really

download, to this directory" dance your browser insists on) rather than

offering a sane option like a tarball or zip file. I guess part of their

business model includes wanting their customers to get RSI. This has

apparently been a problem for quite some time; a web search found lots

of discussions of ways of automating the downloads, most of which

apparently no longer work (none of the ones I tried did).

But a wizard friend on IRC quickly came up with a solution:

some javascript you can paste into Firefox's console. She started

with a quickie function that fetched all but a few of the files, but

then modified it for better error checking and the ability to get

different formats.

In Firefox, open the web console (Tools/Web Developer/Web Console)

and paste this in the single-line javascript text field at the bottom.

// How many milliseconds to delay between downloads.
var delay = 1000;
// whether to use window.location or window.open
// window.open is more convenient, but may be popup-blocked
var window_open = false;
// the filetypes to look for, in order of preference.
// Make sure your browser won't try to preview these filetypes.
var filetypes = ['epub', 'mobi', 'pdf'];

var downloads = document.getElementsByClassName('download-buttons');
var i = 0;
var success = 0;

function download() {
    var children = downloads[i].children;
    var hrefs = {};
    for (var j = 0; j < children.length; j++) {
        var link = children[j].getElementsByClassName('a')[0];
        // Skip buttons without a link rather than stopping with an error.
        if (!link) continue;
        var href = link.href;
        for (var k = 0; k < filetypes.length; k++) {
            if (href.includes(filetypes[k])) {
                hrefs[filetypes[k]] = href;
                console.log('Found ' + filetypes[k] + ': ' + href);
            }
        }
    }
    // Pick the first available format in order of preference.
    var href = undefined;
    for (var k = 0; k < filetypes.length; k++) {
        if (hrefs[filetypes[k]] != undefined) {
            href = hrefs[filetypes[k]];
            break;
        }
    }
    if (href != undefined) {
        console.log('Downloading: ' + href);
        if (window_open) {
            window.open(href);
        } else {
            window.location = href;
        }
        success++;
    }
    i++;
    console.log(i + '/' + downloads.length + '; ' + success + ' successes.');
    // Schedule the next book after the delay.
    if (i < downloads.length) {
        window.setTimeout(download, delay);
    }
}

download();

If you have "Always ask where to save files" checked in

Preferences/General, you'll still get a download dialog for each book

(but at least you don't have to click; you can hit return for each

one). Even if this is your preference, you might want to consider

changing it before downloading a bunch of Humble books.

Anyway, pretty cool! Takes the sting out of bundles, especially big

ones like this 42-book collection.

Tags: ebook, programming, web, firefox

[

17:49 May 22, 2018

More tech/web |

permalink to this entry |

]

Fri, 11 May 2018

I was working on a weather project to make animated maps of the

jet stream. Getting and plotting wind data is a much longer article

(coming soon), but once I had all the images plotted, I wanted to

combine them all into a time-lapse video showing how the jet stream moves.

Like most projects, it's simple once you find the right recipe.

If your images are named outdir/filename00.png, outdir/filename01.png,

outdir/filename02.png and so on,

you can turn them into an MPEG4 video with ffmpeg:

ffmpeg -i outdir/filename%02d.png -filter:v "setpts=6.0*PTS" -pix_fmt yuv420p jetstream.mp4

%02d, for non-programmers, just means a 2-digit decimal integer

with leading zeros. If the filenames just use 1, 2, 3, ... 10, 11 without

leading zeros, use %d instead; if they have three digits, use %03d,

and so on.

Update:

If your first photo isn't numbered 00, you can set a

-start_number, but it must come before the -i and

filename template. For instance:

ffmpeg -start_number 17 -i outdir/filename%02d.png -filter:v "setpts=6.0*PTS" -pix_fmt yuv420p jetstream.mp4

That "setpts=6.0*PTS" controls the speed of the playback,

by adding or removing frames.

PTS stands for "Presentation TimeStamps",

which apparently is a measure of how far along a frame is in the file;

setpts=6.0*PTS means for each frame, figure out how far

it would have been in the file (PTS) and multiply that by 6. So if

a frame would normally have been at timestamp 10 seconds, now it will be at

60 seconds, and the video will be six times longer and six times slower.

And yes, you can also use values less than one to speed a video up.

You can also change a video's playback speed by

changing the

frame rate, either with the -r option, e.g. -r 30,

or with the fps filter, -filter:v fps=30.

The default frame rate is 25.
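The timing arithmetic is easy to check by hand, but here's a sketch of it. It assumes each input image becomes one frame at the given frame rate (25 by default) and that setpts simply multiplies every timestamp by the factor. The function names are made up.

```python
def frame_time(index, fps=25.0, pts_factor=1.0):
    """Timestamp in seconds of frame number index after setpts=pts_factor*PTS."""
    return index / fps * pts_factor


def playback_seconds(n_frames, fps=25.0, pts_factor=1.0):
    """Approximate length of n_frames images played at fps, then stretched."""
    return n_frames / fps * pts_factor
```

Thirty images at 25 frames per second last 1.2 seconds. With setpts=6.0*PTS they last about 7.2 seconds, and frame 250, originally at the 10-second mark, lands at 60 seconds.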

You can examine values like the frame rate, number of frames and duration

of a video file with:

ffprobe -select_streams v -show_streams filename

or with the mediainfo program (not part of ffmpeg).
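If you want those numbers in a script, ffprobe can print JSON (-of json), which Python parses easily. This is a sketch. It reads the r_frame_rate, nb_frames and duration fields that ffprobe reports for video streams. nb_frames and duration aren't always present, so the code falls back to 0 for them.

```python
import json
import subprocess
from fractions import Fraction


def video_stream_info(ffprobe_json):
    """Pull frame rate, frame count and duration from ffprobe's JSON output."""
    stream = json.loads(ffprobe_json)["streams"][0]
    return {
        # r_frame_rate is a ratio string such as "25/1".
        "fps": float(Fraction(stream["r_frame_rate"])),
        "frames": int(stream.get("nb_frames", 0)),
        "duration": float(stream.get("duration", 0.0)),
    }


def probe(filename):
    """Run ffprobe on filename's first video stream and parse the result."""
    out = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "v",
         "-show_streams", "-of", "json", filename],
        capture_output=True, text=True, check=True).stdout
    return video_stream_info(out)
```

For example, probe("jetstream.mp4") returns a small dict you can compare against what you expected from the setpts factor.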

The -pix_fmt yuv420p turned out to be the tricky part.

The recipes I found online didn't include that part, but without it,

Firefox claims "Video can't be played because the file is corrupt",

even though most other browsers can play it just fine.

If you open Firefox's web console and reload, it offers the additional

information

"Details: mozilla::SupportChecker::AddMediaFormatChecker(const mozilla::TrackInfo&)::<lambda()>: Decoder may not have the capability to handle the requested video format with YUV444 chroma subsampling.":

Adding -pix_fmt yuv420p cured the problem and made the

video compatible with Firefox, though at first I had problems with

ffmpeg complaining "height not divisible by 2 (1980x1113)" (even though

the height of the images was in fact divisible by 2).

I'm not sure what was wrong; later ffmpeg stopped giving me that error

message and converted the video. It may depend on where in the ffmpeg

command you put the pix_fmt flag or what other flags are

present. ffmpeg arguments are a mystery to me.
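One thing worth ruling out is an odd image dimension. yuv420p stores color at half resolution in both directions, so x264 wants an even width and height, and the error message itself reports a height of 1113. A quick sanity check is to read each PNG's size straight from its header. Here's a standard-library sketch; png_size and odd_sized are made-up names, and it won't tell you where an odd height came from if the source images are fine.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path):
    """Return (width, height) from a PNG file's IHDR chunk."""
    with open(path, "rb") as f:
        header = f.read(24)
    # 8-byte signature, 4-byte chunk length, then the "IHDR" chunk type.
    if header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} doesn't look like a PNG")
    # Width and height are big-endian 32-bit integers right after "IHDR".
    return struct.unpack(">II", header[16:24])


def odd_sized(paths):
    """List the images whose width or height is odd (trouble for yuv420p)."""
    return [p for p in paths if any(n % 2 for n in png_size(p))]
```

Something like odd_sized(sorted(glob.glob("outdir/*.png"))) lists any frames that would trip the divisible-by-2 check.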

Of course, if you're only making something to be uploaded to YouTube,

the Firefox limitation probably doesn't matter and you may not need

the -pix_fmt yuv420p argument.
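Putting the pieces together, a small Python sketch can find the first frame number and assemble the ffmpeg command, with -start_number placed before -i. The helper names and the "filename" prefix are hypothetical. The sketch assumes every frame uses the same zero-padded width. It only builds the argument list; you'd run it with subprocess.run(args, check=True).

```python
import re
from pathlib import Path


def first_frame_number(outdir, prefix="filename", suffix=".png"):
    """Smallest frame number among files named like filename17.png in outdir."""
    pattern = re.compile(re.escape(prefix) + r"(\d+)" + re.escape(suffix) + "$")
    numbers = []
    for path in Path(outdir).iterdir():
        m = pattern.match(path.name)
        if m:
            numbers.append(int(m.group(1)))
    return min(numbers) if numbers else None


def ffmpeg_args(outdir, prefix="filename", digits=2, slowdown=6.0,
                output="jetstream.mp4"):
    """Assemble the ffmpeg command discussed above as an argument list."""
    args = ["ffmpeg"]
    start = first_frame_number(outdir, prefix)
    if start:  # only needed when the sequence doesn't start at 0
        # -start_number applies to the input, so it must come before -i.
        args += ["-start_number", str(start)]
    args += ["-i", f"{outdir}/{prefix}%0{digits}d.png",
             "-filter:v", f"setpts={slowdown}*PTS",
             "-pix_fmt", "yuv420p", output]
    return args
```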

Animated GIFs

Making an animated GIF is easier. You can use ImageMagick's convert:

convert -delay 30 -loop 0 *.png jetstream.gif

The GIF will be a lot larger, though. For my initial test of thirty

1000 x 500 images, the MP4 was 760K while the GIF was 4.2M.
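ImageMagick's -delay is measured in ticks of 1/100 second by default, so -delay 30 means 0.3 seconds per frame. A tiny sketch of the arithmetic, with a made-up function name:

```python
def gif_seconds(n_frames, delay_ticks, ticks_per_second=100):
    """Play time of one loop of an animated GIF made with convert -delay."""
    # -delay counts ticks; ImageMagick's default is 100 ticks per second.
    return n_frames * delay_ticks / ticks_per_second
```

So thirty frames at -delay 30 play for 9 seconds per loop.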

Tags: web, video, time-lapse, firefox

[

09:59 May 11, 2018

More linux |

permalink to this entry |

]

Thu, 01 Mar 2018

I updated my Debian Testing system via apt-get upgrade,

as one does during the normal course of running a Debian system.

The next time I went to a locally hosted website, I discovered PHP

didn't work. One of my websites gave an error, due to a directive

in .htaccess; another one presented pages that were full of PHP code

interspersed with the HTML of the page. Ick!

In theory, Debian updates aren't supposed to change configuration files

without asking first, but in practice, silent and unexpected Apache

bustage is fairly common. But for this one, I couldn't find anything

in a web search, so maybe this will help.

The problem turned out to be that /etc/apache2/mods-available/

includes four files:

$ ls /etc/apache2/mods-available/*php*

/etc/apache2/mods-available/php7.0.conf

/etc/apache2/mods-available/php7.0.load

/etc/apache2/mods-available/php7.2.conf

/etc/apache2/mods-available/php7.2.load

The appropriate files are supposed to be linked from there into

/etc/apache2/mods-enabled. Presumably, I previously had a link

to ../mods-available/php7.0.* (or perhaps 7.1?); the upgrade to

PHP 7.2 must have removed that existing link without replacing it with

a link to the new ../mods-available/php7.2.*.

The solution is to restore those links, either with ln -s

or with the approved apache2 commands (as root, of course):

# a2enmod php7.2

# systemctl restart apache2
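To spot this kind of breakage before a web page does, a short Python sketch can compare the two directories. It assumes Debian's usual /etc/apache2 layout. php_module_status is a made-up name. The sketch only reports; the fix is still a2enmod.

```python
from pathlib import Path


def php_module_status(apache_dir="/etc/apache2"):
    """Map each available php*.load module to whether mods-enabled has it."""
    base = Path(apache_dir)
    # Names present in mods-enabled (normally symlinks into mods-available).
    enabled = {p.name for p in (base / "mods-enabled").glob("php*.load")}
    return {p.stem: p.name in enabled
            for p in sorted((base / "mods-available").glob("php*.load"))}
```

A module reported as False is installed but not enabled. If none of them shows True, PHP is off, as it was after this upgrade.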

Whew! Easy fix, but it took a while to realize what was broken, and

it would have been nice if it hadn't broken in the first place.

Why is the link version-specific anyway? Why isn't there a file called

/etc/apache2/mods-available/php.* for the latest version?

Does PHP really change enough between minor releases to break websites?

Doesn't it break a website more to disable PHP entirely than to swap in

a newer version of it?

Tags: linux, debian, apache, web

[

10:31 Mar 01, 2018

More linux |

permalink to this entry |

]

Wed, 04 Jan 2017

A couple of days ago I blogged about using

Firefox's

"Delete Node" to make web pages more readable.

In a

subsequent Twitter

discussion someone pointed out that if the goal is to make a web

page's content clearer, Firefox's relatively new "Reader Mode" might be

a better way.

I knew about Reader Mode but hadn't used it. It only shows up on some

pages, as a little "open book" icon to the right of the URLbar just

left of the Refresh/Stop button. It did show up on the Pogue Yahoo article;

but when I clicked it, I just got a big blank page with an icon of a

circle with a horizontal dash; no text.

It turns out that to see Reader Mode content in noscript, you must

explicitly enable javascript from about:reader.

There are some reasons it's not automatically whitelisted:

see discussions in

bug 1158071

and

bug 1166455

-- so enable it at your own risk.

But it's nice to be able to use Reader Mode, and I'm glad the Twitter

discussion spurred me to figure out why it wasn't working.

Tags: firefox, web

[

11:37 Jan 04, 2017

More tech/web |

permalink to this entry |

]

Mon, 02 Jan 2017

It's trendy among web designers today -- the kind who care more about

showing ads than about the people reading their pages -- to use fixed

banner elements that hide part of the page. In other words, you have

a header, some content, and maybe a footer; and when you scroll the

content to get to the next page, the header and footer stay in place,

meaning that you can only read the few lines sandwiched in between them.

But at least you can see the name of the site no matter how far you

scroll down in the article! Wouldn't want to forget the site name!

Worse, many of these sites don't scroll properly. If you Page Down,

the content moves a full page up, which means that the top of the new

page is now hidden under that fixed banner and you have to scroll back

up a few lines to continue reading where you left off.

David Pogue wrote about that problem recently and it got a lot of play

when Slashdot picked it up:

These 18 big websites fail the space-bar scrolling test.

It's a little too bad he concentrated on the spacebar. Certainly it's

good to point out that hitting the spacebar scrolls down -- I was

flabbergasted to read the Slashdot discussion and discover that lots

of people didn't already know that, since it's been my most common way

of paging since browsers were invented. (Shift-space does a Page Up.)

But the Slashdot discussion then veered off into a chorus of "I've

never used the spacebar to scroll so why should anyone else care?",

when the issue has nothing to do with the spacebar: the issue is

that Page Down doesn't work right, whichever key you use to

trigger that page down.

But never mind that. Fixed headers that don't scroll are bad even

if the content scrolls the right amount, because it wastes precious

vertical screen space on useless cruft you don't need.

And I'm here to tell you that you can get rid of those annoying fixed

headers, at least in Firefox.

![[Article with intrusive Yahoo headers]](http://shallowsky.com/blog/images/delete-node/pogue-article.jpg)

Let's take Pogue's article itself, since Yahoo is a perfect example of

annoying content that covers the page and doesn't go away. First

there's that enormous header -- the bottom row of menus ("Tech Home" and

so forth) disappears once you scroll, but the rest stay there forever.

Worse, there's that annoying popup on the bottom right ("Privacy | Terms"

etc.) which blocks content, and although Yahoo! scrolls the right

amount to account for the header, it doesn't account for that privacy

bar, which continues to block most of the last line of every page.

The first step is to call up the DOM Inspector. Right-click on the

thing you want to get rid of and choose Inspect Element:

![[Right-click menu with Inspect Element]](http://shallowsky.com/blog/images/delete-node/inspect-menu.jpg)

That brings up the DOM Inspector window, which looks like this:

![[DOM Inspector]](http://shallowsky.com/blog/images/delete-node/inspector.jpg)

The upper left area shows the hierarchical structure of the web page.

Don't Panic! You don't have to know HTML or understand any of

this for this technique to work.

Hover your mouse over the items in the hierarchy. Notice that as you

hover, different parts of the web page are highlighted in translucent blue.

Generally, whatever element you started on will be a small part of the

header you're trying to eliminate. Move up one line, to the element's

parent; you may see that a bigger part of the header is highlighted.

Move up again, and keep moving up, one line at a time, until the whole

header is highlighted, as in the screenshot. There's also a dark grey

window telling you something about the HTML, if you're interested;

if you're not, don't worry about it.

Eventually you'll move up too far, and some other part of the page,

or the whole page, will be highlighted. You need to find the element

that makes the whole header blue, but nothing else.

Once you've found that element, right-click on it to get a context menu,

and look for Delete Node (near the bottom of the menu).

Clicking on that will delete the header from the page.

Repeat for any other part of the page you want to remove, like that

annoying bar at the bottom right. And you're left with a nice, readable

page, which will scroll properly and let you read every line,

and will show you more text per page so you don't have to scroll as often.

![[Article with the Yahoo headers removed]](http://shallowsky.com/blog/images/delete-node/pogue-fixed.jpg)

It's a useful trick.

You can also use Inspect/Delete Node for many of those popups that

cover the screen telling you "subscribe to our content!" It's

especially handy if you like to browse with NoScript, so you

can't dismiss those popups by clicking on an X.

So happy reading!

Addendum on Spacebars

By the way, in case you weren't aware that the spacebar did a page

down, here's another tip that might come in useful: the spacebar also

advances to the next slide in just about every presentation program,

from PowerPoint to LibreOffice to most PDF viewers. I'm amazed at how

often I've seen presenters squinting with a flashlight at the keyboard

trying to find the right-arrow or down-arrow or page-down or whatever

key they're looking for. These are all ways of advancing to the next

slide, but they're all much harder to find than that great big

spacebar at the bottom of the keyboard.

Tags: web, firefox

[

16:23 Jan 02, 2017

More tech/web |


]

Thu, 09 Jul 2015

For a year or so, I've been appending "output=classic" to any Google

Maps URL. But Google disabled Classic mode last month.

(There have been a few other ways to get classic Google maps back, but Google is gradually disabling them one by one.)
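The "output=classic" trick was just a query parameter, and appending one correctly (whether or not the URL already contains a "?") is easy to fumble by hand. Here is a minimal Python sketch using urllib.parse; the function name is hypothetical, the example URL is made up, and since Google has disabled classic mode the parameter itself no longer does anything.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_query_param(url, key, value):
    """Return url with key=value in its query string, replacing any old value."""
    parts = urlsplit(url)
    params = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
              if k != key]
    params.append((key, value))
    return urlunsplit(parts._replace(query=urlencode(params)))


print(with_query_param("https://www.google.com/maps?q=Los+Alamos", "output", "classic"))
# https://www.google.com/maps?q=Los+Alamos&output=classic
```

Because any existing value for the key is dropped first, running the helper twice on the same URL gives the same result.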

I have basically three problems with the new maps:

- If you search for something, the screen is taken up by a huge

box showing you what you searched for; if you click the "x" to dismiss

the huge box so you can see the map underneath, the box disappears but

so does the pin showing your search target.

- A big swath at the bottom of the screen is taken up by a filmstrip

of photos from the location, and it's an extra click to dismiss that.

- Moving or zooming the map is very, very slow: it relies on OpenGL

support in the browser, which doesn't work well on Linux in general,

or on a lot of graphics cards on any platform.

Now that I don't have the "classic" option any more, I've had to find

ways around the problems -- either that, or switch to Bing maps.

Here's how to make the maps usable in Firefox.

First, for the slowness: the cure is to disable WebGL in Firefox.

Go to about:config and search for webgl.

Then double-click on the line for webgl.disabled to set it to

true.
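If you'd rather not click through about:config on every machine, Firefox also reads a user.js file from the profile directory at startup and applies the user_pref() lines in it. The sketch below appends the WebGL pref there. The helper name is hypothetical, you supply the profile path yourself (about:support shows it), and Firefox should be closed while you edit the file. A pref set in user.js is reapplied on every startup, so it will override later changes you make in about:config.

```python
import json
import tempfile
from pathlib import Path


def set_user_pref(profile_dir, name, value):
    """Append user_pref(name, value) to <profile_dir>/user.js.

    Returns False, and changes nothing, if the file already sets that pref.
    """
    user_js = Path(profile_dir) / "user.js"
    text = user_js.read_text() if user_js.exists() else ""
    prefix = f"user_pref({json.dumps(name)},"
    if prefix in text:
        return False
    if text and not text.endswith("\n"):
        text += "\n"
    # json.dumps gives JavaScript-compatible literals: true/false, numbers, "strings".
    user_js.write_text(text + f"{prefix} {json.dumps(value)});\n")
    return True


demo = Path(tempfile.mkdtemp())  # stand-in for a real profile directory
print(set_user_pref(demo, "webgl.disabled", True))  # True
print((demo / "user.js").read_text(), end="")  # user_pref("webgl.disabled", true);
```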

For the other two, you can add userContent lines to tell

Firefox to hide those boxes.

Locate your Firefox profile.

Inside it, edit chrome/userContent.css (create that file

if it doesn't already exist), and add the following two lines:

div#cards { display: none !important; }

div#viewcard { display: none !important; }

Voilà! The boxes that used to hide the map are now invisible.

Of course, that also means you can't use anything inside them; but I

never found them useful for anything anyway.
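Both steps, finding the profile and adding the rules, can also be scripted. Below is a minimal Python sketch, assuming the usual Linux layout where profiles are listed in ~/.mozilla/firefox/profiles.ini. The helper names are hypothetical, and the script picks the profile marked Default=1 (or the first one listed), which may not be the one you actually use. Firefox 69 and later also ignore userContent.css unless toolkit.legacyUserProfileCustomizations.stylesheets is set to true in about:config.

```python
import configparser
import tempfile
from pathlib import Path

MAPS_RULES = [
    "div#cards { display: none !important; }",
    "div#viewcard { display: none !important; }",
]


def default_profile(firefox_dir=None):
    """Return the profile directory marked Default=1 in profiles.ini (else the first)."""
    firefox_dir = Path(firefox_dir) if firefox_dir else Path.home() / ".mozilla" / "firefox"
    ini = configparser.ConfigParser(interpolation=None)
    ini.read(firefox_dir / "profiles.ini")
    profiles = [ini[s] for s in ini.sections() if s.startswith("Profile")]
    if not profiles:
        raise FileNotFoundError(f"no profiles listed under {firefox_dir}")
    chosen = next((p for p in profiles if p.get("Default") == "1"), profiles[0])
    path = Path(chosen["Path"])
    # IsRelative=1 means Path is relative to the directory holding profiles.ini.
    return firefox_dir / path if chosen.get("IsRelative", "1") == "1" else path


def add_user_content(profile_dir, rules=MAPS_RULES):
    """Append any missing rules to <profile>/chrome/userContent.css; return what was added."""
    css = Path(profile_dir) / "chrome" / "userContent.css"
    css.parent.mkdir(parents=True, exist_ok=True)
    text = css.read_text() if css.exists() else ""
    missing = [rule for rule in rules if rule not in text]
    if missing:
        if text and not text.endswith("\n"):
            text += "\n"
        css.write_text(text + "\n".join(missing) + "\n")
    return missing


# Demo against a throwaway directory shaped like ~/.mozilla/firefox.
demo = Path(tempfile.mkdtemp())
(demo / "profiles.ini").write_text(
    "[Profile0]\nName=default\nIsRelative=1\nPath=abcd1234.default\nDefault=1\n"
)
profile = default_profile(demo)
print(profile.name, add_user_content(profile))
```

Running it a second time adds nothing, since rules already present in the file are skipped.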

Tags: web, tech, mapping, GIS, firefox

[

10:54 Jul 09, 2015

More tech/web |


]

Tue, 23 Jun 2015

Although Ant builds have made Android development much easier, I've long been curious

about the cross-platform phone development apps: you write a simple

app in some common language, like HTML or Python, then run something

that can turn it into apps on multiple mobile platforms, like

Android, iOS, BlackBerry, Windows Phone, Ubuntu OS, Firefox OS or Tizen.

Last week I tried two of the many cross-platform mobile frameworks:

Kivy and PhoneGap.

Kivy lets you develop in Python, which sounded like a big plus. I went

to a Kivy talk at PyCon a year ago and it looked pretty interesting.

PhoneGap takes web apps written in HTML, CSS and JavaScript and

packages them like native applications. PhoneGap seems much more

popular, but I wanted to see how it and Kivy compared.

Both projects are free, open source software.

If you want to skip the gory details, jump ahead to the

summary: how do Kivy and PhoneGap compare?

PhoneGap

I tried PhoneGap first.

It's based on Node.js, so the first step was installing that.

Debian has packages for nodejs, so

apt-get install nodejs npm nodejs-legacy did the trick.

You need nodejs-legacy to get the "node" command, which you'll

need for installing PhoneGap.

Now comes a confusing part. You'll be using npm to install ...

something. But depending on which tutorial you're following, it may

tell you to install and use either phonegap or cordova.

Cordova is an Apache project which is intertwined with PhoneGap. After

reading all their FAQs on the subject, I'm as confused as ever about

where PhoneGap ends and Cordova begins, which one is newer, which one

is more open-source, whether I should say I'm developing in PhoneGap

or Cordova, or even whether I should be asking questions on the

#phonegap or #cordova channels on Freenode. (The one question I had,

which came up later in the process, I asked on #phonegap and got a

helpful answer very quickly.) Neither one is packaged in Debian.

After some searching for a good, comprehensive tutorial, I ended up on the

Cordova tutorial rather than a PhoneGap one. So I typed:

sudo npm install -g cordova

Once it's installed, you can create a new app, change into its directory,

add the Android platform (assuming you already have the Android development

tools installed) and build your new app:

cordova create hello com.example.hello HelloWorld

cd hello

cordova platform add android

cordova build

Oops!

Error: Please install Android target: "android-22"

Apparently Cordova/PhoneGap can only build with its own

preferred version of Android, which is currently API level 22.

Editing files to specify android-19 didn't work for me;

it just gave errors at a different point.
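Before kicking off a long download, it can help to see which platform targets the SDK already has. In the Android SDK layout each installed API level lives in its own platforms/android-NN directory, so a quick Python check like this hypothetical helper will tell you whether android-22 is there. It assumes the SDK location comes from the ANDROID_HOME environment variable unless you pass it explicitly.

```python
import os
import tempfile
from pathlib import Path


def installed_platforms(sdk_dir=None):
    """Return the set of platform targets (e.g. 'android-19') under <sdk>/platforms."""
    sdk_dir = sdk_dir or os.environ.get("ANDROID_HOME")
    if not sdk_dir:
        raise ValueError("pass sdk_dir or set ANDROID_HOME")
    platforms = Path(sdk_dir) / "platforms"
    if not platforms.is_dir():
        return set()
    return {p.name for p in platforms.iterdir()
            if p.is_dir() and p.name.startswith("android-")}


# Demo with a fake SDK that has only android-19 installed.
fake_sdk = Path(tempfile.mkdtemp())
(fake_sdk / "platforms" / "android-19").mkdir(parents=True)
print("android-22" in installed_platforms(fake_sdk))  # False
```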

So I fired up the Android SDK manager, selected android-22 for install,

accepted the license ... and waited ... and waited. In the end it took

over two hours to download the android-22 SDK; the system image is 13 GB!

So that's a bit of a strike against PhoneGap.

While I was waiting for android-22 to download, I took a look at Kivy.

Kivy

As a Python enthusiast, I wanted to like Kivy best.

Plus, it's in the Debian repositories: I installed it with

sudo apt-get install python-kivy python-kivy-examples

They have a nice quickstart tutorial for writing a Hello World app on their

site. You write it, then run it locally in Python to bring up a window and see

what the app will look like. But then the tutorial immediately jumps into more

advanced programming without telling you how to build and deploy

your Hello World. For Android, that information is in the

Android Packaging Guide. They recommend an app called Buildozer (cute name),

which you have to pull from git, build and install.

buildozer init

buildozer android debug deploy run

Running those started the build ... but then I noticed that it was attempting

to download and build its own version of Apache Ant (sort of a Java

version of make). I already have ant --

I've been using it for weeks for building my own Java Android apps.

Why did it want a different version?

The file buildozer.spec in your project's

directory lets you uncomment and customize variables like:

# (int) Android SDK version to use

android.sdk = 21

# (str) Android NDK directory (if empty, it will be automatically downloaded.)

# android.ndk_path =

# (str) Android SDK directory (if empty, it will be automatically downloaded.)

# android.sdk_path =

Unlike a lot of Android build packages, buildozer will not inherit

variables like ANDROID_SDK, ANDROID_NDK and ANDROID_HOME from your

environment; you must edit buildozer.spec.

But that doesn't help with ant.

Fortunately, when I inspected the Python code for buildozer itself, I

discovered there was another variable that isn't mentioned in the

default spec file. Just add this line:

android.ant_path = /usr/bin
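Since buildozer won't pick up SDK and NDK paths from the environment, you end up copying them into buildozer.spec by hand, along with that ant path. A small Python helper can make those edits while keeping the file's comments, which configparser would discard if you round-tripped the file through it. This is a sketch with hypothetical function names; it assumes the keys live in the [app] section, as in the default spec file, and it replaces only the first matching line, commented out or not.

```python
import os
import re

# Which environment variable feeds which buildozer.spec key (first match wins).
ENV_TO_SPEC = [
    ("ANDROID_SDK", "android.sdk_path"),
    ("ANDROID_HOME", "android.sdk_path"),
    ("ANDROID_NDK", "android.ndk_path"),
]


def spec_values_from_env(environ=None):
    """Collect {spec key: path} from the environment variables buildozer ignores."""
    environ = os.environ if environ is None else environ
    values = {}
    for var, key in ENV_TO_SPEC:
        if environ.get(var) and key not in values:
            values[key] = environ[var]
    return values


def set_spec_option(spec_text, key, value, section="app"):
    """Set 'key = value' in buildozer.spec text, leaving comments alone.

    The first line for key, commented out or not, is replaced in place;
    otherwise the new line goes right after the [section] header.
    """
    new_line = f"{key} = {value}"
    pattern = re.compile(rf"^#?[ \t]*{re.escape(key)}[ \t]*=.*$", re.MULTILINE)
    if pattern.search(spec_text):
        # A function replacement keeps backslashes in value from being parsed.
        return pattern.sub(lambda _m: new_line, spec_text, count=1)
    header = f"[{section}]\n"
    if header not in spec_text:
        raise ValueError(f"no [{section}] section in spec")
    return spec_text.replace(header, header + new_line + "\n", 1)


spec = "[app]\ntitle = Hello\n# android.sdk_path =\n"
spec = set_spec_option(spec, "android.ant_path", "/usr/bin")
for key, path in spec_values_from_env({"ANDROID_HOME": "/opt/android-sdk"}).items():
    spec = set_spec_option(spec, key, path)
print(spec, end="")
```

In real use you would read buildozer.spec, pass its text through set_spec_option, and write it back.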

Next, buildozer gave me a slew of compilation errors:

kivy/graphics/opengl.c: No such file or directory

... many many more lines of compilation interspersed with errors

kivy/graphics/vbo.c:1:2: error: #error Do not use this file, it is the result of a failed Cython compilation.

I had to ask on #kivy to solve that one. It turns out that the current

version of Cython, 0.22, doesn't work with Kivy stable. My choices were

to uninstall Kivy and pull the development version from git, or to uninstall

Cython and install version 0.21.2 via pip. I opted for the latter.

Either way, there's no "make clean"; removing the dist and build

directories is what let me start over with the new Cython.

sudo apt-get purge cython

sudo pip install Cython==0.21.2

rm -rf ./.buildozer/android/platform/python-for-android/dist

rm -rf ./.buildozer/android/platform/python-for-android/build
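The two rm -rf lines can also be wrapped in a small Python function, handy if you find yourself starting over more than once. This is a hypothetical helper, not a buildozer command; it assumes you point it at the project directory, and it only touches python-for-android's dist and build directories, the same ones removed above.

```python
import shutil
import tempfile
from pathlib import Path

P4A_DIR = Path(".buildozer/android/platform/python-for-android")


def clean_p4a(project_dir="."):
    """Delete python-for-android's dist and build directories; return which were removed."""
    removed = []
    for name in ("dist", "build"):
        target = Path(project_dir) / P4A_DIR / name
        if target.is_dir():
            shutil.rmtree(target)
            removed.append(name)
    return removed


# Demo on a throwaway project tree.
project = Path(tempfile.mkdtemp())
for name in ("dist", "build"):
    (project / P4A_DIR / name).mkdir(parents=True)
print(clean_p4a(project))  # ['dist', 'build']
```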

Buildozer was now happy, and proceeded to download and build Python-2.7.2,

pygame and a large collection of other Python libraries for the ARM platform.

Apparently each app packages the Python language and all libraries it needs

into the Android .apk file.
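An .apk is an ordinary zip archive, so you can check that for yourself by totting up what's inside. The Python sketch below sums uncompressed file sizes by top-level directory using the standard zipfile module. Where exactly the bundled Python interpreter and libraries end up inside the archive depends on the python-for-android version, so the demo archive's layout is invented; point the hypothetical apk_sizes helper at your own build's .apk to see the real breakdown.

```python
import tempfile
import zipfile
from collections import Counter
from pathlib import Path


def apk_sizes(apk_path):
    """Sum uncompressed file sizes in an .apk by top-level directory."""
    totals = Counter()
    with zipfile.ZipFile(apk_path) as apk:
        for info in apk.infolist():
            if info.is_dir():
                continue
            top = info.filename.split("/", 1)[0] if "/" in info.filename else "(top level)"
            totals[top] += info.file_size
    return totals


# Demo on a made-up archive.
demo_apk = Path(tempfile.mkdtemp()) / "demo.apk"
with zipfile.ZipFile(demo_apk, "w") as z:
    z.writestr("classes.dex", b"x" * 10)
    z.writestr("assets/private.mp3", b"y" * 100)
    z.writestr("lib/armeabi/libpython2.7.so", b"z" * 1000)
for top, size in apk_sizes(demo_apk).most_common():
    print(f"{size:>8}  {top}")
```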

Eventually I ran into trouble because I'd named my python file hello.py