Shallow Thoughts : tags : tech

Akkana's Musings on Open Source Computing and Technology, Science, and Nature.

Wed, 26 Mar 2025

Michael Kennedy asked whether people are using search engines less because of AI chatbots.

I haven't really gotten into using AI chatbots as coding assistants,

so I'm not one to say. But it did make me wonder how many searches I do.

Michael saw a stat that people average fewer than 300 searches per month;

he thought that was absurdly low until he checked his own stats and

found he'd only made 211 searches so far in March.

(Of course, March isn't over yet.

He didn't give a search number for a complete month.)
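One way to get a similar count from your own browser is to query Firefox's history database, places.sqlite, with Python's sqlite3 module. This is a sketch under assumptions, not necessarily the approach the full post takes. It assumes the history tables moz_places (with a url column) and moz_historyvisits (with place_id and a visit_date in microseconds since the Unix epoch), and it counts any visit to a URL starting with one of the hypothetical SEARCH_PREFIXES as one search. Run it on a copy of places.sqlite, since Firefox keeps the live file locked.

```python
import sqlite3
from collections import Counter
from datetime import datetime, timezone

# Hypothetical: URL prefixes that count as "a search". Adjust to taste.
SEARCH_PREFIXES = ("https://www.google.com/search?", "https://duckduckgo.com/?q=")

def searches_per_month(conn):
    """Count history visits to search-result URLs, grouped by "YYYY-MM" (UTC).

    Assumes Firefox's places.sqlite schema: moz_places(id, url) and
    moz_historyvisits(place_id, visit_date), where visit_date is in
    microseconds since the Unix epoch.
    """
    rows = conn.execute(
        "SELECT p.url, v.visit_date "
        "FROM moz_historyvisits AS v JOIN moz_places AS p ON p.id = v.place_id"
    )
    counts = Counter()
    for url, visit_date in rows:
        if url and url.startswith(SEARCH_PREFIXES):
            when = datetime.fromtimestamp(visit_date / 1_000_000, tz=timezone.utc)
            counts[when.strftime("%Y-%m")] += 1
    return counts
```

Usage would be something like `print(searches_per_month(sqlite3.connect("places-copy.sqlite")))`, with a hypothetical filename. Because it counts visits rather than distinct queries, reloads and clicks through to a second page of results each count too, so treat the numbers as a rough upper bound.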

Read more ...

Tags: tech, firefox, web, python, google, sqlite

[16:07 Mar 26, 2025 | More tech | permalink to this entry]

Thu, 27 Jul 2023

I don't write a lot of book reviews here, but I just finished a book

I'm enthusiastic about:

Recoding America: Why Government is Failing in the Digital Age and How We Can Do Better, by Jennifer Pahlka.

Read more ...

Tags: books, programming, tech

[19:20 Jul 27, 2023 | More tech | permalink to this entry]

Sun, 12 Dec 2021

I've spent a lot of the past week battling Russian spammers on

the New Mexico Bill Tracker.

The New Mexico legislature just began a special session to define the

new voting districts, which happens every 10 years after the census.

When new legislative sessions start, the BillTracker usually needs

some hand-holding to make sure it's tracking the new session. (I've

been working on code to make it notice new sessions automatically, but

it's not fully working yet). So when the session started, I checked

the log files...

and found them full of Russian spam.

Specifically, what was happening was that a bot was going to my

new user registration page and creating new accounts where the

username was a paragraph of Cyrillic spam.
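One server-side guard against this kind of bot registration is to reject usernames that no human would choose. This is a hypothetical sketch, not the BillTracker's actual code: the 32-character limit and the allowed-character pattern are assumptions. In a Flask app, a check like this would run in the registration view before any account is created.

```python
import re

# Hypothetical policy: up to 32 ASCII letters, digits, underscores, dots or hyphens.
MAX_USERNAME_LEN = 32
USERNAME_RE = re.compile(r"[A-Za-z0-9_.-]+")

def is_plausible_username(name):
    """Return True if name looks like a username rather than a paragraph of spam."""
    name = name.strip()
    if not name or len(name) > MAX_USERNAME_LEN:
        return False
    # fullmatch() requires the entire string to consist of allowed characters.
    return USERNAME_RE.fullmatch(name) is not None
```

Restricting usernames to ASCII also turns away legitimate users with non-Latin names, so a length limit alone, or a CAPTCHA, may be the gentler choice for some sites.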

Read more ...

Tags: web, tech, spam, programming, python, flask, captcha

[18:50 Dec 12, 2021 | More tech/web | permalink to this entry]

Tue, 08 Dec 2020

Los Alamos (and White Rock) Alert!

Los Alamos and White Rock readers: please direct your attention to Andy Fraser's Better Los Alamos Broadband NOW petition.

One thing the petition doesn't mention is that LANL is bringing a second high-speed trunk line through White Rock. I'm told that they don't actually need the extra bandwidth, but they want redundancy in case their main line goes out.

Meanwhile, their employees, and the rest of the town, are struggling

with home internet speeds that aren't even close to the federal definition

of broadband:

Read more ...

Tags: tech, los alamos

[15:17 Dec 08, 2020 | More politics | permalink to this entry]

Fri, 26 Jun 2020

The LWV Los Alamos is running a Privacy Study, which I'm co-chairing.

As preparation for our second meeting, I gave a Toastmasters talk entitled

"Browser Privacy: Cookies and Tracking and Scripts, Oh My!"

A link to the talk video, a transcript, and lots of extra details are available on my newly created Privacy page.

Tags: tech, privacy, speaking

[08:58 Jun 26, 2020 | More tech | permalink to this entry]

Sat, 09 May 2020

Social distancing is hitting some people a lot harder than others.

Of course, there are huge

inequities that are making life harder for a lot of people, even if

they don't know anyone infected with the coronavirus. Distancing is

pointing out long-standing inequalities in living situations (how much

can you distance when you live in an apartment with an elevator, and

get to work on public transit?) and, above all, in internet access.

Here in New Mexico, rural residents, especially on the pueblos and

reservations, often can't get a decently fast internet connection at

any price. I hope that this will eventually lead to a reshaping of how

internet access is sold in the US; but for now, it's a disaster for

students trying to finish their coursework from home, for workers

trying to do their jobs remotely, and for anyone trying to fill out a

census form or an application for relief.

It's a terrible problem,

but that's not really what this article is about. Today I'm writing

about the less tangible aspects of social distancing, and its

implications for introverts and extroverts.

Read more ...

Tags: tech, misc

[13:57 May 09, 2020 | More tech | permalink to this entry]

Thu, 09 Jul 2015

For a year or so, I've been appending "output=classic" to any Google

Maps URL. But Google disabled Classic mode last month.

(There have been a few other ways to get classic Google maps back, but Google is gradually disabling them one by one.)

I have basically three problems with the new maps:

- If you search for something, the screen is taken up by a huge box showing you what you searched for; if you click the "x" to dismiss the huge box so you can see the map underneath, the box disappears but so does the pin showing your search target.
- A big swath at the bottom of the screen is taken up by a filmstrip of photos from the location, and it's an extra click to dismiss that.
- Moving or zooming the map is very, very slow: it relies on OpenGL support in the browser, which doesn't work well on Linux in general, or on a lot of graphics cards on any platform.

Now that I don't have the "classic" option any more, I've had to find

ways around the problems -- either that, or switch to Bing maps.

Here's how to make the maps usable in Firefox.

First, for the slowness: the cure is to disable WebGL in Firefox. Go to about:config and search for webgl. Then double-click on the line for webgl.disabled to make it true.

For the other two, you can add userContent.css rules to tell Firefox to hide those boxes. Locate your Firefox profile. Inside it, edit chrome/userContent.css (create that file if it doesn't already exist), and add the following two lines:

```css
div#cards { display: none !important; }
div#viewcard { display: none !important; }
```

Voilà! The boxes that used to hide the map are now invisible.

Of course, that also means you can't use anything inside them; but I

never found them useful for anything anyway.

Tags: web, tech, mapping, GIS, firefox

[10:54 Jul 09, 2015 | More tech/web | permalink to this entry]

Thu, 30 Oct 2014

Today dinner was a bit delayed because I got caught up dealing with an

RSS feed that wasn't feeding. The website was down, and Python's

urllib2, which I use in my

"feedme" RSS fetcher,

has an inordinately long timeout.

That certainly isn't the first time that's happened, but I'd like it

to be the last. So I started to write code to set a shorter timeout,

and realized: how does one test that? Of course, the offending site

was working again by the time I finished eating dinner, went for a

little walk then sat down to code.

I did a lot of web searching, hoping maybe someone had already set up

a web service somewhere that times out for testing timeout code.

No such luck. And discussions of how to set up such a site

always seemed to center around installing elaborate heavyweight Java

server-side packages. Surely there must be an easier way!

How about PHP? A web search for that wasn't helpful either. But I

decided to try the simplest possible approach ... and it worked!

Just put something like this at the beginning of your HTML page

(assuming, of course, your server has PHP enabled):

```php
<?php sleep(500); ?>
```

Of course, you can adjust that 500 to be any delay you like.

Or you can even make the timeout adjustable, with a

few more lines of code:

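```php
<?php
// Sleep for ?timeout=N seconds if given, otherwise 500 seconds.
// The (int) cast keeps a non-numeric parameter from causing an error.
if (isset($_GET['timeout']))
    sleep((int)$_GET['timeout']);
else
    sleep(500);
?>
```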

Then surf to yourpage.php?timeout=6 and watch the page load

after six seconds.
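For anyone without PHP handy, the same trick can be reproduced with nothing but Python's standard library. This is a minimal sketch, not a replacement for the PHP page: the class name SlowHandler, the serve() helper and port 8000 are hypothetical choices. It reads the same timeout query parameter and defaults to 500 seconds, like the PHP example.

```python
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlparse

DEFAULT_DELAY = 500  # seconds, matching sleep(500) in the PHP version

class SlowHandler(BaseHTTPRequestHandler):
    """Wait ?timeout=N seconds (or DEFAULT_DELAY), then return a tiny page."""

    def do_GET(self):
        query = parse_qs(urlparse(self.path).query)
        try:
            delay = float(query["timeout"][0])
        except (KeyError, ValueError):
            delay = DEFAULT_DELAY
        time.sleep(max(delay, 0))
        body = b"<html><body>Finally loaded.</body></html>\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # don't log every request to stderr

def serve(port=8000):
    """Serve slow pages on localhost until interrupted."""
    ThreadingHTTPServer(("localhost", port), SlowHandler).serve_forever()
```

Call serve() and surf to http://localhost:8000/?timeout=6 to watch the page load after six seconds. ThreadingHTTPServer handles each request in its own thread, so one deliberately slow request doesn't hold up the next.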

Simple once I thought of it, but it's still surprising no one

had written it up as a cookbook formula. It certainly is handy.

Now I just need to get some Python timeout-handling code working.
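For the Python side, urllib2.urlopen() has accepted a timeout argument since Python 2.6, and Python 3's urllib.request.urlopen() takes the same argument. Here is a minimal Python 3 sketch of the timeout handling, not feedme's actual code; fetch() and its 15-second default are hypothetical. There are two separate failure paths: a timeout while connecting comes back wrapped in URLError, while a server that accepts the connection and then goes quiet raises socket.timeout directly.

```python
import socket
import urllib.error
import urllib.request

def fetch(url, timeout=15):
    """Return the bytes at url, or None if the site is down or too slow.

    timeout (seconds) applies to each blocking socket operation
    (connecting, waiting for the response, each read), not to the
    download as a whole.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.read()
    except urllib.error.URLError as err:
        # Refused connection, DNS failure, HTTP error status,
        # or a timeout while connecting.
        print(f"Couldn't fetch {url}: {err}")
    except socket.timeout:
        # Connected, but the server never answered in time.
        print(f"Timed out waiting for {url}")
    return None
```

Pointed at the slow PHP page with, say, yourpage.php?timeout=30 and fetch(url, timeout=5), this should give up after about five seconds and return None instead of hanging.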

Tags: web, tech, programming, php

[19:38 Oct 30, 2014 | More tech/web | permalink to this entry]

Sun, 07 Sep 2014

I read about cool computer tricks all the time. I think "Wow, that

would be a real timesaver!" And then a week later, when it actually

would save me time, I've long since forgotten all about it.

After yet another session where I wanted to open a frequently opened file in emacs and thought "I think I made a bookmark for that a while back", but then decided it's easier to type the whole long pathname than to go re-learn how to use emacs bookmarks, I finally decided I needed a reminder system -- something that would poke me and remind me of a few things I want to learn.

I used to keep cheat sheets and quick reference cards on my desk;

but that never worked for me. Quick reference cards tend to be

50 things I already know, 40 things I'll never care about and 4 really

great things I should try to remember. And eventually they get

buried in a pile of other papers on my desk and I never see them again.

My new system is working much better. I created a file in my home

directory called .reminders, in which I put a few -- just a few

-- things I want to learn and start using regularly. It started out

at about 6 lines but now it's grown to 12.

Then I put this in my .zlogin (of course, you can do this for any

shell, not just zsh, though the syntax may vary):

```zsh
if [[ -f ~/.reminders ]]; then
    cat ~/.reminders
fi
```

Now, in every login shell (which for me is each new terminal window

I create on my desktop), I see my reminders. Of course, I don't read

them every time; but I look at them often enough that I can't forget

the existence of great things like emacs bookmarks, or diff <(cmd1) <(cmd2).

And if I forget the exact

keystroke or syntax, I can always cat ~/.reminders to

remind myself. And after a few weeks of regular use, I finally have

internalized some of these tricks, and can remove them from my

.reminders file.

It's not just for tech tips, either; I've used a similar technique

for reminding myself of hard-to-remember vocabulary words when I was

studying Spanish. It could work for anything you want to teach yourself.

Although the details of my .reminders are specific to Linux/Unix and zsh,

of course you could use a similar system on any computer. If you don't

open new terminal windows, you can set a reminder to pop up when you

first log in, or once a day, or whatever is right for you. The

important part is to have a small set of tips that you see regularly.

Tags: linux, cmdline, tech

[21:10 Sep 07, 2014 | More tech | permalink to this entry]

Thu, 27 Mar 2014

Microsoft is in trouble this week -- someone discovered that Microsoft had read a user's Hotmail email as part of an internal leak investigation (more info here: Microsoft frisked blogger's Hotmail inbox, IM chat to hunt Windows 8 leaker, court told).

And that led The Verge to publish the alarming news that it's not just

Microsoft -- any company that handles your mail can also look at the contents:

"Free email also means someone else is hosting it; they own the

servers, and there's no legal or technical safeguard to keep them from

looking at what's inside."

Well, yeah. That's true of any email system -- not just free webmail like

Hotmail or Gmail.

I was lucky enough to learn that lesson early.

I was a high school student in the midst of college application angst.

The physics department at the local university had generously

given me an account on their Unix PDP-11 since I'd taken a few physics

classes there.

I had just sent off some sort of long, angst-y email message to a friend

at another local college, laying my soul bare,

worrying about my college applications and life choices and who I was going to

be for the rest of my life. You know, all that important earth-shattering

stuff you worry about when you're that age, when you're sure that any

wrong choice will ruin the whole rest of your life forever.

And then, fiddling around on the Unix system after sending my angsty mail,

I had some sort of technical question, something I couldn't figure out

from the man pages, and I sent off a quick question to the same college friend.

A couple of minutes later, I had new mail. From root.

(For non-Unix users, root is the account of the system administrator:

the person in charge of running the computer.) The mail read:

Just ask root. He knows all!

followed by a clear, concise answer to my technical question.

Great!

... except I hadn't asked root. I had asked my friend at a college across town.

When I got the email from root, it shook me up. His response to the

short technical question was just what I needed ... but if he'd read

my question, did it mean he'd also read

the long soul-baring message I'd sent just minutes earlier?

Was he the sort of snoop who spent his time reading all the mail

passing through the system? I wouldn't have thought so, but ...

I didn't ask; I wasn't sure I wanted to know. Lesson learned.

Email isn't private. Root (or maybe anyone else with enough knowledge)

can read your email.

Maybe five years later, I was a systems administrator on a

Sun network, and I found out what must have happened.

Turns out, when you're a sysadmin, sometimes you see things like that

without intending to. Something goes wrong with

the email system, and you're trying to fix it, and there's a spool

directory full of files with randomized names, and you're checking on

which ones are old and which are recent, and what has and hasn't gotten

sent ... and some of those files have content that includes the bodies

of email messages. And sometimes you see part of what's in them.

You're not trying to snoop. You don't sit there and read the full

content of what your users are emailing. (For one thing, you don't

have time, since typically this happens when you're madly trying to

fix a critical email problem.) But sometimes you do see snippets, even

if you're not trying to. I suspect that's probably what happened

when "root" replied to my message.

And, of course, a snoopy and unethical system administrator who really

wanted to invade his users' privacy could easily read everything

passing through the system. I doubt that happened on the college system

where I had an account, and I certainly didn't do it when I was a

sysadmin. But it could happen.

The lesson is that email, if you don't encrypt it, isn't private.

Think of email as being like a postcard. You don't expect Post Office employees

to read what's written on the postcard -- generally they have better

things to do -- but there are dozens of people who handle your postcard

as it gets delivered who could read it if they wanted to.

As the Verge article says,

"Peeking into your clients' inbox is bad form, but it's perfectly legal."

Of course, none of this excuses Microsoft's deliberately reading Hotmail mailboxes. It is bad form, and amid the outcry Microsoft has changed its Hotmail snooping policies somewhat, saying they'll only snoop deliberately in certain cases.

But the lesson for users is: if you're writing anything private, anything

you don't want other people to read ... don't put it on a postcard.

Or in unencrypted email.

Tags: email, privacy, tech

[14:59 Mar 27, 2014 | More tech/email | permalink to this entry]

Sun, 15 Dec 2013

On the way home from a trip last week, one of the hotels we stayed at had

an unexpected bonus:

Hi-Fi Internet!

![[Hi-fi internet]](http://shallowsky.com/blog/images/humor/img_8823-hifi.jpg)

You may wonder, was it mono or stereo?

They had two access points visible (with different ESSIDs), so I guess it was supposed to be stereo. Except one of the access points never worked, so it turned out to be mono after all.

Tags: humor, travel, tech

[19:35 Dec 15, 2013 | More humor | permalink to this entry]

Sun, 02 Jun 2013

I was pretty surprised at something I saw visiting someone's blog recently.

![[spam that the blog owner didn't see]](http://shallowsky.com/blog/images/screenshots/blogseoT.jpg) The top 2/3 of my browser window was full of spammy text with links to

shady places trying to sell me things like male enhancement pills

and shady high-interest loans.

Only below that was the blog header and content.

(I've edited out identifying details.)


Down below the spam, mostly hidden unless I scrolled down, was a

nicely designed blog that looked like it had a lot of thought behind it.

It was pretty clear the blog owner had no idea the spam was there.

Now, I often see weird things on websites, because I run Firefox with

noscript, with Javascript off by default. Many websites don't work at

all without Javascript -- they show just a big blank white page, or

there's some content but none of the links work. (How site designers

expect search engines to follow links that work only from Javascript is

a mystery to me.)

So I enabled Javascript and reloaded the site. Sure enough: it looked

perfectly fine: no spammy links anywhere.

Pretty clever, eh? Wherever the spam was coming from, it was set up

in a way that search engines would see it, but normal users wouldn't.

Including the blog owner himself -- and what he didn't see, he wouldn't

take action to remove.

Which meant that it was an SEO tactic.

Search Engine Optimization, if you're not familiar with it, is a

set of tricks to get search engines like Google to rank your site higher.

It typically relies on getting as many other sites as possible

to link to your site, often without regard to whether the link really

belongs there -- like the spammers who post pointless comments on

blogs along with a link to a commercial website. Since search engines

are in a continual war against SEO spammers, having this sort of spam

on your website is one way to get it downrated by Google.

The spammers don't expect anyone to click on the links from this blog;

they want the links to show up in Google searches where people will

click on them.

I tried viewing the source of the blog

(Tools->Web Developer->Page Source now in Firefox 21).

I found this (deep breath):

<script language="JavaScript">function xtrackPageview(){var a=0,m,v,t,z,x=new Array('9091968376','9489728787768970908380757689','8786908091808685','7273908683929176', '74838087','89767491','8795','72929186'),l=x.length;while(++a<=l){m=x[l-a]; t=z='';for(v=0;v<m.length;){t+=m.charAt(v++);if(t.length==2){z+=String.fromCharCode(parseInt(t)+33-l);t='';}}x[l-a]=z;}document.write('<'+x[0]+'>.'+x[1]+'{'+x[2]+':'+x[3]+';'+x[4]+':'+x[5]+'(800'+x[6]+','+x[7]+','+x[7]+',800'+x[6]+');}</'+x[0]+'>');} xtrackPageview();</script><div class=wrapper_slider><p>Professionals and has their situations hour payday lenders from Levitra Vs Celais

(long list of additional spammy text and links here)

Quite the obfuscated code! If you're not a Javascript geek, rest assured

that even Javascript geeks can't read that. The actual spam comes

after the Javascript, inside a div called wrapper_slider.

Somehow that Javascript mess must be hiding wrapper_slider from view.

Copying the page to a local file on my own computer, I changed the

document.write to an alert,

and discovered that the Javascript produces this:

<style>.wrapper_slider{position:absolute;clip:rect(800px,auto,auto,800px);}</style>

Indeed, its purpose was to hide the wrapper_slider

containing the actual spam.
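The document.write-to-alert trick works, but the decoding is simple enough to reproduce offline without running any of the attacker's code. Each encoded string is read two digits at a time, and each pair plus 33 - l is a character code, where l is the array length (8), so the offset is 25. The script walks the array from the end, but since the offset never changes, the order doesn't matter. This Python port is a sketch for illustration; decode() and the other names are hypothetical.

```python
def decode(encoded, offset):
    """Read two digits at a time; each pair plus offset is a character code."""
    return "".join(chr(int(encoded[i:i + 2]) + offset)
                   for i in range(0, len(encoded), 2))

# The eight strings from the injected script, in order.
X = ['9091968376', '9489728787768970908380757689', '8786908091808685',
     '7273908683929176', '74838087', '89767491', '8795', '72929186']

# The script adds 33 - l to each pair, where l = x.length = 8.
OFFSET = 33 - len(X)
w = [decode(s, OFFSET) for s in X]

# Reassemble the string the script passes to document.write().
css = ('<' + w[0] + '>.' + w[1] + '{' + w[2] + ':' + w[3] + ';' + w[4] + ':'
       + w[5] + '(800' + w[6] + ',' + w[7] + ',' + w[7] + ',800' + w[6]
       + ');}</' + w[0] + '>')
print(css)
```

Running it prints the same style rule that the alert revealed.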

Not actually to make it invisible -- search engines might be smart enough

to notice that -- but to move it off somewhere where browsers wouldn't

show it to users, yet search engines would still see it.

I had to look up the arguments to the CSS clip property. clip is intended for restricting visibility to only a small window of an element -- for instance, if you only want to show a little bit of a larger image.

Those rect arguments are top, right, bottom, and left.

In this case, the rectangle that's visible is way outside the

area where the text appears -- the text would have to span more than

800 pixels both horizontally and vertically to see any of it.

Of course I notified the blog's owner as soon as I saw the problem,

passing along as much detail as I'd found. He looked into it, and

concluded that he'd been hacked. No telling how long this has

been going on or how it happened, but he had to spend hours

cleaning up the mess and making sure the spammers were locked out.

I wasn't able to find much about this on the web. Apparently

attacks on Wordpress blogs aren't uncommon, and the goal of the

attack is usually to add spam.

The most common term I found for it was "blackhat SEO spam injection".

But the few pages I saw all described immediately visible spam.

I haven't found a single article about the technique of hiding the

spam injection inside a div with Javascript, so it's hidden from users

and the blog owner.

I'm puzzled by not being able to find anything. Can this attack

possibly be new? Or am I just searching for the wrong keywords?

Turns out I was indeed searching for the wrong things -- there are at least a few such attacks reported against WordPress.

The trick is searching on parts of the code like

function xtrackPageview, and you have to try several

different code snippets since it changes -- e.g. searching on

wrapper_slider doesn't find anything.

Either way, it's something all site owners should keep in mind, whether you have a large website or just a small blog. Just as it's good to visit your site periodically with a browser other than your usual one, it's also a good idea to check now and then with Javascript disabled.

You might find something you really need to know about.
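Part of that check can be automated. Fetching a page's raw HTML from a script, which runs no JavaScript at all, shows roughly what a noscript visitor gets, and the result can be searched for fragments like the ones mentioned above. A minimal sketch, assuming those signature strings are still representative; injected code changes over time, so an empty result is not proof that a site is clean. The names SIGNATURES, find_signatures() and check_page() are hypothetical.

```python
import urllib.request

# Hypothetical signature list, taken from the injected code described above.
# Attackers vary their code, so treat a miss as "not found", not "clean".
SIGNATURES = (
    "function xtrackPageview",
    "wrapper_slider",
    "String.fromCharCode(parseInt(",
)

def find_signatures(html, signatures=SIGNATURES):
    """Return the signatures that occur in the raw HTML text."""
    return [sig for sig in signatures if sig in html]

def check_page(url, timeout=30):
    """Fetch url without running any JavaScript and scan what comes back."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        html = response.read().decode("utf-8", errors="replace")
    return find_signatures(html)
```

For example, check_page("https://blog.example.com/") (a hypothetical URL) returns a list of whichever signatures it found.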

Tags: spam, tech, web

[19:59 Jun 02, 2013 | More tech/web | permalink to this entry]

Thu, 12 Jan 2012

When I give talks that need slides, I've been using my Slide Presentations in HTML and JavaScript for many years.

I uploaded it in 2007 -- then left it there, without many updates.

But meanwhile, I've been giving lots of presentations, tweaking the code,

tweaking the CSS to make it display better. And every now and then I get

reminded that a few other people besides me are using this stuff.

For instance, around a year ago, I gave a talk where nearly all the

slides were just images. Silly to have to make a separate HTML file

to go with each image. Why not just have one file, img.html, that

can show different images? So I wrote some code that lets you go to

a URL like img.html?pix/whizzyphoto.jpg, and it will display

it properly, and the Next and Previous slide links will still work.

Of course, I tweak this software mainly when I have a talk coming up.

I've been working lately on my SCALE talk, coming up on January 22: Fun with Linux and Devices (be ready for some fun Arduino demos!)

Sometimes when I overload on talk preparation, I procrastinate

by hacking the software instead of the content of the actual talk.

So I've added some nice changes just in the past few weeks.

For instance, take the speaker notes that remind me of where I am in the talk and what's coming next. I didn't have any way to add notes on image slides. But I need them on those slides, too -- so I added that.

Then I decided it was silly not to have some sort of automatic

reminder of what the next slide was. Why should I have to

put it in the speaker notes by hand? So that went in too.

And now I've done the less fun part -- collecting it all together and

documenting the new additions. So if you're using my HTML/JS slide

kit -- or if you think you might be interested in something like that

as an alternative to PowerPoint or LibreOffice Impress -- check

out the presentation I have explaining the package, including the

new features.

You can find it here: Slide Presentations in HTML and JavaScript

Tags: speaking, javascript, html, web, programming, tech

[21:08 Jan 12, 2012 | More speaking | permalink to this entry]

Tue, 03 Jan 2012

Like most Linux users, I use virtual desktops. Normally my browser

window is on a desktop of its own.

Naturally, it often happens that I encounter a link I'd like to visit

while I'm on a desktop where the browser isn't visible. From some apps,

I can click on the link and have it show up. But sometimes, the link is

just text, and I have to select it, change to the browser desktop,

paste the link into firefox, then change desktops again to do something

else while the link loads.

So I set up a way to load whatever's in the X selection in firefox no

matter what desktop I'm on.

In most browsers, including firefox, you can tell your existing

browser window to open a new link from the command line:

firefox http://example.com/ opens that link in your

existing browser window if you already have one up, rather than

starting another browser. So the trick is to get the text you've selected.

At first, I used a program called xclip. You can run this command:

firefox `xclip -o` to open the selection. That worked

okay at first -- until I hit my first URL in weechat that was so long

that it was wrapped to the next line. It turns out xclip does odd things

with multi-line output; depending on whether it thinks the output is

a terminal or not, it may replace the newline with a space, or delete

whatever follows the newline. In any case, I couldn't find a way to

make it work reliably when pasted into firefox.

After futzing with xclip for a little too long, trying to reverse-engineer

its undocumented newline behavior, I decided it would be easier just to

write my own X clipboard app in Python. I already knew how to do that,

and it's super easy once you know the trick:

```python
import gtk

# Read the X PRIMARY selection (whatever text is currently highlighted).
primary = gtk.clipboard_get(gtk.gdk.SELECTION_PRIMARY)
if primary.wait_is_text_available():
    print(primary.wait_for_text())
```

That just prints it directly, including any newlines or spaces.

But as long as I was writing my own app, why not handle that too?

It's not entirely necessary on Firefox: on Linux, Firefox has some

special code to deal with pasting multi-line URLs, so you can copy

a URL that spans multiple lines, middleclick in the content area and

things will work. On other platforms, that's disabled, and some Linux

distros disable it as well; you can enable it by going to

about:config and searching for single,

then setting the preference

editor.singleLine.pasteNewlines to 2.

However, it was easy enough to make my Python clipboard app do the

right thing so it would work in any browser. I used Python's re

(regular expressions) module:

```python
#!/usr/bin/env python
import re
import sys

import gtk

primary = gtk.clipboard_get(gtk.gdk.SELECTION_PRIMARY)
if not primary.wait_is_text_available():
    sys.exit(0)

s = primary.wait_for_text()

# eliminate newlines, and any spaces immediately following a newline:
print(re.sub(r'[\r\n]+ *', '', s))
```

That seemed to work fine, even on long URLs pasted from weechat

with newlines and spaces, like one that looked like

http://example.com/long-

url.html
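The gtk module used in the scripts above is PyGTK, which only ever existed for Python 2. On a current system the same script could be written for Python 3 with PyGObject. This is a sketch assuming PyGObject and GTK 3 are installed; clean_selection() and main() are hypothetical names, and the newline handling uses the same regular expression as above.

```python
import re

def clean_selection(s):
    """Join a URL wrapped across lines: drop newlines and the spaces after them."""
    return re.sub(r"[\r\n]+ *", "", s)

def main():
    # PyGObject (GTK 3) equivalents of the PyGTK calls; needs a running X session.
    import gi
    gi.require_version("Gdk", "3.0")
    gi.require_version("Gtk", "3.0")
    from gi.repository import Gdk, Gtk

    primary = Gtk.Clipboard.get(Gdk.SELECTION_PRIMARY)
    text = primary.wait_for_text()  # None if the selection isn't text
    if text:
        print(clean_selection(text))
```

To use it as a drop-in pyclip, save it as an executable script with a #!/usr/bin/env python3 line at the top and a call to main() at the bottom.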

All that was left was binding it so I could access it from anywhere.

Of course, that varies depending on your desktop/window manager.

In Openbox, I added two items to my desktop menu in menu.xml:

```xml
<item label="open selection in Firefox">
  <action name="Execute"><execute>sh -c 'firefox `xclip -o`'</execute></action>
</item>
<item label="open selection in new tab">
  <action name="Execute"><execute>sh -c 'firefox -new-tab `xclip -o`'</execute></action>
</item>
```

I also added some code in rc.xml inside

<context name="Desktop">, so I can middle-click

or control-middle-click on the desktop to open a link in the browser:

```xml
<mousebind button="Middle" action="Press">
  <action name="Execute">
    <execute>sh -c 'firefox `pyclip`'</execute>
  </action>
</mousebind>
<mousebind button="C-Middle" action="Press">
  <action name="Execute">
    <execute>sh -c 'firefox -new-tab `pyclip`'</execute>
  </action>
</mousebind>
```

I set this up maybe two hours ago and I've probably used it ten or

fifteen times already. This is something I should have done long ago!

Tags: tech, firefox, linux, cmdline

[22:37 Jan 03, 2012 | More linux | permalink to this entry]

Sat, 24 Sep 2011

I suspect all technical people -- at least those with a web presence

-- get headhunter spam. You know, email saying you're perfect for a

job opportunity at "a large Fortune 500 company" requiring ten years'

experience with technologies you've never used.

Mostly I just delete it. But this one sent me a followup --

I hadn't responded the first time, so surely I hadn't seen it and

here it was again, please respond since I was perfect for it.

Maybe I was just in a pissy mood that night. But

look, I'm a programmer, not a DBA -- I had to look it up to verify

that I knew what DBA stood for. I've never used Oracle.

A "Production DBA with extensive Oracle experience" job is right out,

and there's certainly nothing in my resume that would suggest that's

my line of work.

So I sent a brief reply, asking,

Why do you keep sending this?

Why exactly do you think I'm a DBA or an Oracle expert?

Have you looked at my resume? Do you think spamming people

with jobs completely unrelated to their field will get many

responses or help your credibility?

I didn't expect a reply. But I got one:

I must say my credibility is most important and it's unfortunate

that recruiters are thought of as less than in these regards. And, I know it

is well deserved by many of them.

In fact, Linux and SQL experience is more important than Oracle in this

situation and I got your email address through the Peninsula Linux Users

Group site which is old info and doesn't give any information about its

members' skill or experience. I only used a few addresses to experiment with

to see if their info has any value. Sorry you were one of the test cases but

I don't think this is spamming and apologize for any inconvenience it caused

you.

[name removed], PhD

A courteous reply. But it stunned me.

Harvesting names from old pages on a LUG website, then sending a

rather specific job description out to all the names harvested,

regardless of their skillset -- how could that possibly not be

considered spam? Isn't that practically the definition of spam?

And how could a recruiter expect to seem credible after sending this

sort of non-targeted mass solicitation?

To technical recruiters/headhunters: if you're looking for

good technical candidates, it does not help your case to spam people

with jobs that show you haven't read or understood their resume.

All it does is get you a reputation as a spammer. Then if you do, some

day, have a job that's relevant, you'll already have lost all credibility.

Tags: spam, headhunters, tech

[21:30 Sep 24, 2011 | More tech | permalink to this entry]

Tue, 16 Aug 2011

Google has been doing a horrible UI experiment with me recently

involving its search field.

I search for something -- fine, I get a normal search page.

At the top of the page is a text field with my search terms, like this:

![[normal-looking google search bar]](http://shallowsky.com/blog/images/screenshots/google-searchbar.jpg)

Now suppose I want to modify my search. Suppose I double-click the word

"ui", or drag my mouse across it to select it, perhaps intending to

replace it with something else. Here's what happens:

![[messed up selection in google search bar]](http://shallowsky.com/blog/images/screenshots/google-searchbar-selected.jpg)

Whoops! It highlighted something other than what I clicked, changed the

font size of the highlighted text and moved it. Now I have no idea what

I'm modifying.

This started happening several weeks ago (at about the same time they made Instant Search mandatory -- yuck). It only happens on one of my machines, so I can only assume they're running one of their little UI experiments with me, but clearing google cookies (or even banning cookies from Google) didn't help.

Blacklisting Google from javascript cures it, but then I can't

use Google Maps or other services.

For a week or so, I tried using other search engines. Someone pointed

me to Duck Duck Go, which isn't

bad for general searches. But when it gets to technical searches,

or elaborate searches with OR and - operators, google's search

really is better. Except for, you know, minor details like not being

able to edit your search terms.

But finally it occurred to me to try Firebug. Maybe I could find out

why the font size was getting changed. Indeed, a little poking around

with Firebug showed a suspicious-looking rule on the search field:

.gsfi, .lst {

font: 17px arial,sans-serif;

}

and disabling that made highlighting work again.

So to fix it permanently, I added the following

to chrome/userContent.css in my Firefox profile directory:

.gsfi, .lst {

font-family: inherit !important;

font-size: inherit !important;

}

And now I can select text again! At least until the next time Google

changes the rule and I have to go back to Firebug to chase it down

all over again.
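If you'd rather script that fix than edit the file by hand, here's a minimal Python sketch. It assumes you already know your Firefox profile directory (on Linux it's usually a randomly named directory under ~/.mozilla/firefox/; the demo uses a throwaway directory instead), creates the chrome/ subdirectory if it's missing, and appends the override only once. Note that recent Firefox versions ignore userContent.css unless toolkit.legacyUserProfileCustomizations.stylesheets is set to true in about:config.

```python
import tempfile
from pathlib import Path

# The override from this post: make the search field inherit the page's
# font instead of the 17px rule that broke text selection.
OVERRIDE = """.gsfi, .lst {
    font-family: inherit !important;
    font-size: inherit !important;
}
"""


def add_user_content_rule(profile_dir, css=OVERRIDE):
    """Append css to <profile_dir>/chrome/userContent.css unless it's already there.

    Returns the path of the userContent.css file.
    """
    chrome_dir = Path(profile_dir) / "chrome"
    chrome_dir.mkdir(parents=True, exist_ok=True)
    css_file = chrome_dir / "userContent.css"
    existing = css_file.read_text() if css_file.exists() else ""
    if css.strip() in existing:
        return css_file          # already added; don't append a duplicate
    with css_file.open("a") as f:
        if existing and not existing.endswith("\n"):
            f.write("\n")        # keep the new rule on its own line
        f.write(css)
    return css_file


if __name__ == "__main__":
    # Demo against a throwaway directory standing in for a real profile.
    with tempfile.TemporaryDirectory() as profile:
        path = add_user_content_rule(profile)
        add_user_content_rule(profile)   # second call changes nothing
        print(path.read_text())
```

Checking for the rule before appending means you can rerun the script after every Google change without piling up copies.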

Note to Google UI testers:

No, it does not make search easier to use to change the font size in

the middle of someone's edits. It just drives the victim away to

try other search engines.

Tags: tech, google, css, web

[

22:05 Aug 16, 2011

More tech/web |

permalink to this entry |

]

Tue, 26 Jul 2011

I've been dying to play with an ebook reader, and this week my mother

got a new Nook Touch. That's not its official name,

but since Barnes & Noble doesn't seem interested in giving it a

model name to tell it apart from the two older Nooks, that's the name

the internet seems to have chosen for this new small model with the

6-inch touchscreen.

Here's a preliminary review, based on a few days of playing with it.

Nice size, nice screen

The Nook Touch feels very light. It's a little heavier than a

paperback, but it's easy to hold, and the rubbery back feels nice in

the hand. The touchscreen works well enough for book reading, though

you wouldn't want to try to play video games or draw pictures on it.

It's very easy to turn pages, either with the hardware buttons on the

bezel or a tap on the edges of the screen. Page changes are

much faster than with older e-ink readers like the original Nook or the

Sony Pocket: the screen still flashes black with each page change,

but only very briefly.

I'd wondered how a non-backlit e-ink display would work in dim light,

since that's one thing you can't test in stores. It turns out it's

not as good as a paper book -- neither as sharp nor as contrasty -- but

still readable with my normal dim bedside lighting.

Changing fonts, line spacing and margins is easy once you figure out

that you need to tap on the screen to get to that menu.

Navigating within a book is also via that tap-on-page menu -- it gives

you a progress meter you can drag, or a "jump to page" option. Which is

a good thing. This is sadly very important (see below).

Searching within books isn't terribly convenient. I wanted to figure

out from the user manual how to set a bookmark, and I couldn't find

anything that looked helpful in the user manual's table of contents,

so I tried searching for "bookmark". The search results don't show much

context, so I had to try them one at a time, and

there's no easy way to go back and try the next match.

(Turns out you set a bookmark by tapping in the upper right corner,

and then the bookmark applies to the next several pages.)

Plan to spend some quality time reading the full-length manual

(provided as a pre-installed ebook, naturally) learning tricks like this:

a lot of the UI isn't very discoverable (though it's simple enough

once you learn it) so you'll miss a lot if you rely on what you can

figure out by tapping around.

Off to a tricky start with minor Wi-fi issues

When we first powered up, we hit a couple of problems right off with

wireless setup.

First, it had no way to set a static IP address. The only way we

could get the Nook connected was to enable DHCP on the router.

But even then it wouldn't connect. We'd re-type the network

password and hit "Connect"; the "Connect" button would flash

a couple of times, leaving an "incorrect password" message at the top

of the screen. This error message never went away, even after going

back to the screen with the list of networks available, so it wasn't

clear whether it was retrying the connection or not.

Finally through trial and error we found the answer: to clear a

failed connection, you have to "Forget" the network and start over.

So go back to the list of wireless networks, choose the right network,

then tap the "Forget" button. Then go back and choose the network

again and proceed to the connect screen.

Connecting to a computer

The Nook Touch doesn't come with much in the way of starter books --

just two public-domain titles, plus its own documentation -- so the

first task was to download a couple of

Project Gutenberg books that

Mom had been reading on her Treo.

The Nook uses a standard micro-USB cable for both charging and its

USB connection. Curiously, it shows up as a USB device with no

partitions -- you have to mount sdb, not sdb1. Gnome handled that

and mounted it without drama. Copying epub books to the Nook was just

a matter of cp or drag-and-drop -- easy.
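If you're mounting by hand or from a script rather than letting Gnome do it, the trick is deciding whether to mount the whole disk or its first partition. Here's a minimal Python sketch that makes that choice from /proc/partitions. It assumes Linux, sdX-style device names (names like mmcblk0p1 follow a different pattern), and that you already know which device the Nook showed up as; the sample table is made up.

```python
import re

# Made-up /proc/partitions contents: sda has a partition table,
# sdb (standing in for the Nook) is a bare device with no partitions.
SAMPLE = """major minor  #blocks  name

   8        0  488386584 sda
   8        1  488385536 sda1
   8       16    1931264 sdb
"""


def parse_partitions(text):
    """Return the device names listed in /proc/partitions-style text."""
    names = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows are: major, minor, #blocks, name (the header row isn't numeric).
        if len(fields) == 4 and fields[0].isdigit():
            names.append(fields[3])
    return names


def device_to_mount(disk, partitions_text):
    """Return /dev/<disk>N for the lowest-numbered partition if any, else /dev/<disk>."""
    names = parse_partitions(partitions_text)
    parts = [n for n in names if re.fullmatch(re.escape(disk) + r"\d+", n)]
    parts.sort(key=lambda n: int(n[len(disk):]))   # numeric order: sdb2 before sdb10
    return "/dev/" + (parts[0] if parts else disk)


if __name__ == "__main__":
    print(device_to_mount("sdb", SAMPLE))   # whole device, like the Nook
    print(device_to_mount("sda", SAMPLE))   # first partition of a normal disk
    # On a real system: device_to_mount("sdb", open("/proc/partitions").read())
```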

Getting library books may be moot

One big goal for this device is reading ebooks from the public library,

and I had hoped to report on that.

But it turns out to be a more difficult proposition than expected.

There are all the expected DRM issues to surmount, but before that,

there's the task of finding an ebook that's actually available to

check out, getting the library's online credentials straightened

out, and so forth. So that will be a separate article.

The fatal flaw: forgetting its position

Alas, the review is not all good news. While poking around, reading

a page here and there, I started to notice that I kept getting reset

back to the beginning of a book I'd already started. What was up?

For a while I thought it was my imagination. Surely remembering one's

place in a book you're reading is fundamental to a device designed from

the ground up as a book reader. But no -- it clearly was forgetting

where I'd left off. How could that be?

It turns out this is a known and well-reported problem

with what B&N calls "side-loaded" content -- i.e. anything

you load from your computer rather than download from their bookstore.

With side-loaded books, apparently connecting the Nook to a PC

causes it to lose its place in the book you're reading!

(Also discussed here and here.)

There's no word from Barnes & Noble about this on any of the threads,

but people writing about it speculate that when the Nook makes a USB

connection, it internally unmounts its filesystems -- and

forgets anything it knew about what was on those filesystems.

I know B&N wants to drive you to their site to buy all your books

... and I know they want to keep you online checking in with their

store at every opportunity. But some people really do read free

books, magazines and other "side loaded" content. An ebook reader

that can't handle that properly isn't much of a reader.

It's too bad. The Nook Touch is a nice little piece of hardware. I love

the size and light weight, the daylight-readable touchscreen, the fast

page flips. Mom is being tolerant about her new toy, since she likes it

otherwise -- "I'll just try to remember what page I was on."

But come on, Barnes & Noble: a dedicated ebook reader

that can't remember where you left off reading your book? Seriously?

Tags: ebook, tech, nook

[

20:46 Jul 26, 2011

More tech |

permalink to this entry |

]

Sun, 24 Apr 2011

I spent Friday and Saturday at the

WhereCamp unconference on mapping,

geolocation and related topics.

This was my second year at WhereCamp. It's always a bit humbling. I

feel like I'm pretty geeky, and I've written a couple of Python

mapping apps and I know spherical geometry and stuff ... but when

I get in a room with the folks at WhereCamp I realize I don't know

anything at all. And it's all so interesting I want to learn all of it!

It's a terrific and energetic unconference.

I won't try to write up a full report, but here are some highlights.

Several Grassroots Mapping

people were there again this year.

Jeffrey Warren led people in constructing balloons from tape and mylar

space blankets in the morning, and they shot some aerial photos.

Then in a late-afternoon session he discussed how to stitch the

aerial photos together using Cartagen Knitter.

But he also had other projects to discuss:

the Passenger Pigeon project to give cameras to people who will

be flying over environmental sites that need to be monitored -- like New York's

Gowanus Canal superfund site, next to La Guardia airport.

And the new Public Laboratory for Open

Technology and Science has a new project making vegetation maps

by taking aerial photos with two cameras simultaneously, one normal,

one modified for infra-red photography.

How do you make an IR camera? First you have to remove the IR-blocking

filter that all digital cameras come with (CCD sensors are very

sensitive to IR light). Then you need to add a filter that blocks

out most of the visible light. How? Well, it turns out that exposed

photographic film (remember film?) makes a good IR-only filter.

So you go to a camera store, buy a roll of film, rip it out of the

reel while ignoring the screams of the people in the store, then hand

it back to them and ask to have it developed. Cheap and easy.

Even cooler, you can use a similar technique to make a

spectrometer from a camera, a cardboard box and a broken CD.

Jeffrey showed spectra for several common objects, including bacon

(actually pancetta, it turns out).

JW: See the dip in the UV? Pork fat is very absorbent in the UV. That's why some people use pork products as sunscreen.

Audience member: Who are these people?

JW: Well, I read about them on the internet.

I ask you, how can you beat a talk like that?

Two Google representatives gave an interesting demo of some of

the new Google APIs related to maps and data visualization, in

particular Fusion Tables. Motion charts sounded especially interesting,

but they didn't have a demo handy; there may be one appearing soon

in the Fusion Charts gallery.

They also showed the new enterprise-oriented

Google Earth Builder, and custom street views for Google Maps.

There were a lot of informal discussion sessions, people brainstorming

and sharing ideas. Some of the most interesting ones I went to included:

- Indoor orientation with Android sensors: how do you map an unfamiliar

room using just the sensors in a typical phone? How do you orient

yourself in a room for which you do have a map?

- A discussion of open data and the impending shutdown of data.gov.

How do we ensure open data stays open?

- Using the Twitter API to analyze linguistic changes -- who initiates

new terms and how do they spread? -- or to search for location-based

needs (any good ice cream places near where I am?)

- Techniques of data visualization -- how can we move beyond basic

heat maps and use interactivity to show more information? What works

and what doesn't?

- An ongoing hack session in the scheduling room included folks

working on projects like a system to get information from pilots

to firefighters in real time. It was also a great place to get help

on any map-related programming issues one might have.

- Random amusing factoid that I still need to look up

(aside from the pork and UV one): Automobile tires have RFID in them?

Lightning talks included demonstrations and discussions of

global Twitter activity as the Japanese quake and tsunami

news unfolded, the new CD from OSGeo, and

the upcoming PII conference --

that's Privacy Identity Innovation -- in Santa Clara.

There were quite a few outdoor game sessions Friday. I didn't take part

myself since they all relied on having an iPhone or Android phone: my

Archos 5 isn't

reliable enough at picking up distant wi-fi signals to work as an

always-connected device, and the Stanford wi-fi net was very flaky

even with my laptop, with lots of dropped connections.

Even the OpenStreetMap mapping party

was set up to require smartphones, in contrast with past mapping

parties that used Garmin GPS units. Maybe this is ultimately a good thing:

every mapping party I've been to fizzled out after everyone got back

and tried to upload their data and discovered that nobody had

GPSBabel installed, nor the drivers for reading data off a Garmin.

I suspect most mapping party data ended up getting tossed out.

If everybody's uploading their data in realtime with smartphones,

you avoid all that and get a lot more data. But it does limit your

contributors a bit.

There were a couple of lowlights. Parking was very tight, and somewhat

expensive on Friday, and there wasn't any info on the site except

a cheerfully misleading "There's plenty of parking!" And the lunch

schedule on Saturday was a bit of a mess -- no one was sure when the

lunch break was (it wasn't on the schedule), so the afternoon schedule had

to be redone a couple of times while everybody worked it out. Still,

those are pretty trivial complaints -- sheesh, it's a free, volunteer

conference! And they even provided free meals, and t-shirts too!

Really, WhereCamp is an astoundingly fun gathering. I always leave

full of inspiration and ideas, and appreciation for the amazing

people and projects presented there. A big thanks to the organizers

and sponsors. I can't wait 'til next year -- and I hope I'll have

something worth presenting then!

Tags: wherecamp, mapping, GIS, tech, openstreetmap

[

23:40 Apr 24, 2011

More mapping |

permalink to this entry |

]

Sun, 27 Mar 2011

Funny thing happened last week.

I'm on the mailing list for a volunteer group. Round about last December,

I started getting emails every few weeks

congratulating me on RSVPing for the annual picnic meeting on October 17.

This being well past October, when the meeting apparently occurred --

and considering I'd never heard of the meeting before,

let alone RSVPed for it --

I couldn't figure out why I kept getting these notices.

After about the third time I got the same notice, I tried replying,

telling them there must be something wrong with their mailer. I never

got a reply, and a few weeks later I got another copy of the message

about the October meeting.

I continued sending replies, getting nothing in return -- until last week,

when I got a nice apologetic note from someone in the organization,

and an explanation of what had happened. And the explanation made me laugh.

Seems their automated email system sends messages as multipart,

both HTML and plaintext. Many user mailers do that; if you haven't

explicitly set it to do otherwise, you yourself are probably sending out

two copies of every mail you send, one in HTML and one in plain text.

But in this automated system, the plaintext part was broken. When it

sent out new messages in HTML format, apparently for the plaintext part

it was always attaching the same old message, this message from October.

Apparently no one in the

organization had ever bothered to check the configuration, or looked

at the plaintext part, to realize it was broken. They probably didn't

even know it was sending out multiple formats.
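For anyone who hasn't looked inside one, such a message is a multipart/alternative container holding a text/plain part and a text/html part that are supposed to say the same thing. Here's a minimal sketch using Python's standard email package; the addresses are placeholders. By convention the plainest version comes first and the richest last, and each reader's mailer shows whichever one that reader prefers, which is how a stale text part can go unnoticed by everyone who reads the HTML.

```python
from email.message import EmailMessage


def build_notice(subject, text_body, html_body):
    """Build a multipart/alternative message with plain-text and HTML versions."""
    msg = EmailMessage()
    msg["Subject"] = subject
    msg["From"] = "notices@example.org"   # placeholder addresses
    msg["To"] = "member@example.org"
    msg.set_content(text_body)                       # becomes the text/plain part
    msg.add_alternative(html_body, subtype="html")   # adds the text/html part
    return msg


if __name__ == "__main__":
    msg = build_notice("Annual picnic",
                       "The annual picnic is on October 17.",
                       "<p>The annual picnic is on <b>October 17</b>.</p>")
    print(msg.get_content_type())
    for part in msg.iter_parts():
        print("  ", part.get_content_type())
```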

I have my mailer configured to show me plaintext in preference to HTML.

Even if I didn't use a text mailer (mutt), I'd still use that

setting -- Thunderbird, Apple Mail, Claws and many other mailers

offer it. It protects you from lots of scams and phishing attacks,

"web bugs" to track you, and people who think it's the height of style

to send mail in blinking yellow comic sans on a red plaid background.

And reading the plaintext messages from this organization, I'd never

noticed that the message had an HTML part, or thought to look at it to

see if it was different.
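In mutt that preference is the alternative_order setting (for example, alternative_order text/plain text/html). Here's a small Python sketch of the same choice using the standard library's get_body(), fed a made-up message like the ones this organization was sending: a stale plain-text part and a current HTML part. Which one you see depends entirely on the preference list.

```python
from email import policy
from email.message import EmailMessage
from email.parser import BytesParser


def preferred_body(raw_bytes, prefer=("plain", "html")):
    """Return (content type, text) of the part a mailer with this preference would show."""
    msg = BytesParser(policy=policy.default).parsebytes(raw_bytes)
    part = msg.get_body(preferencelist=prefer)
    if part is None:
        return None, ""
    return part.get_content_type(), part.get_content()


if __name__ == "__main__":
    # A made-up message whose plain-text part is stale, as in the story above.
    msg = EmailMessage()
    msg["Subject"] = "Volunteer newsletter"
    msg.set_content("Thanks for RSVPing for the annual picnic on October 17!")
    msg.add_alternative("<p>This month's volunteer newsletter.</p>", subtype="html")
    raw = msg.as_bytes()

    print(preferred_body(raw))                            # what a plaintext-first reader sees
    print(preferred_body(raw, prefer=("html", "plain")))  # what an HTML-first reader sees
```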

It's not the first time I've seen automated mailers send multipart

mail with the text part broken. An astronomy club I used to belong to

set up a new website last year, and now all their meeting notices,

which used to come in plaintext over a Yahoo groups mailing list,

have a text part that looks like this actual example from a few days ago:

Subject: Members' Night at the Monthly Meeting

<p><style type="

16;ext/css">@font-face {

font-family: "MS 明朝";

}@font-face {

font-family: "MS 明朝";

}@font-face {

font-family: "Cambria";

}p.MsoNormal, li.MsoNormal, div.MsoNormal { margin: 0in 0in 0.0001pt; font-size:

12pt; font-family: Cambria; }a:link, span.MsoHyperlink { color: blue;

text-decoration: underline; }a:visited, span.MsoHyperlinkFollowed { color:

purple; text-decoration: underline; }.MsoChpDefault { font-family: Cambria;

}div.WordSection1 { page: WordSection1;

}</style>

<p class="MsoNormal">Friday April 8<sup>th</sup> is members’ night at the

monthly meeting of the PAS.<span style="">  </span>We are asking for

anyone, who has astronomical photographs that they would like to share, to

present them at the meeting.<span style="">  </span>Each presenter will

have about 15 minutes to present and discuss his pictures.<span style=""> We

already have some presenters.   </span></p>

<p class="MsoNormal"> </p>

... on and on for pages full of HTML tags and no line breaks.

I contacted the webmaster, but he was just using packaged software and

didn't seem to grok that the software was broken and was sending HTML

for the plaintext part as well as for the HTML part. His response was

fairly typical: "It looks fine to me".

I eventually gave up even trying to read their meeting announcements,

and now I just delete them.

The silly thing about this is that I can read HTML mail just fine, if

they'd just send HTML mail. What causes the problem is these automated

systems that insist on sending both HTML and plaintext, but then the

plaintext part is wrong. You'll see it on a lot of spam, too, where

the plaintext portion says something like "Get a better mailer"

(why? so I can see your phishing attack in all its glory?)

Folks, if you're setting up an automated email system, just pick one format

and send it. Don't configure it to send multiple formats unless you're

willing to test that all the formats actually work.

And developers, if you're writing an automated email system: don't

use MIME multipart/alternative by default unless you're actually sending

the same message in different formats. And if you must use multipart ...

test it. Because your users, the administrators deploying your system

for their organizations, won't know how to.
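Here's one rough idea of what "test it" could mean, as a Python sketch rather than anything a particular mail package actually ships: before sending, check that the text/plain part doesn't contain raw HTML tags, and that most of its words also appear in the HTML part. The tag-matching regex and the 50% overlap threshold are arbitrary assumptions, but checks like these would likely have caught both of the broken mailers described above.

```python
import re
from email.message import EmailMessage

# Something that looks like an HTML start or end tag, e.g. <p>, </span>, <style type="...">.
# It deliberately doesn't match <https://example.org>, which is common in plain text.
TAG_RE = re.compile(r"</?[A-Za-z][A-Za-z0-9]*(?:\s[^>]*)?/?>")


def words(text):
    """Lowercased set of word-like tokens in text."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def check_alternative(msg, min_overlap=0.5):
    """Return a list of problems with a multipart/alternative message (empty if none found)."""
    if msg.get_content_type() != "multipart/alternative":
        return []
    plain = msg.get_body(preferencelist=("plain",))
    html = msg.get_body(preferencelist=("html",))
    if plain is None or html is None:
        return ["missing text/plain or text/html part"]
    problems = []
    plain_text = plain.get_content()
    if TAG_RE.search(plain_text):
        problems.append("text/plain part contains HTML tags")
    # Compare the visible words of both parts, ignoring markup.
    plain_words = words(TAG_RE.sub(" ", plain_text))
    html_words = words(TAG_RE.sub(" ", html.get_content()))
    if plain_words:
        overlap = len(plain_words & html_words) / len(plain_words)
        if overlap < min_overlap:
            problems.append("text/plain and text/html parts differ (%d%% word overlap)"
                            % round(100 * overlap))
    return problems


if __name__ == "__main__":
    # HTML pasted into the plain-text part, like the astronomy club's notices.
    msg = EmailMessage()
    msg.set_content("<p class=\"MsoNormal\">Friday April 8 is members' night.</p>")
    msg.add_alternative("<p class=\"MsoNormal\">Friday April 8 is members' night.</p>",
                        subtype="html")
    print(check_alternative(msg))
```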

Tags: tech, email

[

14:19 Mar 27, 2011

More tech/email |

permalink to this entry |

]

Fri, 25 Feb 2011

This week's Linux Planet article continues the Plug Computer series,

with Cross-compiling Custom Kernels for Little Linux Plug Computers.

It covers how to find and install a cross-compiler (sadly, your

Linux distro probably doesn't include one), configuring the Linux

kernel build, and a few gotchas and things that I've found not to

work reliably.

It took me a lot of trial and error to figure out some of this --

there are lots of howtos on the web, but a lot of them skip the basic

steps, like the syntax for the kernel's CROSS_COMPILE argument --

but you'll need a custom kernel if you want to enable any unusual drivers like

GPIO. So good luck, and have fun!
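The detail that trips people up is that CROSS_COMPILE is a prefix, not a program name: the kernel build prepends it to gcc, ld and the other tool names, so it needs the trailing dash. Here's a small Python sketch that assembles the make command line. The toolchain prefix arm-none-linux-gnueabi- and the uImage target are assumptions for illustration (use whatever prefix your cross-compiler actually installed, and whatever image format your plug's bootloader expects); the sketch only builds and prints the command rather than running it.

```python
import shlex
import shutil


def kernel_make_cmd(cross_prefix, target="uImage", arch="arm", jobs=2):
    """Return the argv for cross-building a kernel with make.

    cross_prefix is the toolchain prefix, trailing dash included
    (hypothetical example: "arm-none-linux-gnueabi-").
    """
    if not cross_prefix.endswith("-"):
        # Without the dash the build would look for e.g. "arm-none-linux-gnueabigcc".
        raise ValueError("CROSS_COMPILE prefix should end with '-': %r" % cross_prefix)
    return ["make", "-j%d" % jobs, "ARCH=" + arch,
            "CROSS_COMPILE=" + cross_prefix, target]


def compiler_for(cross_prefix):
    """The C compiler the kernel build will invoke for this prefix."""
    return cross_prefix + "gcc"


if __name__ == "__main__":
    prefix = "arm-none-linux-gnueabi-"          # hypothetical toolchain prefix
    if shutil.which(compiler_for(prefix)) is None:
        print("warning: %s not found on PATH" % compiler_for(prefix))
    # Run the printed command from the top of the configured kernel source tree.
    print(shlex.join(kernel_make_cmd(prefix)))
```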

Tags: tech, linux, plug, kernel, writing

[

12:30 Feb 25, 2011

More tech |

permalink to this entry |

]

Thu, 10 Feb 2011

This week's Linux Planet article continues my

Plug Computing part 1 from two weeks ago.

This week, I cover the all-important issue of "unbricking":

what to do when you mess something up and the plug doesn't boot

any more. Which happens quite a lot when you're getting started

with plugs.

Here it is: Un-Bricking Linux Plug Computers: uBoot, iBoot, We All Boot for uBoot.

If you want more exhaustive detail, particularly on those uBoot scripts and

how they work, I go through some of the details in a brain-dump I wrote

after two weeks of struggling to unbrick my first GuruPlug:

Building and installing a new kernel for a SheevaPlug.

But don't worry if that page isn't too clear;

I'll cover the kernel-building part more clearly in my next

LinuxPlanet article on Feb. 24.

Tags: tech, linux, plug, uboot, writing

[

10:15 Feb 10, 2011

More tech |

permalink to this entry |

]

Thu, 27 Jan 2011

My article this week on Linux Planet is an introduction to Plug

Computers: tiny Linux-based "wall wart" computers that fit in a

box not much bigger than a typical AC power adaptor.

Although they run standard Linux (usually Debian or Ubuntu),

there are some gotchas to choosing and installing plug computers.

So this week's article starts with the basics of choosing a model

and connecting to it; part II, in two weeks, will address more

difficult issues like how to talk to uBoot, flash a new kernel or

recover if things go wrong.

Read part I here: Tiny Linux Plug Computers: Wall Wart Linux Servers.

Tags: tech, linux, plug

[

19:36 Jan 27, 2011

More tech |

permalink to this entry |

]

Wed, 05 May 2010

On a Linux list, someone was having trouble with wireless networking,

and someone else said he'd had a similar problem and solved it by

reinstalling Kubuntu from scratch. Another poster then criticised

him for that: "if the answer is reinstall, you might as well downgrade to Windows,"

and later added, "we should understand a problem, and *then* choose a remedy to match."

As someone who spends quite a lot of time trying to track down root

causes of problems so that I can come up with a fix that doesn't

involve reinstalling, I thought that was unfair.

Here is how I replied on the list (or you can go straight to

the mailing list version):

I'm a big fan of understanding the root cause of a problem and solving it

on that basis. Because I am, I waste many days chasing down problems

that ought to "just work", and probably would "just work" if I

gave in and installed a bone stock Ubuntu Gnome desktop with no

customizations. Modern Linux distros (except maybe Gentoo) are

written with the assumption that you aren't going to change anything

-- so reverting to the original (reinstalling) will often fix a problem.

Understanding this stuff *shouldn't* take days of wasted time -- but

it does, because none of this crap has decent documentation. With a

lot of the underlying processes in Linux -- networking, fonts, sound,

external storage -- there are plenty of "Click on the System Settings

menu, then click on ... here's a screenshot" howtos, but not much

"Then the foo daemon runs the /etc/acpi/bar.sh script, which calls

ifconfig with these arguments". Mostly you have to reverse-engineer

it by running experiments, or read the source code.

Sometimes I wonder why I bother. It may be sort of obsessive-compulsive

disorder, but I guess it's better than washing my hands 'til they bleed,

or hoarding 100 cats. At least I end up with a nice customized system

and more knowledge about how Linux works. And no cat food expenses.

But don't get on someone's case because he doesn't have days to

waste chasing down deep understanding of a system problem. If

you're going to get on someone's case, go after the people who

write these systems and then don't document how they actually work,

so people could debug them.

Tags: linux, tech, documentation

[

19:37 May 05, 2010

More linux |

permalink to this entry |

]

Tue, 01 Dec 2009

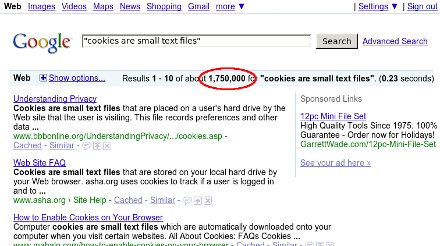

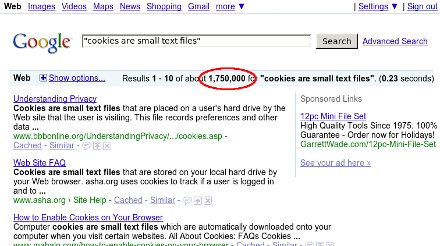

"Cookies are small text files which websites place on a visitor's

computer."

I've seen this exact phrase hundreds of times, most recently on a site

that should know better,

The Register.

I'm dying to know who started this ridiculous non-explanation,

and why they decided to explain cookies using an implementation

detail from one browser -- at least, I'm guessing IE must implement cookies

using separate small files, or must have done so at one point. Firefox

stores them all in one file, previously a flat file and now an sqlite

database.

How many users who don't know what a cookie is do know what a

"text file" is? No, really, I'm serious. If you're a geek, go ask a few

non-geeks what a text file is and how it differs from other files.

Ask them what they'd use to view or edit a text file.

Hint: if they say "Microsoft Word" or "Open Office",

they don't know.

And what exactly makes a cookie file "text" anyway?

In Firefox, cookies.sqlite is most definitely not a "text file" --

it's full of unprintable characters.
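You can check that for yourself. Here's a minimal Python sketch that creates a throwaway SQLite database (a stand-in for cookies.sqlite; the table is made up, not Firefox's actual schema) and then looks at its raw bytes. Every SQLite file starts with the 16-byte header "SQLite format 3" followed by a NUL byte, and the rest is binary pages, so even a crude "is this printable text?" test fails.

```python
import sqlite3
import string
import tempfile
from pathlib import Path

PRINTABLE = set(string.printable.encode("ascii"))   # printable ASCII plus whitespace


def looks_like_text(data):
    """Crude heuristic: treat data as text only if every byte is printable ASCII or whitespace."""
    return all(b in PRINTABLE for b in data)


def make_demo_db(path):
    """Create a tiny database standing in for cookies.sqlite (made-up schema)."""
    con = sqlite3.connect(path)
    con.execute("CREATE TABLE cookies (name TEXT, value TEXT)")
    con.execute("INSERT INTO cookies VALUES (?, ?)",
                ("__utma", "76673194.4936867407419370000.1243964826.1243871526.1243872726.2"))
    con.commit()
    con.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        db = Path(d) / "demo-cookies.sqlite"
        make_demo_db(db)
        data = db.read_bytes()
        print(data[:16])                 # the SQLite file header
        print(looks_like_text(data))     # False: full of unprintable bytes
```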

But even if IE stores cookies using printable characters --

have you tried to read your cookies?

I just went and looked at mine, and most of them looked something like this:

Name: __utma

Value: 76673194.4936867407419370000.1243964826.1243871526.1243872726.2

I don't know about you, but I don't spend a lot of time reading text

that looks like that.

Why not skip the implementation details entirely, and just tell users

what cookies are? Users don't care if they're stored in one file or many,

or what character set is used. How about this?

Cookies are small pieces of data which your web browser stores at the

request of certain web sites.
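That's really all there is to it at the protocol level: a site sends a Set-Cookie header with a name and a value, and the browser sends the value back in a Cookie header on later requests. How the browser files it away on disk is its own business. Here's a small sketch with Python's standard http.cookies module; the "session" cookie is a made-up example, and the __utma value is the one shown above.

```python
from http.cookies import SimpleCookie


def set_cookie_header(name, value, max_age):
    """What a web site sends: ask the browser to store name=value for max_age seconds."""
    jar = SimpleCookie()
    jar[name] = value
    jar[name]["path"] = "/"
    jar[name]["max-age"] = max_age
    return jar.output()          # a "Set-Cookie: ..." header line


def parse_cookie_header(header_value):
    """What the site gets back: the browser's Cookie header, as a dict of name -> value."""
    jar = SimpleCookie()
    jar.load(header_value)
    return {name: morsel.value for name, morsel in jar.items()}


if __name__ == "__main__":
    print(set_cookie_header("session", "abc123", 3600))
    print(parse_cookie_header(
        "session=abc123; __utma=76673194.4936867407419370000.1243964826.1243871526.1243872726.2"))
```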

I don't know who started this meme or why people keep copying it

without stopping to think.

But I smell a Fox Terrier. That was Stephen Jay Gould's example,

in his book Bully for Brontosaurus, of a

factoid invented by one writer and blindly copied by all who come later.

The fox terrier -- and no other breed -- was used universally for years to

describe the size of Eohippus. At least it was reasonably close;

Gould went on to describe many more examples where people copied the

wrong information, each successive textbook copying the last with

no one ever going back to the source to check the information.

It's usually a sign that the writer doesn't really understand what

they're writing. Surely copying the phrase everyone else uses must

be safe!

Tags: web, browsers, writing, skepticism, tech, firefox, mozilla

[

21:25 Dec 01, 2009

More tech/web |

permalink to this entry |

]

Sun, 11 Oct 2009

Ah, silence is golden!

For my birthday last month, Dave gave me a nice pair of Bose powered

speakers to replace the crappy broken set I'd been using.

Your basic computer speakers, except I actually use them primarily

for a little portable radio I listen to while hacking.

Only one problem: they had a major hum as soon as I turned them on.

Even when I turned on the radio, I could hear the hum in the

background. It got better if I turned the speakers way down and

the radio up -- it wasn't coming from the radio.

After about a month it was starting to irritate me.

I mentioned it on #linuxchix to see if anyone had any insights.

Maria and Wolf did, and narrowed it down pretty quickly to some sort

of ground problem. The speakers need to get a real ground from somewhere.

They don't get it through their AC power plug (a two-prong wall wart).

They also don't get it from the radio, which is plugged in to AC

via its own 2-prong wall wart, so it doesn't have a ground either.

How could I test this? Wolf suggested an alligator clip going from

one of the RCA plugs on the back of the speaker to my computer's case.

But it turned out there was an easier way. These speakers have dual

inputs: a second set of RCA plugs so I can have another cable going

to an MP3 player, radio or whatever, without needing to unplug

from the radio first. I ran a spare cable from these second RCA plugs

to the sound card output of my spare computer -- bingo!

The hum entirely went away.

I suppose most people buy this type of speaker for use with computers,

so it isn't a problem. But I was surprised that they'd adapt so

poorly to a portable device like a radio or MP3 player. Is that so uncommon?

Tags: tech, audio

[

21:49 Oct 11, 2009

More tech |

permalink to this entry |

]

Tue, 01 Sep 2009

It's so easy as a techie to forget how many people tune out anything

that looks like it has to do with technology.

I've been following the terrible "Station fire" that's threatening

Mt Wilson observatory as well as homes and firefighters' lives

down in southern California. And in addition to all the serious

and useful URLs for tracking the fire, I happened to come across

this one:

http://iscaliforniaonfire.com/

Very funny! I laughed, and so did the friends with whom I shared it.

So when a non-technical mailing list

began talking about the fire, I had to share it, with the comment

"Here's a useful site I found for tracking the status of California fires."

Several people laughed (not all of them computer geeks).

But one person said,

All it said was "YES." No further comments.

The joke seems obvious, right? But think about it: it's only funny

if you read the domain name before you go to the page.

Then you load the page, see what's there, and laugh.

But if you're the sort of person who immediately tunes out when you

see a URL -- because "that's one of those technical things I don't

understand" -- then the page wouldn't make any sense.

I'm not going to stop sharing techie jokes that require some

background -- or at least the ability to read a URL.

But sometimes it's helpful to be reminded of how a lot of the

world looks at things. People see anything that looks "technical" --

be it an equation, a Latin word, or a URL -- and just tune out.

The rest of it might as well not be there -- even if the words

following that "http://" are normal English you think anyone

should understand.

Tags: tech, humor

[

21:48 Sep 01, 2009

More misc |

permalink to this entry |

]

Fri, 12 Jun 2009

My last Toastmasters speech was on open formats: why you should use

open formats rather than closed/proprietary ones and the risks of

closed formats.

To make it clearer, I wanted to print out handouts people could take home

summarizing some of the most common closed formats, along with

open alternatives.

Surely there are lots of such tables on the web, I thought.

I'll just find one and customize it a little for this specific audience.

To my surprise, I couldn't find a single one. Even

openformats.org didn't

have very much.

So I started one:

Open vs. Closed Formats.

It's far from complete, so

I hope I'll continue to get contributions to flesh it out more.

And the talk? It went over very well, and people appreciated the

handout. There's a limit to how much information you can get across

in under ten minutes, but I think I got the point across.

The talk itself, such as it is, is here:

Open up!

Tags: tech, formats, open source, toastmasters

[

11:37 Jun 12, 2009

More tech |

permalink to this entry |

]

Wed, 10 Jun 2009

Lots has been written about Bing, Microsoft's new search engine.

It's better than Google, it's worse than Google, it'll never catch

up to Google. Farhad Manjoo of Slate had perhaps the best reason

to use Bing:

"If you switch,

Google's going to do some awesome things to try to win you back."

![[Bing in Omniweb thinks we're in Portugal]](http://shallowsky.com/blog/images/screenshots/omnigalT.jpg)

But what I want to know about Bing is this:

Why does it think we're in Portugal when Dave runs it under Omniweb on Mac?

In every other browser it gives the screen you've probably seen,

with side menus (and a horizontal scrollbar if your window isn't

wide enough, ugh) and some sort of pretty picture as a background.

In Omniweb, you get a cleaner layout with no sidebars or horizontal

scrollbars, a different pretty picture -- often

prettier than the one you get on all the other browsers, though

both images change daily -- and a set of toggle buttons that don't

show up in any of the other browsers, letting you restrict results

to only English or only results from Portugal.

Why does it think we're in Portugal when Dave uses Omniweb?

Equally puzzling, why do only people in Portugal have the option

of restricting the results to English only?

Tags: tech, browsers, mapping, geolocation, GIS

[

10:37 Jun 10, 2009


]

Sat, 15 Nov 2008

Dave and I recently acquired a lovely trinket from a Mac-using friend:

an old 20-inch Apple Cinema Display.

I know what you're thinking (if you're not a Mac user): surely

Akkana's not lustful of Apple's vastly overpriced monitors when

brand-new monitors that size are selling for under $200!

Indeed, I thought that until fairly recently. But there actually

is a reason the Apple Cinema displays cost so much more than seemingly

equivalent monitors -- and it's not the color and shape of the bezel.

The difference is that Apple Cinema displays use a technology called

S-IPS, while normal consumer LCD monitors -- the ones you

see at Fry's going for around $200 for a 22-inch 1680x1050 -- use

a technology called TN. (There's a third technology in between the

two called S-PVA, but it's rare.)

The main differences are color range and viewing angle.

The TN monitors can't display full color: they're only

6 bits per channel. They simulate colors outside that range

by cycling very rapidly between two similar colors

(this is called "dithering" but it's not the usual use of the term).

Modern TN monitors are

astoundingly fast, so they can do this dithering faster than

the eye can follow, but many people say they can still see the

color difference. S-IPS monitors show a true 8 bits per color channel.
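The frame-cycling trick described above is usually called temporal dithering, or frame rate control (FRC). Here's a minimal Python sketch of the idea, not how any particular panel does it: it assumes a simple linear mapping where 6-bit level k stands in for 8-bit value 4k, while real panels apply their own gamma curves and dithering patterns. The function names are just for illustration.

```python
# Simplified model of temporal dithering (frame rate control) on a 6-bit panel.
# Assumption: 6-bit level k is treated as 8-bit value 4*k (a linear mapping);
# real panels use their own gamma and dithering patterns.

COLORS_6BIT = (2 ** 6) ** 3   # 64 levels per channel -> 262,144 colors
COLORS_8BIT = (2 ** 8) ** 3   # 256 levels per channel -> 16,777,216 colors


def frc_frames(value8, frames=4):
    """Return the 6-bit levels shown over `frames` frames to approximate value8."""
    if not 0 <= value8 <= 255:
        raise ValueError("value8 must be in the range 0-255")
    base, remainder = divmod(value8, 4)  # lower 6-bit level, leftover quarter steps
    high = min(base + 1, 63)             # a 6-bit channel can't go above level 63
    # Show the brighter level in `remainder` out of every 4 frames.
    return [high if i % 4 < remainder else base for i in range(frames)]


def perceived_8bit(levels):
    """Average the displayed 6-bit levels, expressed in 8-bit units."""
    return sum(levels) / len(levels) * 4


if __name__ == "__main__":
    for v in (128, 130, 255):
        shown = frc_frames(v)
        print(v, shown, perceived_8bit(shown))
```

In this model an 8-bit value of 130 comes out as levels 33, 33, 32, 32 over four frames, which averages back to 130. Values 252 through 255 all collapse to level 63, so even with the cycling trick the simplified 6-bit panel can't reach every color a true 8-bit panel can.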

The viewing angle difference is much easier to see. The published

numbers are similar, something like 160 degrees for TN monitors versus

180 degrees for S-IPS, but that doesn't begin to tell the story.

Align yourself in front of a TN monitor, so the colors look right.

Now stand up, if you're sitting down, or squat down if you're

standing. See how the image suddenly goes all inverse-video,

like a photographic negative only worse? Try that with an S-IPS monitor,

and no matter where you stand, all that happens is that the image

gets a little less bright.

(For those wanting more background, read

TN Film, MVA,

PVA and IPS – Which one's for you?, the articles on

TFT Central,

and the Wikipedia

article on LCD technology.)

Now, the comparison isn't entirely one-sided. TN monitors have their

advantages too. They're outrageously inexpensive. They're blindingly

fast -- gamers like them because they don't leave "ghosts" behind

fast-moving images. And they're very power efficient (S-IPS monitors

are only a little better than a CRT). But clearly, if you spend a lot

of time editing photos and an S-IPS monitor falls into your

possession, it's worth at least trying out.

But how? The old Apple Cinema display has a nonstandard connector,

called ADC, which provides video, power and USB1 all at once.

It turns out the only adaptor from a PC video card with DVI output

(forget about using an older card that supports only VGA) to an ADC

monitor is the $99 adaptor from the Apple store. It comes with a power

brick and USB plug.

Okay, that's a lot for an adaptor, but it's the only game in town,

so off I went to the Apple store, and a very short time later I had

the monitor plugged in to my machine and showing an image. (On Ubuntu

Hardy, simply removing xorg.conf was all I needed, and X automatically

detected the correct resolution. But eventually I put back one section

from my old xorg.conf, the keyboard section that specifies

"XkbOptions" to be "ctrl:nocaps".)

And oh, the image was beautiful. So sharp, clear, bright and colorful.

And I got it working so easily!

Of course, things weren't as good as they seemed (they never are, with

computers, are they?). Over the next few days I collected a list of

things that weren't working quite right:

- The Apple display had no brightness/contrast controls; I got

a pretty bad headache the first day sitting in front of that

full-brightness screen.

- Suspend didn't work. And here when I'd made so much progress

getting suspend to work on my desktop machine!

- While X worked great, the text console didn't.

The brightness problem was the easiest. A little web searching led me

to acdcontrol, a

commandline program to control brightness on Apple monitors.

It turns out that it works via the USB plug of the ADC connector,

which I initially hadn't connected (having not much use for another

USB 1.1 hub). Naturally, Ubuntu's udev/hal setup created the device

in a nonstandard place and with permissions that only worked for root,

so I had to figure out that I needed to edit

/etc/udev/rules.d/20-names.rules and change the hiddev line to read:

KERNEL=="hiddev[0-9]*", NAME="usb/%k", GROUP="video", MODE="0660"

That did the trick, and after that acdcontrol worked beautifully.
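If acdcontrol still can't open the device after a rule change like this, it helps to check what group and permissions the node actually ended up with. Here's a small hypothetical Python helper for that (check_hiddev is my own name, not part of acdcontrol or udev). It assumes the nodes land under /dev/usb/ as the NAME="usb/%k" rule specifies, and since the rule sets GROUP="video" with mode 0660, it assumes your account is in the video group.

```python
import glob
import grp
import os
import stat


def check_hiddev(pattern="/dev/usb/hiddev*"):
    """List matching device nodes with their group, mode string, and whether
    the current user can both read and write them."""
    results = []
    for path in sorted(glob.glob(pattern)):
        st = os.stat(path)
        try:
            group = grp.getgrgid(st.st_gid).gr_name
        except KeyError:                 # gid has no name in the group database
            group = str(st.st_gid)
        usable = os.access(path, os.R_OK | os.W_OK)
        results.append((path, group, stat.filemode(st.st_mode), usable))
    return results


if __name__ == "__main__":
    nodes = check_hiddev()
    if not nodes:
        print("No hiddev nodes found; is the ADC's USB plug connected?")
    for path, group, mode, usable in nodes:
        print(f"{path}: group={group} mode={mode} read/write={'yes' if usable else 'NO'}")
```

A node showing group video and mode crw-rw---- but read/write=NO usually means the account isn't in that group yet (or hasn't logged in again since being added).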

On the second problem, I never did figure out why suspending with

the Apple monitor always locked up the machine, either during suspend

or resume. I guess I could live without suspend on a desktop, though I

sure like having it.

The third problem was the killer. Big deal, who needs text consoles,

right? Well, I use them for debugging. But more important, the grub

screen was broken too (I could no longer choose kernels or boot options),

and so was the BIOS screen (not something I need very often, but

when you need it you really need it).

In fact, the text console itself wasn't the problem. It turns out the

problem is that the Apple display won't accept a 640x480 signal.

I tried building a kernel with framebuffer enabled, and indeed,

that gave me back my boot messages and text consoles (at 1280x1024),

but still no grub or BIOS screens. It might be possible to hack a grub

that could display at 1280x1024. But never being able to change BIOS

parameters would be a drag.

The problems were mounting up. Some had solutions; some required

further hacking; some didn't have solutions at all. Was this monitor

worth the hassle? But the display was so beautiful ...

That was when Dave discovered TFT

Central's search page -- and we learned that the Dell 2005FPW

uses the exact same Philips panel as the

Apple, and there are lots of them for sale used.

That sealed it -- Dave took the Apple monitor (he has a Mac, though

he'll need a solution for his Linux box too) and I bought a Dell.

Its image is just as beautiful as the Apple's (and the bezel is nicer)

and it works with DVI or VGA, works at resolutions down to 640x480

and even has a powered speaker bar attached.

Maybe it's possible to make an old Apple Cinema display work fully on a PC.

But it's way too much work. On a PC, the Dell is a much better bet.

Tags: linux, tech, graphics, monitor, S-IPS, TN, ADC, DVI

[

21:57 Nov 15, 2008


]

Wed, 08 Oct 2008

Dear Asus, and other manufacturers who make Eee imitations:

The Eee laptops are mondo cool. So lovely and light.

Thank you, Asus, for showing that it can be done and that there's

lots of interest in small, light, cheap laptops, thus inspiring

a bazillion imitators. And thank you even more for offering

Linux as a viable option!

Now would one of you please, please offer some models that

have at least XGA resolution so I can actually buy one? Some of us

who travel with a laptop do so in order to make presentations.

On projectors that use 1024x768.

So far HP is the only manufacturer to offer WXGA, in the Mini-Note.

But I read that Linux support is poor for the "Chrome 9" graphics

chip, and reviewers seem very underwhelmed with the Via C7

processor's performance and battery life.

Rumours of a new Mini-Note with a Via Nano or, preferably, Intel

Atom and Intel graphics chip, keep me waiting.

C'mon, won't somebody else step up and give HP some competition?

It's so weird to have my choice of about 8 different 1024x600

netbook models under $500, but if I want another 168 pixels

vertically, the price from everyone except HP jumps to over $2000.

Folks: there is a marketing niche here that you're missing.

Tags: tech, netbook, laptop, resolution, projector

[

22:50 Oct 08, 2008
